AI Bias In Healthcare: Types, Examples, Impacts And Solutions

Overview Of AI Bias In Healthcare: A Key Issue For The Future

Have you considered how AI bias in healthcare could impact your business? For healthcare providers, clinics, and hospitals, overlooking bias in AI systems can lead to inaccurate diagnoses, unequal treatment, and significant legal risks. This article from MOR Software will provide your business with comprehensive insights and solutions to detect and reduce bias, enhancing care quality and safeguarding your organization’s reputation.

What Is AI Bias In Healthcare?

AI bias in healthcare refers to the phenomenon where artificial intelligence systems make predictions, diagnoses, or decisions that are systematically less accurate or less favorable for certain patient groups, such as women, ethnic minorities, or low-income patients. This issue has become increasingly critical in modern medicine as AI is now widely applied in diagnostic support, medical imaging analysis, and even treatment planning.

Examples of AI bias in healthcare include:

- Heart disease diagnosis in women: Because training datasets often contain more data from male patients, AI systems may underestimate the severity of symptoms in women, resulting in misdiagnosis or delayed treatment.

- Dermatology image analysis: Many AI models are trained primarily on images of lighter skin tones. As a result, they show a higher rate of false negatives for patients with darker skin.

- Organ transplant allocation algorithms: When healthcare costs are used as a proxy for medical need, wealthier patients can be unintentionally prioritized over lower-income patients, a clear case of algorithmic bias in healthcare AI.

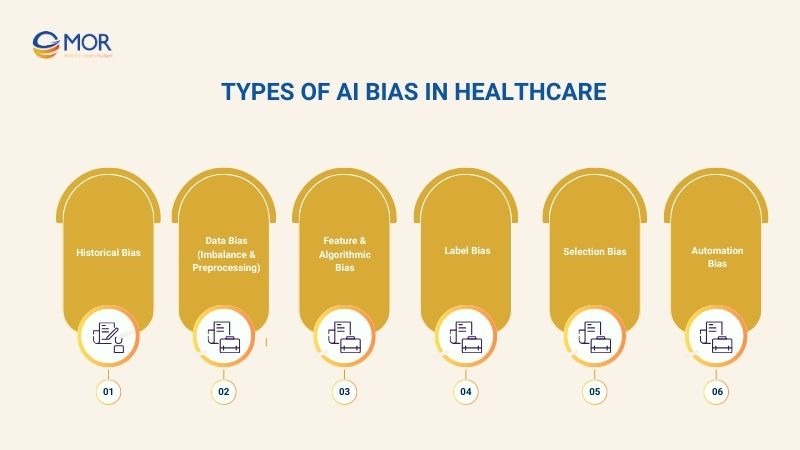

Types Of AI Bias In Healthcare

In practice, there are many types of AI biases in healthcare. To fully understand the nature of bias in healthcare AI, we need to examine each specific type of bias and its impact on treatment outcomes.

Historical Bias

Historical bias in healthcare occurs when long-standing inequalities in medical practice are “copied” into training data and carried forward into AI systems. As a result, models not only reflect but also reinforce the systemic disparities that have existed for decades in healthcare delivery.

A typical example is the underdiagnosis of minority groups. For many years, symptoms in these populations were often overlooked or underestimated. When medical records containing these biases are used for training, AI systems replicate the same flawed patterns, leading to delayed or missed diagnoses.

This is a clear case of algorithmic bias in healthcare AI, where past injustices are not eliminated but instead amplified through automated systems.

Data Bias (Imbalance & Preprocessing)

One of the most common sources of AI biases in healthcare is data bias. When training datasets fail to represent all demographic groups adequately, AI predictions become skewed. For instance, if a diabetes dataset contains mostly urban patients, the model may perform poorly on rural populations.

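Preprocessing steps such as resampling or data cleaning are meant to improve the quality and balance of the data. However, if applied incorrectly, they may intensify the problem rather than solve it; for example, dropping records with missing values can disproportionately remove patients whose records are less complete.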
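A simple first check for data and selection bias is to compare each group's share of the training data with its share of the population the model will serve. The Python sketch below is a minimal illustration, assuming patient records are stored as dictionaries; the `representation_report` function, the `setting` field, the reference shares, and the 0.8 cutoff are all hypothetical choices made for this example.

```python
from collections import Counter

def representation_report(records, group_key, reference_shares, min_ratio=0.8):
    """Compare each group's share of a dataset with its share of a reference population.

    records: list of dicts, one per patient (hypothetical schema).
    group_key: name of the field holding the group, e.g. "setting".
    reference_shares: assumed share of each group in the served population.
    min_ratio: flag a group whose observed share is below min_ratio * expected share.
    """
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        report[group] = {
            "observed": round(observed, 3),
            "expected": expected,
            "underrepresented": observed < min_ratio * expected,
        }
    return report

# Toy diabetes dataset: 90 urban and 10 rural patients, compared with an
# assumed served population that is 80% urban and 20% rural.
records = [{"setting": "urban"}] * 90 + [{"setting": "rural"}] * 10
report = representation_report(records, "setting", {"urban": 0.8, "rural": 0.2})
for group, row in report.items():
    print(group, row)
# urban {'observed': 0.9, 'expected': 0.8, 'underrepresented': False}
# rural {'observed': 0.1, 'expected': 0.2, 'underrepresented': True}
```

The same check can be run for sex, ethnicity, or income band. A flagged group suggests that more data should be collected, or at least that the model should be validated separately for that group before deployment.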

Feature & Algorithmic Bias

Feature and algorithmic bias arise when the choice of variables or algorithm design unintentionally encodes social inequities. For example, including ZIP codes or income levels as input features may lead the system to prioritize wealthier patients, regardless of actual medical needs.

Moreover, algorithms that optimize for overall accuracy while ignoring subgroup performance may worsen disparities, because a model can score well on average while performing poorly on smaller groups. Such outcomes illustrate that bias in healthcare AI can emerge not only from data but also from human design decisions.
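To see how a single accuracy figure can hide a subgroup failure, the Python sketch below computes overall accuracy alongside per-group accuracy. The data is synthetic, and the helper names `accuracy` and `subgroup_accuracy` are illustrative rather than taken from any particular library.

```python
def accuracy(pairs):
    """Fraction of (label, prediction) pairs where the prediction is correct."""
    return sum(y == p for y, p in pairs) / len(pairs)

def subgroup_accuracy(y_true, y_pred, groups):
    """Return overall accuracy and a dict of accuracy within each subgroup."""
    by_group = {}
    for y, p, g in zip(y_true, y_pred, groups):
        by_group.setdefault(g, []).append((y, p))
    overall = accuracy(list(zip(y_true, y_pred)))
    return overall, {g: accuracy(pairs) for g, pairs in by_group.items()}

# Synthetic data: 90 majority-group and 10 minority-group patients, all with the
# condition. The model detects 88 of 90 majority cases but only 5 of 10 minority cases.
y_true = [1] * 100
y_pred = [1] * 88 + [0] * 2 + [1] * 5 + [0] * 5
groups = ["majority"] * 90 + ["minority"] * 10

overall, per_group = subgroup_accuracy(y_true, y_pred, groups)
print(f"overall: {overall:.2f}")  # overall: 0.93
for g, acc in per_group.items():
    print(f"{g}: {acc:.2f}")
# majority: 0.98
# minority: 0.50
```

An optimizer that only sees the 0.93 figure has little reason to improve, even though the model misses half of the minority group's cases.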

Interpretability & Transparency Bias

In many healthcare systems, AI functions as a “black box,” making it difficult to understand why a specific decision was made. This problem, known as interpretability and transparency bias, prevents medical professionals from properly evaluating the fairness of AI outputs.

Without explainability, detecting and correcting bias becomes nearly impossible. This is why publications such as the review “Fairness of Artificial Intelligence in Healthcare: Review and Recommendations” stress the importance of transparent, interpretable models. Only when clinicians can verify AI decisions can safety and equity be ensured for patients.

Label Bias

Label bias occurs when medical data is labeled inaccurately or inconsistently, often due to human subjectivity in diagnosis.

For instance, chest pain in women is sometimes labeled as “mild” or “non-serious.” When AI systems are trained on these biased labels, they learn to underestimate the risk of heart disease in female patients. This is a clear example of bias in artificial intelligence, showing how even small labeling errors can lead to major consequences in diagnosis and treatment.
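One practical way to surface inconsistent labeling is to have two clinicians label the same cases independently and measure how often they agree beyond chance. The Python sketch below computes Cohen's kappa, a standard chance-corrected agreement statistic, from scratch; the labels are made up for illustration.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators labeling the same cases.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e
    is the agreement expected if each annotator labeled at random using their
    own label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / (n * n)
    if p_e == 1:
        return 1.0  # both annotators used one identical label for every case
    return (p_o - p_e) / (1 - p_e)

# Two clinicians independently label the same 10 chest-pain cases (made-up data).
clinician_1 = ["serious"] * 5 + ["mild"] * 5
clinician_2 = ["serious"] * 3 + ["mild"] * 6 + ["serious"]
kappa = cohens_kappa(clinician_1, clinician_2)
print(f"Cohen's kappa: {kappa:.2f}")  # Cohen's kappa: 0.40
```

Kappa measures consistency, not correctness: two annotators can agree perfectly on a biased label. Computing it separately for female and male patients can, however, show whether labels are noticeably less consistent for one group, which is a signal to tighten the labeling guidelines.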

Selection Bias

Selection bias arises when datasets are drawn from populations that are not representative of the broader patient community. For example, an AI model trained primarily on data from large urban hospitals may not perform well when applied to rural populations.

As a result, the model delivers accurate predictions for one group but fails in real-world applications across diverse demographics. This is a common form of bias in healthcare AI, particularly in systems where medical data is fragmented and unevenly distributed.

Automation Bias

Automation bias occurs when healthcare providers place excessive trust in AI outputs. In such cases, they may downplay their own clinical judgment or ignore patient-specific signals.

For example, if an AI system suggests that a patient shows no signs of stroke, a physician may delay intervention, even if symptoms are evident. This represents a dangerous form of artificial intelligence bias in healthcare, as the risk stems not only from the technology itself but also from the way humans interact with it.

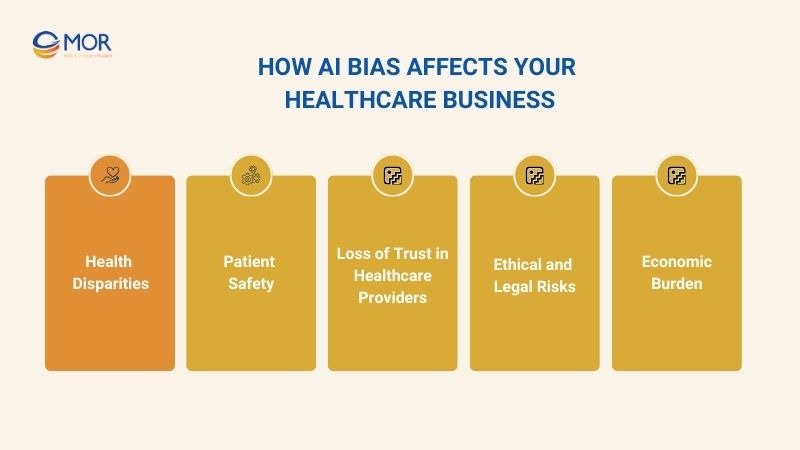

How AI Bias Affects Your Healthcare Business

The impact of bias in AI in healthcare goes beyond diagnostic accuracy or treatment outcomes; it directly affects the operations and reputation of healthcare organizations. To understand the full scope of these risks, we need to examine the specific consequences that AI bias can have on healthcare businesses.

Health Disparities

One of the most severe consequences of AI biases in healthcare is the widening of disparities in medical care among different population groups. When training data or algorithms are already biased, AI tends to deliver more accurate diagnostic and treatment outcomes for majority populations, while minority groups receive less reliable results.

For example, a study from the University of Michigan using the well-known MIMIC ICU dataset found that the medical testing rate for white patients was about 4.5% higher than for Black patients with the same age, gender, and emergency status. This shows how pre-existing inequalities in healthcare data can be embedded into AI models, reinforcing unfair outcomes.

Patient Safety

When AI models are biased, the quality of diagnosis and treatment declines, creating direct risks to patient safety. Biased predictions can delay disease detection or lead to incorrect treatment plans.

Many documented cases of AI bias in healthcare involve severe medical consequences, including complications and increased mortality. Patient safety therefore remains one of the most critical challenges in deploying AI in healthcare.

Loss of Trust in Healthcare Providers

Patient trust is the foundation of effective healthcare delivery. However, when patients perceive AI systems as biased or unfair, that trust quickly erodes.

Biased AI not only creates doubt about the technology but also damages the reputation of the healthcare providers who use it. Once trust declines, the adoption and scaling of new AI-driven technologies across the healthcare sector slow significantly.

Ethical and Legal Risks

AI bias is not only a technical issue but also a major ethical and legal challenge. A 2019 study revealed that Optum’s healthcare risk prediction algorithm in the U.S. used healthcare costs as a proxy for patient “health needs.” Because Black patients historically received less care, and therefore generated lower costs at the same level of need, the algorithm systematically underestimated their true medical needs.
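The proxy problem can be reproduced with a toy calculation. The Python sketch below uses synthetic patients and simply assumes that group B received less care, so its costs are 40% lower than group A's at the same level of need. It is not the algorithm examined in the 2019 study; it only shows that ranking patients by cost, which is what even a perfect cost predictor would do, selects a different set of patients than ranking by need.

```python
# Synthetic patients as (id, group, true_need, annual_cost).
# Assumption for illustration: group "B" has the same needs as group "A"
# but historically received less care, so its costs are 40% lower.
patients = []
for i, need in enumerate([9, 8, 7, 6, 5, 4]):
    patients.append((f"A{i}", "A", need, need * 1000))
    patients.append((f"B{i}", "B", need, need * 600))

def top_k(patients, score, k):
    """Ids of the k highest-scoring patients (ties keep their original order)."""
    ranked = sorted(patients, key=score, reverse=True)
    return [p[0] for p in ranked[:k]]

by_cost = top_k(patients, score=lambda p: p[3], k=4)  # proxy target
by_need = top_k(patients, score=lambda p: p[2], k=4)  # what actually matters

print("selected by cost:", by_cost)  # selected by cost: ['A0', 'A1', 'A2', 'A3']
print("selected by need:", by_need)  # selected by need: ['A0', 'B0', 'A1', 'B1']
```

Ranking by cost selects no group B patients at all, even though the sickest group B patients are exactly as sick as the sickest group A patients.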

Reviews and recommendations on the fairness of artificial intelligence in healthcare also stress that AI systems must comply with legal frameworks such as HIPAA, GDPR, and anti-discrimination laws. Failing to do so exposes healthcare businesses to serious liability, lawsuits, and reputational damage.

Economic Burden

Beyond ethics and safety, AI bias also generates a significant economic burden. Misdiagnoses lead to repeat visits, unnecessary testing, and prolonged treatments, all of which increase operational costs.

At scale, these errors can add substantial financial strain to healthcare systems. This is why tracking AI bias statistics and proactively managing financial risks from biased algorithms are critical to the sustainability of healthcare businesses.

Real-World Examples Of AI Bias In Healthcare You Should Know

In reality, there are many examples of AI bias in healthcare that clearly demonstrate the harmful effects of skewed data and unfair algorithms. The following cases show how AI biases in healthcare can lead to misdiagnosis, increased mortality risk, and greater inequities in treatment outcomes.

Misdiagnosis of Heart Disease in Women

In healthcare, one of the clearest examples of AI bias is the poor performance of AI systems in diagnosing heart disease in women. Most training datasets are built predominantly on male patients, while female patients often present different symptoms, such as shortness of breath, fatigue, or nausea. Without representative data, AI tends to overlook these signals and deliver biased results.

The impact of this bias in healthcare AI is severe. Women often receive delayed or inaccurate diagnoses, which leads to higher complication rates and greater mortality risk. Beyond the clinical consequences, this form of bias also worsens the gender gap in healthcare, an inequality that has long existed due to systemic underrepresentation in clinical research.

Skin Cancer Detection for Darker Skin Tones

Most dermatology image datasets used for training AI are dominated by lighter skin tones, while images of patients with darker skin remain underrepresented. As a result, machine learning models can accurately detect lesions on lighter skin but perform poorly on darker tones.

This type of AI bias in healthcare leads to higher false-negative rates among patients with darker skin, meaning that cases of skin cancer are more likely to be missed. The consequence is delayed treatment and increased mortality risk.

Organ Transplant Priority and Socioeconomic Bias

Another example of bias in healthcare AI is unfairness in organ transplant allocation. Some algorithms designed to prioritize transplant candidates use healthcare costs as a proxy for medical need. However, spending levels are not an accurate measure of health status; they mainly reflect access to care and socioeconomic status.

In practice, this results in wealthier patients, or those from more developed regions, receiving higher transplant priority, while poorer patients are pushed down the list. This form of artificial intelligence bias in healthcare is particularly harmful, as it creates inequities in life-or-death situations and contradicts core principles of medical fairness and ethics.

Best Practices To Reduce AI Bias In Healthcare

In modern healthcare, reducing AI bias is key to improving diagnosis quality and treatment effectiveness and to ensuring fairness. To achieve this, the healthcare industry needs to apply the following best practices:

Build and Balance Diverse Datasets

Building and balancing datasets is a crucial step in reducing AI bias in healthcare. Data collection must ensure diversity in gender, ethnicity, geography, and income levels so that the system accurately reflects real-world healthcare needs.

Beyond quantity, the data must be of high quality and representative, especially for minority and underserved populations. This keeps training datasets from being skewed, thereby reducing the risk of AI producing biased decisions.

Improve Labeling and Preprocessing Practices

To reduce AI bias in healthcare, labeling and preprocessing practices must be standardized and tightly controlled. This includes setting clear labeling guidelines to minimize human subjectivity and ensuring training data accurately reflects the characteristics of different patient groups.

Additionally, applying bias-correction techniques during preprocessing is essential to balance data before feeding it into models. This process helps prevent systemic errors early on, thereby improving fairness in healthcare AI and enhancing the reliability of diagnostic and treatment results.
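One well-known preprocessing technique of this kind is reweighing (Kamiran and Calders), which gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and outcome label become statistically independent in the weighted data. The Python sketch below is a minimal from-scratch version on made-up data and is not tied to any specific fairness toolkit.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label),
    estimated from counts, so that group and label are independent
    in the weighted training data."""
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        n_group[g] * n_label[y] / (n * n_joint[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Made-up data: group A has 4 of 6 positive labels, group B only 1 of 4.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

def weighted_positive_rate(group):
    """Share of positive labels in `group` after applying the weights."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total

print(f"A: {weighted_positive_rate('A'):.2f}, B: {weighted_positive_rate('B'):.2f}")
# A: 0.50, B: 0.50  (unweighted: A 0.67, B 0.25)
```

Many training routines accept per-example weights; for instance, scikit-learn's `LogisticRegression.fit` takes a `sample_weight` argument. Note that reweighing equalizes positive-label rates across groups, which is only appropriate when the observed difference reflects bias rather than a genuine difference in disease prevalence.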

Continuous Model Testing and Monitoring

A crucial step in reducing bias in artificial intelligence in healthcare is continuous model testing and monitoring. This involves regular audits that check performance across different subgroups using fairness metrics, rather than relying on overall accuracy alone.

Tracking fairness metrics alongside accuracy allows bias to be detected early, so algorithms can be adjusted and improved in time. Continuous testing and monitoring not only keep healthcare AI effective but also build trust in AI-driven healthcare systems.
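A recurring fairness audit can be as simple as computing the model's sensitivity (true positive rate) for each patient group on recently labeled cases and raising an alert when the gap between groups exceeds a tolerance. This gap is often called the equal opportunity difference, and low sensitivity for a group is the same thing as the high false-negative rate described in the skin cancer example. The Python sketch below uses synthetic data; the group names and the 0.05 tolerance are illustrative assumptions.

```python
def sensitivity_by_group(y_true, y_pred, groups):
    """True positive rate (sensitivity) for each group that has actual positives."""
    rates = {}
    for g in sorted(set(groups)):
        hits = [p for y, p, gi in zip(y_true, y_pred, groups) if gi == g and y == 1]
        if hits:  # sensitivity is undefined for a group with no actual positives
            rates[g] = sum(hits) / len(hits)
    return rates

def fairness_audit(y_true, y_pred, groups, max_gap=0.05):
    """Return per-group sensitivity, the largest gap between groups
    (equal opportunity difference), and whether it exceeds max_gap."""
    rates = sensitivity_by_group(y_true, y_pred, groups)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap

# Synthetic monthly batch of labeled skin-lesion cases (1 = malignant / flagged).
y_true = [1] * 20 + [0] * 30 + [1] * 10 + [0] * 20
y_pred = [1] * 18 + [0] * 32 + [1] * 6 + [0] * 24
groups = ["lighter"] * 50 + ["darker"] * 30

rates, gap, alert = fairness_audit(y_true, y_pred, groups)
print(rates)                           # {'darker': 0.6, 'lighter': 0.9}
print(f"gap={gap:.2f} alert={alert}")  # gap=0.30 alert=True
```

Sensitivity is only one of several fairness metrics; which one to monitor depends on whether missed diagnoses or false alarms are the greater clinical risk for the application.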

Enhance Transparency and Explainability

Transparency and explainability are vital factors in reducing bias in healthcare AI. Using interpretable models where possible helps clinicians understand how AI makes decisions, allowing them to spot and manage potential bias.

Providing explanations for AI predictions to healthcare professionals allows them to evaluate and cross-check AI results with real clinical data. Transparency and explainability not only improve fairness in healthcare AI but also increase trust in AI-driven healthcare solutions.
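For an interpretable model such as logistic regression, each feature's contribution to a prediction is simply its weight times its value, so a clinician can see what drove a given risk score. The Python sketch below uses a hypothetical model: the intercept, feature names, and weights are invented for illustration and are not clinically validated.

```python
import math

# Hypothetical logistic risk model; intercept and weights are illustrative only.
INTERCEPT = -4.0
WEIGHTS = {
    "age_decades": 0.5,               # age in decades (60 years -> 6)
    "systolic_over_120_per_10": 0.3,  # systolic BP above 120 mmHg, per 10 mmHg
    "smoker": 0.8,                    # 1 if current smoker, else 0
    "diabetic": 0.6,                  # 1 if diabetic, else 0
}

def explain(patient):
    """Return predicted risk and each feature's contribution to the log-odds,
    sorted by absolute size (largest first)."""
    contributions = {f: w * patient[f] for f, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    risk = 1 / (1 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return risk, ranked

patient = {"age_decades": 6, "systolic_over_120_per_10": 2, "smoker": 1, "diabetic": 0}
risk, ranked = explain(patient)
print(f"predicted risk: {risk:.2f}")  # predicted risk: 0.60
for feature, contribution in ranked:
    print(f"  {feature}: {contribution:+.2f}")
```

If a socioeconomic proxy, such as a ZIP-code-based feature, regularly appeared near the top of these rankings, that would be a cue to investigate the model for feature bias.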

Train and Educate Healthcare Professionals

Training and educating healthcare professionals is a key factor in mitigating AI bias in healthcare. Providing knowledge about the limitations of AI helps clinicians identify and challenge decisions that are inaccurate or biased.

Encouraging a human-in-the-loop approach when applying AI reduces the risk of blind reliance on AI outputs. This not only ensures patient safety but also promotes fairness and accountability in AI healthcare applications.
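A human-in-the-loop policy can also be made explicit in the software that delivers AI outputs. The Python sketch below uses a hypothetical `route` function and an illustrative 0.2 to 0.8 uncertainty band: uncertain predictions go to mandatory clinician review, and low-risk predictions are escalated whenever the clinician has recorded red-flag symptoms, which addresses the stroke scenario described under automation bias.

```python
from dataclasses import dataclass

@dataclass
class Routing:
    patient_id: str
    probability: float
    action: str

def route(patient_id, probability, red_flags=False, low=0.2, high=0.8):
    """Decide how an AI output reaches the clinician (hypothetical policy).

    - Uncertain outputs (strictly between low and high) require clinician review.
    - A low-risk output is also sent for review when the clinician has recorded
      red-flag symptoms, so the model cannot silently override them.
    - Every other output is shown only as a suggestion; the clinician decides.
    """
    if low < probability < high or (red_flags and probability < high):
        action = "clinician_review"
    elif probability >= high:
        action = "suggest_positive"
    else:
        action = "suggest_negative"
    return Routing(patient_id, probability, action)

for args in [("p1", 0.92), ("p2", 0.50), ("p3", 0.05), ("p4", 0.05, True)]:
    print(route(*args))
```

The thresholds here are placeholders; in practice they would be set per use case and revisited as monitoring data accumulates.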

In Conclusion

Addressing AI bias in healthcare is no longer optional; it is essential for the future of healthcare delivery. By adopting best practices and leveraging solutions like MOR Software, your business can ensure fairness, improve patient outcomes, and mitigate risks. Start taking proactive steps today to make your AI systems more equitable and reliable. Contact MOR Software now to transform your healthcare AI strategy.
