TOP 12 Popular Machine Learning Algorithms Explained in 2026

Have you ever wondered how Netflix seems to know exactly which movie you’ll enjoy, or how banking apps detect unusual transactions almost instantly? It all comes down to Machine Learning Algorithms: the techniques that allow computers to learn from data, identify patterns, and continuously improve their performance over time. This article from MOR Software will help you understand the role, categories, and real-world applications of the most popular Machine Learning Algorithms today.

What are Machine Learning Algorithms?

Machine learning algorithms are methods that enable computers to learn from data without being explicitly programmed. They form the foundation of ML, where systems improve performance through experience. Each machine learning algorithm follows a unique approach to identify patterns and relationships in data.

These algorithms build machine learning models that predict outcomes and adapt as more data becomes available. Understanding ML algorithms is essential for selecting the right solution and reducing errors. Today, machine learning algorithms power systems in finance, healthcare, e-commerce, and artificial intelligence applications.

Why is it important to understand different machine learning algorithms?

As an ML engineer, having a solid understanding of different machine learning algorithm categories is essential. Below are key reasons explaining why it is important to understand different machine learning algorithms.

Choosing the right algorithm for the right problem

Not all algorithms work well for every problem. For instance, classification tasks are best handled by supervised machine learning algorithms like SVM or Logistic Regression. A solid grasp of types of machine learning algorithms helps engineers quickly select the best model, reduce trial-and-error, and improve performance. This is crucial for building practical ML systems.

Controlling risks in machine learning models

Each machine learning algorithm has its strengths and risks when misapplied. For example, Decision Trees are prone to overfitting without depth control, while KNN performs poorly on high-dimensional or unevenly distributed data.

Some algorithms are highly sensitive to noise or require well-normalized features to function correctly. Understanding how different ML algorithms operate allows engineers to anticipate and mitigate errors early in training or deployment.

Optimizing model performance and computational cost

Not every machine learning algorithm is resource-efficient. According to the LightGBM paper (NIPS 2017), histogram-based LightGBM can speed up training by over 20× compared with conventional GBDT in the best reported cases, while achieving almost the same accuracy.

Another study shows GPU‑accelerated GBDT is 7–8× faster than CPU GBDT, and 25× faster than exact-split XGBoost in certain cases. Meanwhile, models like Logistic Regression are much lighter, typically training in seconds to minutes. Understanding how each ML algorithm uses CPU/GPU and memory enables engineers to balance accuracy, speed, and deployment cost more effectively.

Better interpretation and explainability of results

Engineers need to understand not only how a machine learning algorithm works, but also how to explain its results clearly to non-technical stakeholders. When they grasp how each ML algorithm operates, they can translate complex predictions into simple, intuitive explanations, without relying on academic jargon that only other engineers might understand.

For example, a Decision Tree allows you to visualize the prediction path, making it easier for clients to see how a decision was made. In contrast, using a complex model like a Neural Network without truly understanding it can make it hard to explain outputs in a meaningful way to a product team or business client. That’s why mastering the basic machine learning algorithms is also key to delivering insights that people understand and trust.

Flexible application across multiple domains

Understanding different machine learning algorithms allows engineers to apply the right model to the right domain. For example, in finance, interpretable ML algorithms like Logistic Regression are often preferred for risk control.

In e-commerce, models like KNN or Random Forest perform well in analyzing user behavior. For image-related tasks, Neural Networks are the go-to choice. Mastering the various machine learning algorithm categories enables engineers to move beyond a one-size-fits-all approach and build solutions tailored to each use case.

Top 12 Popular Machine Learning Algorithms To Know in 2026

In the field of Machine Learning, each algorithm has different approaches and applications, suited to various data types and specific analytical goals. Below are the 12 most popular algorithms, widely applied across many industries.

Linear Regression

Linear Regression is one of the most common basic machine learning algorithms used to predict continuous values based on one or more input variables. It establishes a linear relationship between the independent variable(s) and the dependent variable by fitting the best line.

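The linear regression equation is: Y = aX + b, where X is the input variable, Y is the predicted value, a is the slope of the line, and b is the intercept.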

Real-world example:

Imagine a student asked to arrange classmates by increasing weight without knowing their actual weights. The student might use height and build as visible clues to estimate and order them.
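To make the equation concrete, here is a minimal sketch of fitting a and b with ordinary least squares for a single input variable. The function name `fit_line` and the height/weight numbers are made up for illustration; they are not from any real dataset.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input variable: returns (a, b) in Y = aX + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    # The fitted line always passes through the point (mean_x, mean_y)
    b = mean_y - a * mean_x
    return a, b


# Hypothetical data: heights (cm) and weights (kg) that lie exactly on a line
heights = [150, 160, 170, 180]
weights = [50, 57, 64, 71]

a, b = fit_line(heights, weights)
predicted = a * 175 + b  # estimated weight for a 175 cm classmate
```

With these made-up numbers the fit recovers a slope of about 0.7 kg per cm. Real data would be noisy, so the line would only approximate the points.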

Logistic Regression

Logistic Regression is one of the basic machine learning algorithms used to solve binary classification problems. The algorithm predicts the probability that an instance belongs to a class by applying the sigmoid function to a linear combination of input variables.

Specifically, the model's output is a value between 0 and 1, representing the probability of belonging to class "1." If this probability exceeds a threshold (e.g., 0.5), the model classifies the instance into that class.

Real-world example:

To predict whether a customer will buy a product, Logistic Regression can use features such as age, income, and shopping habits.
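The prediction step described above can be sketched in a few lines. The weights and bias below are hand-picked assumptions rather than values learned from data, and the helper names are hypothetical.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Probability of class 1: sigmoid of a linear combination of the inputs."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def classify(features, weights, bias, threshold=0.5):
    """Assign class 1 when the probability exceeds the threshold, else class 0."""
    return 1 if predict_proba(features, weights, bias) > threshold else 0


# Hypothetical, hand-picked parameters (a trained model would learn these)
weights = [0.8, -0.5]
bias = 0.1

likely_buyer = classify([2.0, 1.0], weights, bias)    # z = 1.2, probability above 0.5
unlikely_buyer = classify([0.0, 2.0], weights, bias)  # z = -0.9, probability below 0.5
```

Raising the threshold above 0.5 makes the model more conservative about predicting class 1, which can be useful when false positives are costly.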

Decision Tree

Decision Tree is one of the popular machine learning algorithms used for classification and regression tasks. This algorithm builds a model in the form of a tree, where each node represents a decision rule based on input features.

The advantage of Decision Trees is their interpretability and easy visualization, making it simpler to explain results. Understanding Decision Trees helps engineers select the right algorithm among many machine learning algorithms when model explainability is important.

Real-world example: In healthcare, Decision Trees can classify patients based on features like age, blood pressure, and symptoms to suggest appropriate treatments.
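As a rough illustration of how a single decision rule might be chosen, the sketch below searches one numeric feature for the threshold with the lowest weighted Gini impurity. Gini is one common splitting criterion, used here as an assumption; the blood pressure values and labels are invented.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_threshold(values, labels):
    """Return (threshold, impurity) for the split 'value <= threshold' with lowest weighted Gini."""
    best = (None, float("inf"))
    for t in sorted(set(values)):
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        if not left or not right:
            continue  # a split must put data on both sides
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical data: systolic blood pressure and a risk label
pressure = [110, 120, 125, 140, 150, 160]
risk = ["low", "low", "low", "high", "high", "high"]

threshold, impurity = best_threshold(pressure, risk)
```

A full tree repeats this search recursively on each side of the split, across all features, until a stopping rule such as a maximum depth is reached.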

Random Forest

Random Forest is a powerful machine learning algorithm in the ensemble methods group, working by combining multiple decision trees to make more accurate predictions. Thanks to bootstrap aggregating (bagging) and random feature selection at each split, this model reduces overfitting and improves accuracy on large datasets.

Real-world example: Random Forest is widely used in the financial sector to predict credit risk, helping banks determine the likelihood of a customer defaulting on a loan.
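The bagging idea can be sketched as follows. This is a deliberately simplified illustration, not a full Random Forest: each "tree" is a one-split toy learner (`train_stump`, a hypothetical helper), and the random feature selection that a real Random Forest performs at each split is omitted.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement (the 'bootstrap' in bagging)."""
    return [rng.choice(rows) for _ in rows]


def train_stump(rows):
    """Toy base learner: threshold halfway between the two class means of x."""
    xs0 = [x for x, y in rows if y == 0]
    xs1 = [x for x, y in rows if y == 1]
    if not xs0 or not xs1:
        # Degenerate sample containing one class: always predict that class
        only = 0 if xs0 else 1
        return lambda x: only
    mean0, mean1 = sum(xs0) / len(xs0), sum(xs1) / len(xs1)
    threshold = (mean0 + mean1) / 2
    high_class = 1 if mean1 > mean0 else 0
    return lambda x: high_class if x > threshold else 1 - high_class


def bagged_predict(models, x):
    """Aggregate: every model votes and the majority label wins."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
data = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
models = [train_stump(bootstrap_sample(data, rng)) for _ in range(25)]
```

Because each model sees a slightly different resample of the data, individual mistakes tend to be outvoted, which is the intuition behind the reduced overfitting.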

Support Vector Machine (SVM)

SVM is a machine learning algorithm used for classification and regression, working by finding the optimal hyperplane to separate data into distinct groups. With the kernel trick, SVM efficiently handles non-linear data, improving model accuracy.

Real-world example: SVM is widely applied in handwritten character recognition, enabling systems to classify handwritten letters into specific categories, supporting Optical Character Recognition (OCR) technology.
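The kernel trick is easier to see with a small worked example. The sketch below uses a degree-2 polynomial kernel (chosen here purely for illustration) and shows that it gives the same value as a dot product in an explicitly expanded feature space, without ever building that space. It demonstrates only the kernel computation, not SVM training.

```python
import math


def poly_kernel(x, y):
    """Degree-2 polynomial kernel (x . y) ** 2, computed in the original 2-D space."""
    return (x[0] * y[0] + x[1] * y[1]) ** 2


def feature_map(x):
    """Explicit 3-D mapping whose dot product equals poly_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


x, y = (1.0, 2.0), (3.0, 4.0)
kernel_value = poly_kernel(x, y)                       # no explicit mapping needed
explicit_value = dot(feature_map(x), feature_map(y))   # same number, more work
```

Both values agree up to floating-point rounding. For kernels such as the RBF kernel, the expanded space is infinite-dimensional, so the shortcut is the only practical option.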

K-Nearest Neighbors (KNN)

Based on the nearest neighbor principle, KNN is a simple yet effective algorithm that identifies the closest data points in a multi-dimensional space. When classifying a new point, the model examines its k nearest neighbors and assigns a label based on majority voting. Due to its intuitive nature, KNN is widely used in pattern recognition and recommendation systems.

Real-world example: KNN plays a crucial role in movie recommendation systems, where the algorithm compares a user’s preferences with those who have similar tastes to suggest relevant content.
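A minimal sketch of the majority-voting step, assuming Euclidean distance and a tiny made-up dataset of two-dimensional "taste" points labeled with a preferred genre:

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """train: list of (point, label). Returns the majority label among the k nearest points."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    top_labels = [label for _, label in by_distance[:k]]
    return Counter(top_labels).most_common(1)[0][0]


# Hypothetical users described by two preference scores
train = [
    ((1, 1), "drama"), ((1, 2), "drama"), ((2, 1), "drama"),
    ((8, 8), "action"), ((8, 9), "action"), ((9, 8), "action"),
]

genre_a = knn_predict(train, (2, 2))
genre_b = knn_predict(train, (7, 9))
```

Note that KNN does no training up front. All the work happens at prediction time, which is one reason it slows down on large datasets.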

Naive Bayes

Naive Bayes relies on Bayes' theorem to calculate the probability that an instance belongs to a class based on existing data. Its key assumption is that features within the dataset are independent of one another given the class, making computations simpler while often maintaining strong performance.

Real-world example: Naive Bayes is widely applied in spam email filtering, where the algorithm analyzes email content and predicts whether it’s spam based on the probability of certain keywords appearing.
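Here is a small sketch of a word-count Naive Bayes spam filter. It assumes add-one (Laplace) smoothing and works in log space to avoid multiplying many tiny probabilities; the four training messages are invented.

```python
import math
from collections import Counter


def train_nb(docs):
    """docs: list of (list_of_words, label). Returns the counts needed for prediction."""
    class_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in class_counts}
    for words, label in docs:
        word_counts[label].update(words)
    vocab = {w for words, _ in docs for w in words}
    return class_counts, word_counts, vocab


def predict_nb(model, words):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # log P(class) + sum of log P(word | class), with add-one smoothing
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for w in words:
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical training messages
docs = [
    (["win", "free", "prize"], "spam"),
    (["free", "offer", "now"], "spam"),
    (["meeting", "at", "noon"], "ham"),
    (["project", "meeting", "notes"], "ham"),
]
model = train_nb(docs)
```

Summing per-word log probabilities is exactly where the independence assumption shows up: each word contributes on its own, regardless of which other words appear.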

K-Means Clustering

K-Means Clustering partitions data into k groups by repeatedly assigning each point to its nearest cluster center and then moving each center to the mean of its assigned points. Once data is separated into distinct clusters, the model can uncover patterns and trends that were previously unknown.

Real-world example: In customer analytics, K-Means helps segment consumers based on shopping behaviors, allowing businesses to personalize marketing strategies.
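The assign-then-update loop can be sketched as below. Starting centers are passed in explicitly to keep the example deterministic; real implementations usually pick them randomly or with a smarter seeding scheme. The data points are invented.

```python
import math


def kmeans(points, centers, iterations=10):
    """Alternate assignment and center-update steps until the centers stop moving."""
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest center
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster
        new_centers = []
        for center, cluster in zip(centers, clusters):
            if cluster:
                new_centers.append(tuple(sum(c) / len(cluster) for c in zip(*cluster)))
            else:
                new_centers.append(center)  # leave an empty cluster's center in place
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return centers, clusters


# Hypothetical customers described by two behavior scores
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centers, clusters = kmeans(points, centers=[(1, 1), (9, 8)])
```

On this toy data the two groups are well separated, so the loop settles after the second pass. Results on real data can depend heavily on the starting centers.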

Gradient Boosting Machines

Gradient Boosting Machines (GBM) employ a sequential learning approach, where each new model focuses on correcting mistakes made by earlier iterations. By continuously adjusting predictions, GBM progressively improves accuracy and overall performance.

Real-world example: In financial risk assessment, GBM helps institutions evaluate the probability of loan default, providing valuable insights for better decision-making.
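To show what "correcting earlier mistakes" can look like in practice, the sketch below boosts one-split regressors (stumps) on the residuals of the current prediction. It assumes squared-error loss, where the negative gradient is simply the residual, and uses invented one-dimensional data. Production libraries add many refinements that are not shown here.

```python
def fit_stump(xs, residuals):
    """Best single-split regressor on one feature (minimizes squared error)."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the largest value so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lmean, rmean = sum(left) / len(left), sum(right) / len(right)
        error = sum((r - lmean) ** 2 for r in left) + sum((r - rmean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, t, lmean, rmean)
    _, t, lmean, rmean = best
    return lambda x: lmean if x <= t else rmean


def gradient_boost(xs, ys, rounds=50, learning_rate=0.1):
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []
    preds = [base] * len(xs)
    for _ in range(rounds):
        # For squared error, the negative gradient is the residual
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data with a jump between x = 3 and x = 4
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]

model_few = gradient_boost(xs, ys, rounds=1)
model_many = gradient_boost(xs, ys, rounds=50)
```

Comparing `mse(model_few, xs, ys)` with `mse(model_many, xs, ys)` shows the training error shrinking as more rounds are added. The small learning rate keeps each correction modest, which is a common guard against overfitting.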

Neural Networks

Artificial neural networks are designed to mimic the way the human brain processes information, utilizing interconnected layers that adapt and learn from data. By adjusting weights through training, these models refine predictions and enhance performance.

Real-world example: Neural Networks serve as a foundation for facial recognition systems, allowing AI to accurately identify individuals in photos or videos.
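The idea of interconnected layers can be illustrated with a tiny forward pass. The weights below are set by hand so that the network computes XOR; nothing is trained here, and in a real network these values would be learned from data.

```python
def relu(z):
    """Common activation function: pass positive values, clamp negatives to zero."""
    return max(0.0, z)


def forward(x1, x2):
    """Two-layer network with hand-set (not trained) weights that computes XOR."""
    # Hidden layer: two neurons, each a weighted sum passed through ReLU
    h1 = relu(1.0 * x1 + 1.0 * x2)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: weighted sum of the hidden activations
    return 1.0 * h1 - 2.0 * h2


outputs = {(a, b): forward(a, b) for a in (0, 1) for b in (0, 1)}
```

XOR cannot be computed by a single linear unit, so even this toy case shows why stacking layers with a non-linear activation adds expressive power.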

XGBoost

XGBoost is an advanced machine learning algorithm that enhances both speed and accuracy by leveraging optimization techniques such as parallel processing and regularization. These improvements make it exceptionally well-suited for handling complex and large-scale datasets efficiently.

Real-world example: XGBoost is widely adopted in financial forecasting, where it helps institutions predict trends in stock prices or assess credit risk with remarkable precision.

Principal Component Analysis

PCA is a dimensionality reduction technique that transforms high-dimensional data into a smaller set of principal components while preserving essential information. By eliminating redundancy and emphasizing the most significant features, PCA helps streamline data analysis and visualization.

Real-world example: In image compression, PCA is used to reduce the number of features in an image, allowing storage and processing to be more efficient while maintaining visual quality.
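As a rough sketch, the first principal component can be estimated with power iteration on the covariance matrix. This is one of several ways to compute it (libraries typically use SVD), chosen here because it needs only the standard library. The data is invented and lies exactly on the line y = 2x, so one component captures everything.

```python
import math


def first_principal_component(rows, iterations=100):
    """Estimate the top principal component via power iteration on the covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d  # arbitrary starting direction
    for _ in range(iterations):
        # Multiply by the covariance matrix, then renormalize to unit length
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def project(row, means, component):
    """Reduce a row to a single coordinate along the component."""
    return sum((x - m) * c for x, m, c in zip(row, means, component))


# Hypothetical 2-D data lying on the line y = 2x
rows = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
means, pc1 = first_principal_component(rows)
scores = [project(r, means, pc1) for r in rows]
```

Each two-dimensional row is now summarized by a single score, which is the kind of reduction PCA performs at larger scale.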

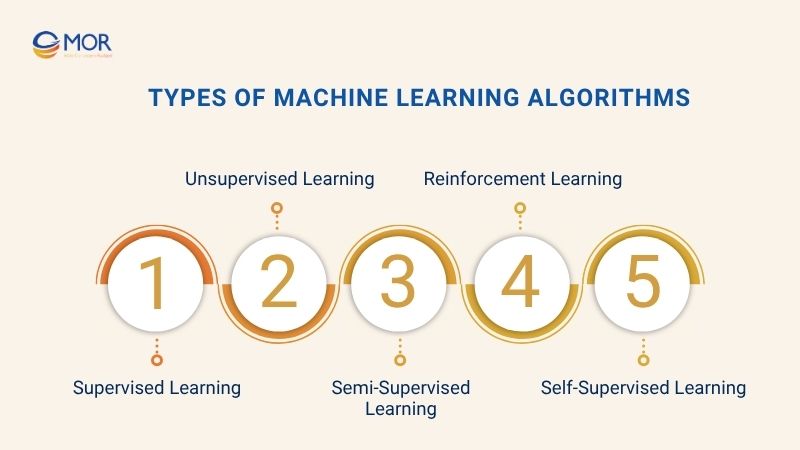

Types of machine learning algorithms

Understanding the different machine learning algorithm categories helps engineers choose the right approach, avoiding wasted resources and excessive training time. Below are the five most common algorithm types, each suited to specific data structures and application scenarios.

Supervised Learning

Supervised machine learning algorithms learn from labeled data. Each training sample includes a pair of input and corresponding output values, allowing the model to learn the mapping between them. One key advantage of this method is the ability to measure performance using metrics such as accuracy, AUC, or mean squared error (MSE).

Examples: Linear Regression (used for price prediction), Logistic Regression (binary classification), and Random Forest (for complex tasks with high accuracy).

Unsupervised Learning

Unsupervised machine learning algorithms learn from unlabeled data. Unlike supervised learning, there are no predefined outputs. The model identifies patterns, groupings, or structures that naturally exist in the data without guidance.

One key advantage of this method is the ability to uncover hidden relationships or segmentations, especially when labeled data is unavailable or expensive to obtain.

Examples: K-Means Clustering (for customer segmentation), DBSCAN (for anomaly detection), and Principal Component Analysis (for dimensionality reduction and visualization).

Semi-Supervised Learning

Semi-supervised machine learning algorithms combine both labeled and unlabeled data to train models. In many real-world cases, only a small portion of data is labeled due to high costs or time constraints, while the majority remains unlabeled.

The main advantage of this approach is its ability to optimize learning efficiency without requiring all data to be labeled, reducing labeling costs while often achieving higher accuracy than training on the small labeled subset alone.

Examples: Semi-Supervised SVM, Label Propagation, and Ladder Networks (commonly used in deep learning with limited labeled data).

Reinforcement Learning

Reinforcement learning algorithms learn by interacting with an environment and receiving feedback in the form of rewards or penalties. Unlike supervised learning, they do not rely on labeled input-output pairs but instead learn optimal actions through trial and error.

A key advantage of reinforcement learning is its ability to solve complex sequential decision-making problems, especially where the outcome depends on a series of actions rather than a single prediction.

Examples: Q-Learning, Deep Q-Networks (DQN), and Policy Gradient methods, widely used in robotics, game playing, and autonomous systems.
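To give a feel for learning from rewards, here is a minimal sketch of the tabular Q-Learning update rule on an invented three-state corridor. It replays two fixed transitions instead of exploring, so it illustrates only how reward information propagates backward, not a complete agent.

```python
def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """One tabular Q-Learning step: move Q(s, a) toward reward + gamma * max Q(s', .)."""
    best_next = max(q[next_state].values())
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


# Hypothetical corridor: states 0, 1, 2, where state 2 is the goal
q = {s: {"left": 0.0, "right": 0.0} for s in range(3)}

for _ in range(20):
    q_update(q, 1, "right", reward=1.0, next_state=2)  # reaching the goal pays 1
    q_update(q, 0, "right", reward=0.0, next_state=1)  # the earlier step pays nothing directly
```

Even though moving right from state 0 earns no immediate reward, its value climbs toward the discounted value of the next state. That is how the agent learns that a series of actions, not just the last one, leads to the payoff.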

Self-Supervised Learning

Self-supervised machine learning algorithms learn from unlabeled data by creating artificial labels from the data itself. This approach allows models to learn useful representations without requiring manual labeling.

A major advantage is that self-supervised learning leverages vast amounts of unlabeled data to improve model performance, especially in domains where labeled data is scarce or expensive.

Examples: Contrastive Learning, Autoencoders, and Masked Language Models like BERT.

The Main Components of a Machine Learning Algorithm

A machine learning algorithm operates based on three key components: decision process, error function, and model optimization process. Each plays a crucial role in enabling the model to learn from data and make accurate predictions.

- Decision Process: This is the first step in any machine learning algorithm. When receiving input data, the model analyzes it and tries to make a prediction. Example: A supervised machine learning algorithm can examine features like age, occupation, and salary to predict whether a person qualifies for a loan.

- Error Function: The error function measures how accurate the predictions are. It calculates the difference between actual values and the model’s predictions. Example: In a clustering machine learning algorithm, if the model incorrectly groups customers, the error function identifies the inaccuracies and helps adjust the clustering to improve segmentation.

- Model Optimization Process: Once errors are identified, the algorithm works to minimize them by adjusting internal parameters. This process improves the model’s efficiency over time. Example: A neural network, one of the most popular machine learning algorithms, updates the weights of its connections to enhance accuracy as it learns from new data.
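The three components above can be seen working together in a short sketch. It assumes a one-variable linear model, mean squared error as the error function, and plain gradient descent as the optimizer; the data and learning rate are invented for illustration.

```python
def predict(x, w, b):
    """Decision process: turn an input into a prediction."""
    return w * x + b


def mean_squared_error(xs, ys, w, b):
    """Error function: average squared gap between predictions and actual values."""
    return sum((predict(x, w, b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_step(xs, ys, w, b, lr=0.05):
    """Optimization: nudge the parameters in the direction that lowers the error."""
    n = len(xs)
    grad_w = sum(2 * (predict(x, w, b) - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (predict(x, w, b) - y) for x, y in zip(xs, ys)) / n
    return w - lr * grad_w, b - lr * grad_b


# Hypothetical data generated by y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0
initial_error = mean_squared_error(xs, ys, w, b)
for _ in range(2000):
    w, b = gradient_step(xs, ys, w, b)
final_error = mean_squared_error(xs, ys, w, b)
```

After enough steps, w and b settle close to 2 and 1, and the error drops toward zero. Different algorithms swap in different versions of each component, but the predict, measure, adjust cycle stays the same.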

Conclusion

Mastering different Machine Learning Algorithms is more than just an academic exercise; it’s the key to building accurate, efficient, and explainable machine learning systems that solve real-world problems. As you continue your journey in Machine Learning, take the time to explore how each algorithm works, where it shines, and when it falls short. Ready to take the next step? Contact us now and dive deeper into hands-on projects, experiment with real datasets, and bring these algorithms to life in your own ML applications!

MOR SOFTWARE

Frequently Asked Questions (FAQs)

What is the difference between artificial intelligence and machine learning algorithms?

Artificial Intelligence is the broader concept of machines simulating human intelligence. Machine Learning Algorithms are specific methods that allow machines to learn from data and improve automatically.

What is the most used machine learning algorithm?

Linear Regression, Logistic Regression, and Decision Trees are among the most widely used due to their simplicity and effectiveness across common tasks.

What are the main types of machine learning algorithms?

The main types are Supervised, Unsupervised, Semi-Supervised, Reinforcement, and Self-Supervised Learning.

Can I learn machine learning algorithms without coding?

Yes, you can understand the concepts without coding, but to apply ML algorithms effectively, learning basic coding (e.g., Python) is highly recommended.
