TOP 6 Information Sets Used in Machine Learning You Should Know

How can machines truly “understand” the real world? The answer lies in information sets used in machine learning: the essential data that fuels model training, performance tuning, and accurate predictions. In this article, MOR Software will help you discover how to find and select high-quality machine learning datasets to power your AI projects. Let’s start!

What are information sets used in machine learning?

In the context of machine learning, information sets are the datasets used throughout the process of training, tuning, and evaluating models. These datasets can come in various forms, such as structured tables, images, natural language text, or sensor data.

By processing and analyzing these information sets, a machine learning model can learn patterns and develop its predictive capabilities. Information sets used in machine learning and predictive analytics play a role in every stage of the ML pipeline.

- Training phase: Information sets provide the raw data that allows models to learn patterns and relationships between inputs and outputs. This forms the foundation for algorithms like neural networks, decision trees, and others to “understand” the problem.

- Tuning phase: A subset of the data (the validation set) is used to optimize hyperparameters and avoid overfitting.

- Evaluation phase: The model is tested on a separate test set, an independent information set it hasn't seen before, to assess its accuracy and ability to generalize.

- Deployment phase: Models may continue to be monitored using real-world datasets, which help evaluate long-term performance and adaptability to changing conditions.
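
The training, validation, and test sets described above are usually carved out of one labeled dataset. The following is a minimal sketch of such a split using only the Python standard library. The function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are illustrative assumptions, not values prescribed by this article.

```python
import random


def split_dataset(samples, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle samples and split them into (train, validation, test) lists.

    The fractions and seed are illustrative defaults, not recommendations.
    """
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")

    shuffled = list(samples)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle keeps the split reproducible

    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)

    test = shuffled[:n_test]                 # held back for the final evaluation
    val = shuffled[n_test:n_test + n_val]    # used for hyperparameter tuning
    train = shuffled[n_test + n_val:]        # everything else is used for training
    return train, val, test


train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))
```

Shuffling before splitting avoids accidental ordering effects, for example when the source file is sorted by label. For time-ordered data such as sensor readings, a chronological split is often a better fit than a random one.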

Why Are Datasets Essential in Machine Learning?

Data is the foundation of every machine learning model. Without high-quality datasets, a model can hardly learn effectively or make accurate predictions. Below are some key reasons why datasets are essential in machine learning:

Datasets Are the Foundation for Model Learning

During the training phase, machine learning models require information sets to learn the relationship between input data and expected output. These datasets act like "textbooks," gradually enabling the model to build knowledge. Without appropriate data, a machine learning algorithm cannot generalize or make any meaningful predictions.

In digit classification, for example, the MNIST dataset, a well-known benchmark, provides 70,000 images of handwritten digits from 0 to 9 (60,000 for training and 10,000 for testing).

- Input: Digit images (as pixel matrices)

- Output: Correct labels (e.g., an image of "3" is labeled as "3")

The model learns from this dataset to identify the relationship between pixel patterns and the corresponding digit. Without access to datasets like MNIST, the model would have no reference for recognizing what the digit “3” looks like, making an accurate prediction impossible.
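
To make the input/output pairing concrete, here is a toy sketch in Python. The 3×3 "images" and the `predict` helper are invented for illustration (real MNIST images are 28×28 grayscale). The 1-nearest-neighbour rule stands in for a real model only because it is short. It is not how MNIST classifiers are normally built.

```python
# Each training sample is a (pixel_matrix, label) pair, mirroring the
# "Input" and "Output" bullets above. These tiny grids are made up.
TRAIN = [
    ([[0, 1, 0], [0, 1, 0], [0, 1, 0]], 1),
    ([[0, 1, 0], [0, 1, 0], [0, 1, 1]], 1),
    ([[1, 1, 1], [1, 0, 1], [1, 1, 1]], 0),
    ([[1, 1, 1], [1, 0, 1], [1, 1, 0]], 0),
]


def flatten(image):
    """Turn a pixel matrix into a flat feature vector."""
    return [pixel for row in image for pixel in row]


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(image, train=TRAIN):
    """Return the label of the training image closest to `image` (1-NN)."""
    query = flatten(image)
    _, label = min(train, key=lambda s: squared_distance(flatten(s[0]), query))
    return label


# A slightly degraded "1" that is not in the training set.
print(predict([[0, 1, 0], [0, 1, 0], [0, 0, 0]]))
```

Without the labeled pairs in `TRAIN`, `predict` has nothing to compare against, which is the point the paragraph above makes about MNIST.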

Training Data Determines Model Quality

A strong machine learning model cannot be built on poor-quality data. Choosing the right information sets used in machine learning has a direct impact on the model’s real-world applicability. According to a Gartner survey, poor data quality costs organizations an average of $15 million per year, as it negatively affects decision-making and business outcomes.

The saying "garbage in, garbage out" holds especially true in AI and ML. Inaccurate datasets can ruin model performance and limit the effectiveness of even the most advanced algorithms. The more diverse, clean, and well-labeled the data, the more effective and trustworthy the model will be.

Datasets Help Models Reflect the Real World

The ultimate goal of machine learning is to solve real-world problems. To achieve this, models need to be trained on information sets that reflect the actual environments in which they will operate. Real-world data allows the model to learn practical patterns and constraints, ultimately improving its performance in real-life scenarios beyond the lab.

In air pollution forecasting, for example, machine learning datasets are often collected from real-time IoT sensor networks installed in urban monitoring stations.

Sources of real-world data

- Environmental sensors that measure fine dust (PM2.5, PM10), NO₂, CO₂, humidity, and temperature.

- Historical data from open government portals or platforms such as OpenAQ.

Applications

- Predicting pollution levels over the next 24–48 hours.

- Issuing early health warnings to communities at risk.

If the model is trained only on theoretical or artificial datasets, it may miss key patterns found in real environments. As a result, its predictions could become less accurate in practice. In critical areas like public health, this can lead to serious consequences.

Datasets Are Tools for Model Validation and Improvement

Information sets are used not only for training. They also play a vital role in testing and fine-tuning models after deployment. Validation, test, and real-world datasets help check performance and reveal whether the model needs adjustment. This is a crucial part of the lifecycle of any machine learning system.

Types of Information Sets in Machine Learning

In the process of training a machine learning model, not all datasets are used in the same way. Each type of information set serves a distinct and important purpose. This section will help you clearly understand the different types of datasets in machine learning:

Classification by Function in Machine Learning

| Criteria | Training Dataset | Validation Dataset | Test Dataset | Holdout Dataset |
| --- | --- | --- | --- | --- |
| Primary Purpose | Train the model to learn patterns and relationships | Tune hyperparameters and optimize performance | Evaluate the final model's accuracy after training | Independently assess performance after deployment or model updates |
| Key Characteristics | Labeled, reused multiple times, often shuffled | Labeled, not used to update model weights | Labeled, never used during training or tuning | Completely separate, reflects unseen or future data |
| Stage of Use | During the training phase | During training (in parallel with the training set) | After training is complete | After deployment or when the model is updated |
| Impact on Model | High | Moderate | High | High |
| Representation of Real-World Data | Medium - may be preprocessed or selectively sampled | Fair - mimics unseen data | High - simulates actual user data | Very High - resembles deployment environment or future scenarios |
| Usage Frequency | Frequently used throughout the training process | Reused for each tuning cycle | Used once for final evaluation | Used once (or once per model update) |

Classification by Data Characteristics

| Criteria | Structured Data | Unstructured Data | Semi-structured Data |
| --- | --- | --- | --- |
| Definition | Data organized in rows and columns (e.g., tables) | Data without a predefined format or structure | Data with partial structure (e.g., key-value pairs) |
| Common Formats | CSV, SQL databases, Excel sheets | Text documents, images, audio, and video | JSON, XML, HTML |
| Ease of Processing | High | Low | Medium |
| Use Cases in ML | Regression, classification, forecasting | Sentiment analysis, image recognition, speech processing | Web data mining, metadata analysis, log data processing |
| Examples | Customer data, sales records, sensor logs | News articles, tweets, photographs, audio recordings | JSON APIs, email headers, and system logs |
| ML Techniques Commonly Used | Decision trees, linear regression, SVMs | CNNs, RNNs, transformers (depending on type: image/text/audio) | Hybrid approaches, parsing + traditional ML/NLP |

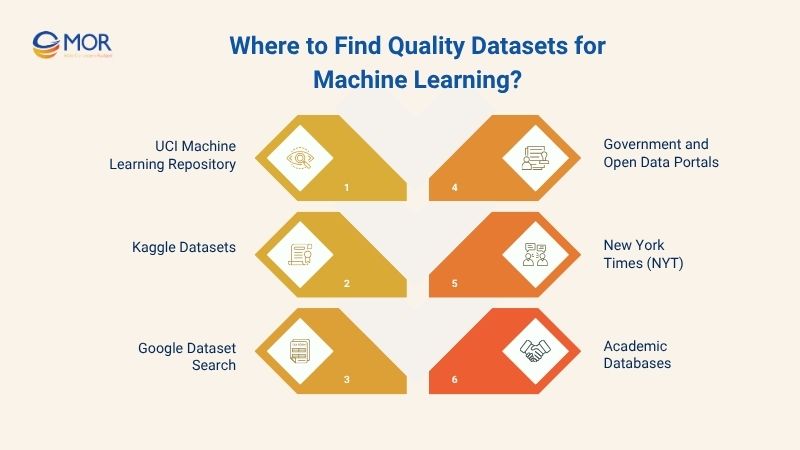
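
The difference in "ease of processing" between structured and semi-structured data shows up as soon as you load it. The sketch below uses Python's standard `csv` and `json` modules. The sample records, field names, and helper functions are hypothetical and exist only to illustrate the contrast.

```python
import csv
import io
import json

# Hypothetical sample data: a CSV table and a JSON document describing customers.
CSV_TEXT = "customer_id,age,monthly_spend\n1,34,120.5\n2,51,80.0\n"
JSON_TEXT = (
    '[{"id": 1, "profile": {"age": 34}, "tags": ["new"]},'
    ' {"id": 2, "profile": {}, "tags": []}]'
)


def load_structured(text):
    """Structured data: every row has the same columns, so parsing is direct."""
    return [
        {
            "customer_id": int(row["customer_id"]),
            "age": int(row["age"]),
            "monthly_spend": float(row["monthly_spend"]),
        }
        for row in csv.DictReader(io.StringIO(text))
    ]


def load_semi_structured(text):
    """Semi-structured data: fields may be nested or absent, so flatten with defaults."""
    rows = []
    for record in json.loads(text):
        rows.append(
            {
                "id": record["id"],
                "age": record.get("profile", {}).get("age"),  # None when missing
                "n_tags": len(record.get("tags", [])),
            }
        )
    return rows


print(load_structured(CSV_TEXT))
print(load_semi_structured(JSON_TEXT))
```

Unstructured data such as images or free text has no comparable shortcut. It typically needs a feature-extraction or embedding step before a model can use it.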

Where to Find Quality Datasets for Machine Learning?

Finding the right, high-quality dataset is the first step toward building an effective machine learning model. Depending on your goal and domain, there are various sources you can explore. Below are trusted platforms and dataset repositories suitable for real-world machine learning projects.

UCI Machine Learning Repository

The UCI Machine Learning Repository is a long-standing, publicly available collection of datasets maintained by the University of California, Irvine. It serves as one of the most trusted sources of information sets used in machine learning, widely adopted for education and academic research.

Who uses it?

- University students and instructors

- Academic researchers

- Machine learning beginners

Key advantages:

- No registration is required, making it easy to explore and use.

- Most datasets come with clean formatting and detailed metadata, making them easy to work with.

- Includes data from domains such as healthcare, finance, image recognition, natural language, and more.

Kaggle Datasets

Kaggle is an open data-sharing platform owned by Google. It serves as a vibrant online community where users can upload, explore, and utilize thousands of datasets. These information sets used in machine learning span a wide range of topics and are frequently used in data science projects, predictive analytics, and ML competitions.

Who uses it?

- Data engineers, data scientists

- AI developers

- Intermediate to advanced learners

Key advantages:

- Offers a wide variety of datasets across different domains.

- Most datasets come with detailed descriptions, sample files, and sometimes starter notebooks.

- Supports cloud-based analysis via Kaggle Notebooks (formerly Kaggle Kernels) without needing local downloads.

Google Dataset Search

Google Dataset Search is a specialized search engine developed by Google. It helps users discover publicly available datasets across the web. It functions similarly to Google Search but focuses entirely on locating open-access data sources relevant to machine learning and analytics.

Who uses it?

- Researchers seeking specialized or rare datasets

- Data scientists

- AI professionals and social science analysts

Key advantages:

- Enables access to a wide range of hard-to-find datasets

- Seamlessly integrates with tools like Google Scholar and Data Commons.

- Extremely helpful for comparing or aggregating information across multiple data repositories.

Government and Open Data Portals

These are platforms maintained by governments or public institutions to provide open datasets for research, development, and transparency. The machine learning datasets from these sources are often high-quality, real-world data collected systematically and updated regularly.

Who uses it?

- Researchers and ML professionals seeking reliable, official datasets

- Businesses applying predictive analytics across sectors

- Educators and students in data science or information technology fields

Key advantages:

- Trusted data with verified sources and quality control

- Covers a wide range of topics: population, public health, environment, education, finance, and more

- Many datasets come in structured formats, ready for analysis

- Freely accessible, making it ideal for academic and applied projects

New York Times (NYT)

NYT datasets used in machine learning typically include news articles, headlines, comments, and metadata from The New York Times, supporting a wide range of NLP tasks.

Who uses it?

- AI and NLP researchers

- Media analytics firms and social trend analysts

- Students and developers building ML projects using real-world text data

Key advantages:

- Offers timely, diverse language data for training NLP models (e.g., sentiment analysis, fake news detection)

- NYT datasets reflect real-world events and language variation.

- Data is accessible via the NYT API for automated, large-scale use.

Academic Databases

Academic databases such as IEEE Xplore, Springer, and OpenML host peer-reviewed research papers and datasets. These platforms offer high-quality, curated information sets used in machine learning, often created or referenced in academic studies.

Who uses it?

- Machine learning researchers

- University students and faculty

- Developers working on state-of-the-art models

- Data scientists seeking benchmark datasets

Key advantages:

- Provides verified and well-documented datasets with clear context.

- Supports reproducible research and comparison across models.

- Offers access to domain-specific information sets used in machine learning.

What Makes a Good Dataset for Machine Learning?

Not every dataset is fit for machine learning. Some help models learn better, while others lead to poor results. So, what makes a dataset truly useful? This section will walk you through the key qualities of a strong machine learning dataset.

Relevant to the Project

A good dataset in machine learning must be directly relevant to the specific problem the model aims to solve. If the data is not aligned with the task, even the most advanced machine learning algorithms may learn the wrong patterns or fail to learn anything useful.

For instance, a facial recognition model for low-light settings won’t benefit from clear, well-lit portraits. It needs a dataset with dimly lit images, varied angles, and clear labels such as identity or emotion.

Clean and Well-Structured

A high-quality dataset must be clean: free of duplicates, missing entries, and logical inconsistencies. A clear structure also simplifies preprocessing and makes training ML models more efficient.
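
As a rough illustration of what "clean" means in practice, the sketch below drops rows with missing required fields and removes exact duplicates. The `clean_rows` helper and the sample rows are hypothetical. It assumes each row is a flat dictionary with hashable values, and it does not attempt to catch logical inconsistencies, which need domain-specific rules.

```python
def clean_rows(rows, required_fields):
    """Drop rows missing any required field, then drop exact duplicates.

    The first occurrence of each duplicate is kept and input order is preserved.
    """
    seen = set()
    cleaned = []
    for row in rows:
        # Treat None and the empty string as "missing".
        if any(row.get(field) in (None, "") for field in required_fields):
            continue
        key = tuple(sorted(row.items()))  # order-independent fingerprint of the row
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned


raw = [
    {"id": 1, "label": "cat"},
    {"id": 1, "label": "cat"},   # exact duplicate
    {"id": 2, "label": None},    # missing label
    {"id": 3, "label": "dog"},
]
print(clean_rows(raw, required_fields=["label"]))
```

Dropping incomplete rows is the simplest policy. Depending on the project, imputing missing values or sending rows back for relabeling may preserve more data.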

For example, in a 2021 study by MIT CSAIL, researchers found that 6% of labels in the ImageNet validation set were incorrect, affecting about 2,900 images. This led to significant consequences: benchmark rankings shifted for over 30% of top-performing models.

Sufficiently Large

A dataset needs to be large enough for the model to learn complex relationships in the data. If the dataset is too small, the model may simply memorize the training data (overfitting) and fail to generalize to new, unseen inputs.

A research paper on radiomics analysis for brain tumor classification revealed serious overfitting issues when using a small dataset.

- Initial dataset: 109 glioblastoma cases and 58 brain metastasis cases

- Experiment: Researchers created a smaller subset by randomly sampling 50% of the original data

- Method: Conducted 1,000 random train-test splits

Key findings:

- On the reduced dataset, the AUC difference between training and test sets reached 0.092 ± 0.071 (a spread of roughly 7 percentage points of AUC)

- In extreme cases, training AUC was 0.882, but test AUC dropped to 0.667
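
For readers unfamiliar with the metric, AUC can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The sketch below computes it that way and then applies the train-test gap to the extreme case quoted above. The function names and toy scores are illustrative assumptions and are not taken from the cited study.

```python
def auc(labels, scores):
    """Pairwise AUC: share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def generalization_gap(train_auc, test_auc):
    """Positive values mean the model does better on data it has already seen."""
    return train_auc - test_auc


# Toy scores: one positive is ranked below one negative, so 3 of 4 pairs are correct.
print(auc([1, 0, 1, 0], [0.9, 0.8, 0.3, 0.1]))

# The extreme case above: 0.882 on training data versus 0.667 on test data.
print(round(generalization_gap(0.882, 0.667), 3))
```

A gap of about 0.215 in that extreme case is far larger than the average gap of 0.092, which is why results from a single split of a small dataset should be read with caution.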

Ethical and Legally Safe

A dataset must not only be high-quality but also comply with ethical and legal standards. Using data that was collected without proper consent or that contains sensitive information can lead to serious legal risks.

In 2019, developer David Heinemeier Hansson publicly revealed that Apple Card granted him a credit limit 20 times higher than his wife’s, even though they shared finances and she had a higher credit score. The case went viral, prompting the New York State Department of Financial Services to launch an investigation into Goldman Sachs’ credit approval algorithm for potential gender bias.

How to Choose the Best Dataset Source for Your ML Project?

With so many dataset sources available, choosing the right one for your ML project can be challenging. This final section will guide you through key steps to evaluate and select the most suitable dataset source.

Define Your Machine Learning Objective

Start by clarifying the specific problem your model will solve. Are you working on image classification, sentiment analysis, or price prediction? Your objective determines what type of dataset you should be looking for.

Assess Data Completeness and Quality

Check whether the dataset has complete labels, how much data is missing, and whether it contains noise or inconsistencies. Prefer datasets with detailed documentation and community validation.
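
A quick first pass at this assessment can be automated. The following sketch reports the share of missing values per field and the label distribution for a list of records. The `quality_report` helper, its output format, and the sample records are assumptions made for illustration.

```python
from collections import Counter


def quality_report(rows, label_field):
    """Summarize missing-value rates per field and label counts for a dataset."""
    if not rows:
        raise ValueError("cannot assess an empty dataset")

    def is_missing(value):
        return value is None or value == ""

    fields = sorted({field for row in rows for field in row})
    missing_rate = {
        field: sum(1 for row in rows if is_missing(row.get(field))) / len(rows)
        for field in fields
    }
    label_counts = Counter(
        row[label_field] for row in rows if not is_missing(row.get(label_field))
    )
    return {
        "rows": len(rows),
        "missing_rate": missing_rate,
        "label_counts": dict(label_counts),
    }


# Hypothetical sentiment-analysis records with one empty text and one missing label.
sample = [
    {"text": "good", "label": "pos"},
    {"text": "", "label": "neg"},
    {"text": "bad", "label": None},
    {"text": "fine", "label": "pos"},
]
print(quality_report(sample, label_field="label"))
```

High missing rates or a heavily skewed label distribution are not automatic disqualifiers, but they are worth knowing before committing to a source.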

Match the Source to Your Experience Level

If you’re new to machine learning, start with beginner-friendly platforms like Kaggle, UCI ML Repository, or Google Dataset Search.

Prioritize Real-World Relevance

A strong model performs well not only on training data but also in real-world scenarios. Choose sources that provide representative data, such as weather data from NASA, news content from the New York Times, or financial data from Yahoo Finance.

Consider Licensing and Legal Constraints

Using datasets without proper rights or user consent may lead to legal issues. Prioritize datasets released under clear terms, such as Creative Commons licenses, the MIT license, or a public domain dedication.

In Conclusion

Choosing the right information sets used in machine learning is critical to building accurate, reliable, and scalable AI models. Whether you're training a model to recognize images, forecast trends, or analyze text, the quality and relevance of your dataset will directly impact your results. Ready to take the next step? Explore trusted dataset sources, start experimenting with real-world data, and unlock the full potential of machine learning in your projects today. Contact us now!

MOR SOFTWARE

Frequently Asked Questions (FAQs)

What are information sets used in machine learning?

They are datasets that help machine learning models learn patterns, make predictions, and improve over time.

What are the main types of information sets used in machine learning?

Common types include training sets, validation sets, test sets, and holdout sets, each used at different stages of the ML process.

Where can I find real-world datasets for machine learning projects?

You can explore datasets on platforms like Kaggle, UCI Repository, Google Dataset Search, or government open data portals.

What does the crossword clue “information sets used in machine learning” refer to?

It usually refers to datasets, the core data used to train and evaluate machine learning models.
