Deep Reinforcement Learning: How It Works And Future Potential

AI is now a broad and rapidly evolving field, creating new opportunities for businesses to solve complex problems and optimize operations. One standout approach is deep reinforcement learning, which powers intelligent systems that learn from their environment. In this article, MOR Software shares key insights and future trends in deep reinforcement learning to help businesses stay competitive in a changing market.

What Is Deep Reinforcement Learning?

Deep reinforcement learning is a field of artificial intelligence that combines deep learning and reinforcement learning to solve complex problems in dynamic environments. Unlike classical reinforcement learning methods, which often rely on lookup tables or hand-crafted features, deep reinforcement learning uses deep neural networks to represent policies or value functions.

For example, consider Atari games like Breakout and Pong. Instead of following hardcoded moves, the agent uses a deep neural network to observe the game screen and learns to control the paddle to maximize the score. This learning process happens over millions of plays, with each win or loss recorded as a reward or penalty, gradually improving the gameplay strategy.

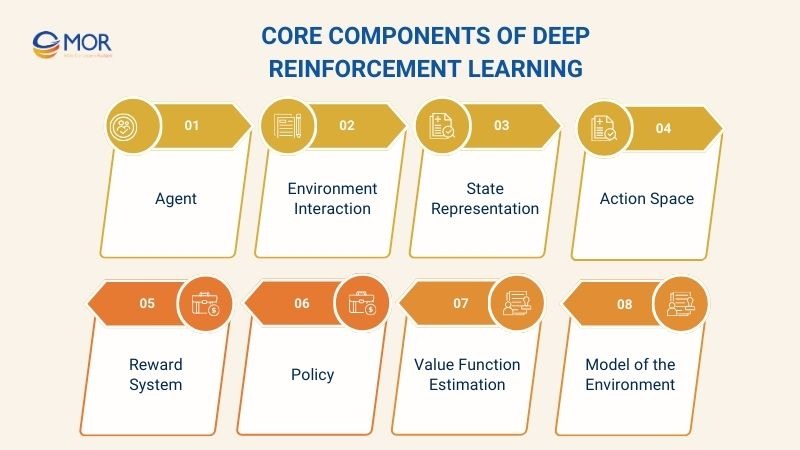

Core Components Of Deep Reinforcement Learning

A deep reinforcement learning system has several core components, each of which helps the agent learn and optimize its behavior through continuous interaction with the environment. The main components are described below:

Agent

In deep reinforcement learning, the agent is the central component responsible for making decisions and taking actions within the environment. The agent observes the current state of the environment and uses a learned policy to select optimal actions aimed at achieving long-term goals.

A key feature of the agent in reinforcement learning is its ability to continuously learn from real experiences through the observation-action-reward loop. The agent does not rely solely on static input data but adapts and adjusts its behavior based on feedback received from the environment.

Environment Interaction

In deep reinforcement learning, the interaction between the agent and the environment is the core of the learning process. The agent takes actions based on its current policy, and the environment responds with a new state and a reward. This cycle, known as the interaction loop, forms the foundation of reinforcement learning.

For example, in Atari games, each time the agent controls the paddle to hit the ball, the environment returns the corresponding score and a new game screen state. The agent uses this information to adjust its actions in subsequent plays, thereby maximizing the cumulative reward over time.

State Representation

State representation refers to how the agent perceives and encodes the current information from the environment. In deep RL, states can be images, sensor data, or other complex inputs. Efficient state representation helps the agent process information accurately and quickly.

Action Space

The action space defines the set of all possible actions the agent can choose from. In deep reinforcement learning, the action space can be discrete or continuous depending on the specific task.

For example, in chess, the action space consists of all valid moves (discrete), whereas a robotic arm might have a continuous action space involving various joint angles and applied forces.
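
The difference between the two kinds of action space can be sketched in a few lines. This is only an illustration: the names `DISCRETE_ACTIONS` and `JOINT_LIMITS`, and the joint limits themselves, are made up for the example and do not come from any particular library or robot.

```python
import random

# Discrete action space: a finite set of choices (hypothetical paddle controls).
DISCRETE_ACTIONS = ["left", "stay", "right"]

# Continuous action space: one real-valued command per joint, each within
# assumed (illustrative) lower and upper limits in radians.
JOINT_LIMITS = [(-1.57, 1.57), (-2.0, 2.0), (-0.5, 0.5)]


def sample_discrete(rng: random.Random) -> str:
    """Pick one of the finitely many actions uniformly at random."""
    return rng.choice(DISCRETE_ACTIONS)


def sample_continuous(rng: random.Random) -> list[float]:
    """Draw one real-valued angle per joint, uniformly within its limits."""
    return [rng.uniform(low, high) for low, high in JOINT_LIMITS]


rng = random.Random(0)
print(sample_discrete(rng))
print(sample_continuous(rng))
```

A discrete space can be enumerated, which is why value-based methods can compare every action; a continuous space cannot, so the agent has to output the action values directly.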

Reward System

The reward system guides the agent during learning by providing positive or negative feedback depending on the actions taken. It is at the core of both classical and deep reinforcement learning, helping the agent distinguish between good and bad behaviors.
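
Individual rewards are usually combined into a single long-term score. A common convention, assumed here rather than stated in this article, is the discounted return, where a discount factor `gamma` makes later rewards count slightly less than immediate ones. A minimal sketch:

```python
def discounted_return(rewards, gamma=0.99):
    """Sum rewards, weighting the reward at step t by gamma**t."""
    total = 0.0
    # Walk backwards so each step is one multiply-add: G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# A small penalty now followed by a larger reward later can still be worthwhile.
print(discounted_return([-1.0, 0.0, 5.0], gamma=0.9))
```

With `gamma=0.9` the example works out to roughly 3.05, so the sequence as a whole is judged good even though its first reward is negative.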

Policy

A policy is a mapping from states to actions that determines how the agent behaves in the environment. In deep RL, the policy is typically represented by deep neural networks, enabling the learning of complex and flexible strategies that surpass traditional methods.

For example, the REINFORCE algorithm directly optimizes the policy through gradient ascent, allowing the agent to learn stochastic policies suitable for environments with high randomness.
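
To make the idea concrete, here is a sketch of REINFORCE on the simplest possible task: a slot machine with several arms, where each episode is a single pull. The task, the win probabilities, the learning rate, and the function names are all assumptions chosen for illustration, and the policy is a plain softmax over a few numbers rather than a deep neural network.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    peak = max(prefs)
    exps = [math.exp(p - peak) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(win_probs, episodes=2000, lr=0.1, seed=0):
    """REINFORCE on a one-step task: each episode is a single arm pull."""
    rng = random.Random(seed)
    prefs = [0.0] * len(win_probs)  # policy parameters (theta)
    for _ in range(episodes):
        probs = softmax(prefs)
        # Sample an action from the stochastic policy.
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < win_probs[action] else 0.0
        # Gradient ascent: d/d theta_k log pi(action) = 1[k == action] - pi_k.
        for k in range(len(prefs)):
            grad_log_pi = (1.0 if k == action else 0.0) - probs[k]
            prefs[k] += lr * reward * grad_log_pi
    return softmax(prefs)


print(reinforce_bandit([0.2, 0.8]))
```

The learned probabilities should end up strongly favoring the second arm, which pays out more often. The policy stays stochastic throughout, which is what lets it keep exploring.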

Value Function Estimation

Value function estimation helps the agent predict expected future rewards when taking an action or following a specific policy. It is a critical part of reinforcement learning algorithms such as Q-learning and Deep Q-Network (DQN).

For example, in DQN, the neural network learns to estimate Q-values for each state-action pair, enabling the agent to choose the action with the highest value to maximize long-term rewards.
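
The update behind this idea can be sketched with a lookup table in place of the neural network. This is tabular Q-learning, not DQN itself, and the state names, learning rate `alpha`, and discount factor `gamma` are assumptions for the example.

```python
from collections import defaultdict


def greedy_action(q_table, state, actions):
    """Choose the action with the highest estimated Q-value in this state."""
    return max(actions, key=lambda a: q_table[(state, a)])


def q_learning_update(q_table, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9, done=False):
    """Move Q(state, action) toward reward + gamma * max_a' Q(next_state, a')."""
    best_next = 0.0 if done else max(q_table[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (target - q_table[(state, action)])


actions = ["left", "right"]
q = defaultdict(float)  # unseen state-action pairs start at 0.0
q_learning_update(q, "s0", "right", reward=1.0, next_state="s1", actions=actions)
print(greedy_action(q, "s0", actions))  # prints: right
```

DQN keeps the same target but replaces the table with a network that takes the state as input and outputs one Q-value per action, so it can generalize to states it has never seen.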

Model of the Environment

A model of the environment simulates or predicts how the environment will respond to the agent’s actions. In model-based reinforcement learning, the agent uses this model to plan actions ahead of time, improving learning efficiency and reducing the need for extensive real-world interactions.

For instance, a robot may use a physical model to predict the movement of its arm when changing joint angles, allowing it to plan precise motions without excessive trial and error in the real environment.

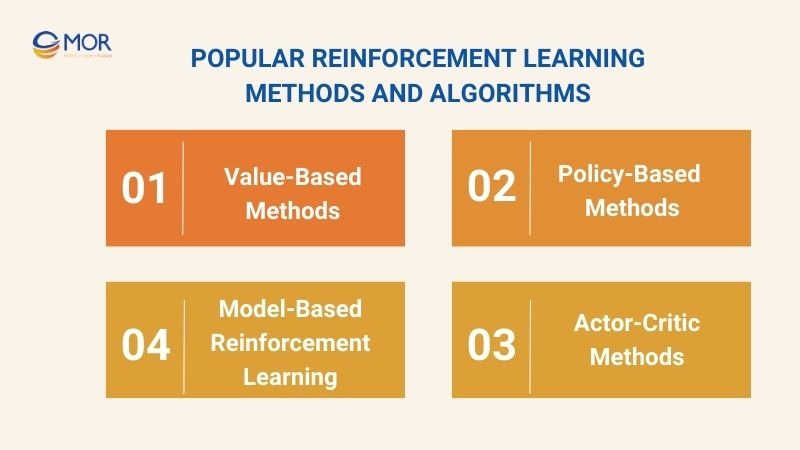

Popular Reinforcement Learning Methods And Algorithms

In the field of deep reinforcement learning, there are various methods and algorithms designed to solve the problem of optimizing an agent’s behavior. Below is an overview of the most popular algorithm groups in deep RL:

Value-Based Methods

Value-based methods focus on estimating the value function, which predicts the expected reward when the agent takes a specific action in a given state. Typical algorithms include Q-Learning and its deep learning extension, the Deep Q-Network (DQN).

Policy-Based Methods

Instead of estimating values, policy-based methods in deep reinforcement learning directly learn a policy, that is, a mapping from states to actions. Popular algorithms include REINFORCE and other policy gradient techniques.

Example: A robotic arm learning to pick up objects doesn’t estimate value for each movement but directly optimizes its movement strategy through policy gradients to perform smooth and precise actions in continuous action spaces.

Actor-Critic Methods

Actor-Critic methods combine two components: an actor that selects actions based on a policy, and a critic that estimates the value of those actions to help improve the policy. Common algorithms are A3C and PPO.

Example: In strategic games, the actor chooses moves according to its strategy while the critic evaluates those moves. This combination helps the agent learn faster and more stably in complex decision-making environments.
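
How the critic's evaluation feeds back into the actor can be sketched with a one-step advantage estimate. This is a deliberately reduced illustration: the actor is a single scalar parameter, the critic is a single scalar value, and the learning rates and function names are assumptions, not the A3C or PPO update rules.

```python
def td_advantage(reward, value_state, value_next, gamma=0.99, done=False):
    """One-step advantage estimate: r + gamma * V(s') - V(s)."""
    bootstrap = 0.0 if done else gamma * value_next
    return reward + bootstrap - value_state


def actor_critic_step(pref, value_state, advantage, grad_log_pi,
                      actor_lr=0.1, critic_lr=0.5):
    """Apply one update to a scalar actor parameter and a scalar critic value."""
    new_pref = pref + actor_lr * advantage * grad_log_pi  # actor: policy gradient
    new_value = value_state + critic_lr * advantage       # critic: TD(0) update
    return new_pref, new_value


# The move turned out better than the critic expected (advantage > 0),
# so the actor makes it more likely and the critic raises its estimate.
advantage = td_advantage(reward=1.0, value_state=0.5, value_next=0.0, done=True)
print(actor_critic_step(pref=0.0, value_state=0.5, advantage=advantage, grad_log_pi=0.5))
```

Scaling the policy update by the advantage instead of the raw reward is what reduces variance: only outcomes that differ from the critic's expectation change the policy.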

Model-Based Reinforcement Learning

Model-based reinforcement learning builds a model of the environment to predict future states and rewards before taking actions. This enables the agent to plan better and reduce the number of real-world interactions.

Example: In autonomous driving, instead of trying every action on the real road, the agent simulates different scenarios in a virtual model to choose the safest and most efficient driving strategy.
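
Planning inside a model can be sketched on a toy problem. Here the "model" is a hand-written function for an agent moving along a line toward a goal; the dynamics, the reward, and the names `model`, `plan`, and `GOAL` are assumptions for the example. A real system would learn the model or use a simulator, and would not be able to enumerate every action sequence.

```python
from itertools import product

ACTIONS = (-1, 0, 1)
GOAL = 3


def model(state, action):
    """Assumed known dynamics: move along a line; reward is -distance to GOAL."""
    next_state = state + action
    return next_state, -abs(GOAL - next_state)


def plan(state, horizon=3):
    """Simulate every action sequence in the model; return the best first action."""
    best_return, best_first = float("-inf"), None
    for sequence in product(ACTIONS, repeat=horizon):
        simulated, total = state, 0.0
        for action in sequence:
            simulated, reward = model(simulated, action)
            total += reward
        if total > best_return:
            best_return, best_first = total, sequence[0]
    return best_first


print(plan(0))  # prints: 1
```

All of the trial and error happens inside `model`, so the agent commits to a real action only after comparing the simulated outcomes.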

| Method | Learning Focus | Action Space Suitability | Use of the Environment Model | Typical Algorithm Examples | Key Innovation / Feature |
|---|---|---|---|---|---|
| Value-Based Methods | Estimate value function (Q-values) | Mainly discrete action spaces | Model-free | Q-Learning, Deep Q-Network (DQN) | Directly evaluate the expected reward of actions |
| Policy-Based Methods | Optimize policy directly | Supports continuous & discrete | Model-free | REINFORCE, Policy Gradient | Learns stochastic policies, handles continuous action smoothly |
| Actor-Critic Methods | Combines value estimation & policy optimization | Continuous & discrete | Model-free | A3C, PPO | Uses separate networks for policy (actor) & value (critic) to reduce variance and improve stability |
| Model-Based RL | Builds and uses environment models | Continuous & discrete | Model-based | Planning algorithms, Model Predictive Control | Predicts future states to plan better actions, improves sample efficiency |

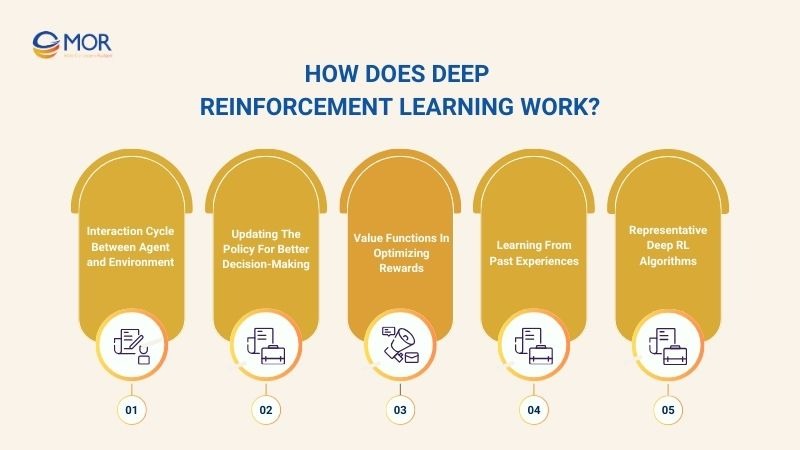

How Does Deep Reinforcement Learning Work?

In deep reinforcement learning, the learning process of an agent is based on a continuous interaction cycle between the agent and the environment. Below is an overview of the key steps in this interaction cycle:

Interaction Cycle Between Agent and Environment

In deep reinforcement learning, the interaction cycle between the agent and the environment follows a loop of four fundamental steps:

- Observing the current state

- Selecting an action

- Receiving a reward

- Updating the state

Upon receiving information about the current state, the agent uses its policy to decide the optimal action to maximize future rewards.

Example: In an Atari game like Breakout, the agent observes the screen, chooses paddle movements, receives scores as rewards, and updates its state to improve performance over time.
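
The four steps of the cycle map directly onto a short loop. The sketch below uses a tiny hand-made environment instead of a real Atari game; `ToyPaddleEnv`, its `reset` and `step` methods, and the `chase_ball` policy are hypothetical names invented for this example.

```python
import random


class ToyPaddleEnv:
    """Hypothetical stand-in for a game: move a paddle under a fixed ball."""

    def __init__(self, width=5, ball=3, max_steps=10):
        self.width, self.ball, self.max_steps = width, ball, max_steps

    def reset(self):
        self.paddle, self.steps = 0, 0
        return (self.paddle, self.ball)

    def step(self, action):
        # action is -1 (left), 0 (stay), or +1 (right); clamp to the screen.
        self.paddle = min(self.width - 1, max(0, self.paddle + action))
        self.steps += 1
        reward = 1.0 if self.paddle == self.ball else 0.0
        done = self.steps >= self.max_steps
        return (self.paddle, self.ball), reward, done


def run_episode(env, policy):
    state = env.reset()                         # 1. observe the current state
    total_reward, done = 0.0, False
    while not done:
        action = policy(state)                  # 2. select an action
        state, reward, done = env.step(action)  # 3. receive a reward, 4. update the state
        total_reward += reward
    return total_reward


def chase_ball(state):
    """Hand-written policy: step toward the ball, then stay under it."""
    paddle, ball = state
    return (ball > paddle) - (ball < paddle)


rng = random.Random(0)
print(run_episode(ToyPaddleEnv(), lambda state: rng.choice([-1, 0, 1])))
print(run_episode(ToyPaddleEnv(), chase_ball))  # prints: 8.0
```

A random policy collects only occasional reward, while the hand-written one collects it on every step once the paddle is in place. Learning is the process of moving from the first kind of policy toward the second without being told the rule.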

Updating The Policy For Better Decision-Making

In deep reinforcement learning, the agent adjusts its policy based on the rewards it obtains from these interactions. Algorithms use gradient-based optimization, such as gradient descent on a loss function, to update the policy parameters, enabling the agent to select better actions in future states and maximize the accumulated reward over time.

Value Functions In Optimizing Rewards

The value function in deep reinforcement learning enables the agent to predict expected future rewards when taking an action or following a specific policy. Estimating the value function helps the agent evaluate potential actions and guide the action selection process to maximize long-term rewards.

Learning From Past Experiences

Many deep reinforcement learning algorithms, DQN among them, use experience replay to store and reuse past interaction data. This technique helps the agent learn more stably by breaking the correlation between consecutive samples and by letting each experience be used more than once.

In DQN, for example, the replay buffer stores a large number of past experiences, and small batches are sampled from it at random during training. This enables the agent to update its value function based on diverse data, improving learning speed and stability in dynamic environments.
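
A replay buffer is a small data structure, and a minimal version fits in a few lines. The class name, the tuple layout, and the tiny capacity below are assumptions for illustration; production buffers hold far more transitions and usually store arrays rather than Python tuples.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Draw a minibatch uniformly at random, without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.add(state=t, action=0, reward=1.0, next_state=t + 1, done=False)
print(len(buffer))  # prints: 3 (only the most recent transitions remain)
print(buffer.sample(2))
```

Sampling at random means one training batch mixes transitions from different moments of play, which is what reduces the dependency on sequential data.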

Representative Deep RL Algorithms

Several prominent algorithms have demonstrated high effectiveness across various problems in deep reinforcement learning. The Deep Q-Network (DQN) focuses on learning the Q-value function using deep neural networks, which helps agents solve large state-space problems.

Advantage Actor-Critic (A2C) and Asynchronous Advantage Actor-Critic (A3C) combine value function learning and policy optimization. These algorithms extend the applicability of deep reinforcement learning to more complex and continuous environments.

Real-World Applications Of Deep Reinforcement Learning

In practice, deep RL is increasingly applied across various fields, from entertainment to industry and healthcare. Below are the key areas where deep reinforcement learning demonstrates significant effectiveness:

Game Playing

Game playing is one of the most iconic and easy-to-understand demonstrations of how deep reinforcement learning algorithms work. Agents are trained to autonomously learn and master complex games such as board games, classic Atari games, or modern real-time strategy games.

Example: AlphaGo – a deep reinforcement learning system that defeated world champion Go players. The operation flow of AlphaGo includes:

- Collecting professional Go game data from top players and using it to pre-train a deep neural network.

- Training via reinforcement learning, with the agent playing numerous matches against itself to improve both the policy and value function.

- Combining the deep neural network with the Monte Carlo Tree Search (MCTS) algorithm to evaluate potential moves and select the optimal action in each situation.

- Continuously refining the policy based on game outcomes to enhance gameplay performance.

Robotics and Automation

In robotics, deep reinforcement learning enables robots to learn complex tasks through interaction and feedback, both in simulation and in the real world. This allows robots to autonomously adjust their behavior and optimize task performance.

Example: Robotic object grasping using deep RL:

- Initialize the robot with basic actions and sensory inputs.

- Perform various actions in a simulated environment.

- Gather feedback in the form of rewards based on the precision and safety of grasping.

- Update the control policy using the recorded experiences.

- Repeat the cycle until the robot can reliably grasp objects in real-world settings.

Financial Modeling and Trading

Deep reinforcement learning is applied in finance to predict market movements and optimize investment strategies. Agents learn to make decisions about buying, selling, or holding financial instruments based on historical data and market signals.

Example: Deep RL in stock trading – process flow:

- Collect historical price data and market indicators.

- Build an agent model with the state space representing current financial indicators.

- The agent decides to buy, sell, or hold based on the current policy.

- Receive rewards based on profits or losses from trades.

- Update the policy to improve investment performance over multiple trading sessions.
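
The decide-and-reward part of this flow can be sketched as a single step function. Everything here is a toy assumption for illustration: one asset, a position of either zero or one unit, made-up prices, and no transaction costs, slippage, or risk limits. It is not a trading strategy.

```python
def trading_step(position, action, price_now, price_next):
    """Return (new_position, reward) for one step of a toy single-asset market.

    position: units held (0 or 1); action: "buy", "sell", or "hold".
    """
    if action == "buy":
        position = 1
    elif action == "sell":
        position = 0
    # Reward is the profit or loss on whatever is held over the next price move.
    reward = position * (price_next - price_now)
    return position, reward


prices = [100.0, 102.0, 101.0, 105.0]
actions = ["buy", "hold", "sell"]
position, total = 0, 0.0
for t, action in enumerate(actions):
    position, reward = trading_step(position, action, prices[t], prices[t + 1])
    total += reward
print(total)  # prints: 1.0
```

In a learning setup the fixed `actions` list would be replaced by the agent's policy, and the per-step rewards would drive the policy update.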

Healthcare and Medical Decision Support

In healthcare, deep reinforcement learning improves diagnosis quality and treatment planning by analyzing patient data and simulating treatment options. Agents learn to propose optimal treatment plans based on medical history and patient responses.

Example: Deep RL for personalized treatment – workflow:

- Collect patient medical records and past treatment results.

- The agent observes the patient’s current health status.

- Recommend a treatment plan based on the learned policy.

- Receive feedback from the actual treatment outcome (reward or penalty).

- Update the policy to enhance future treatment recommendations.

Autonomous Vehicles and Transportation

Deep reinforcement learning plays a critical role in developing self-driving cars and optimizing transportation systems. Agents learn to navigate complex traffic environments, handle diverse scenarios, and make safe, efficient decisions.

Example: Deep RL for self-driving cars – process flow:

- The vehicle collects sensor data and the current environmental state.

- The agent selects actions such as accelerating, braking, or steering based on its current policy.

- The environment provides feedback in the form of a new state and rewards related to safety and efficiency.

- Update the policy to improve driving skills over multiple interactions.

- Apply deep RL to traffic signal control systems to reduce congestion.

Key Challenges Facing Deep Reinforcement Learning Today

In the process of development and application, deep reinforcement learning not only offers significant potential but also faces major challenges. Below are the most notable issues that deep reinforcement learning often encounters.

Sample Efficiency and Data Requirements

Deep reinforcement learning algorithms often require a large volume of training data and extensive experience from the environment to learn an optimal policy. This leads to long training times and high computational costs, especially in complex environments or those with large state spaces.

The problem becomes more severe when data collection is costly or risky, such as in robotics, where each trial action can cause equipment damage or safety hazards.

To improve sample efficiency, researchers often adopt techniques such as:

- Replay Buffer: Storing and reusing past experience to maximize the value of collected data.

- Model-Based reinforcement learning: Building a simulated environment to reduce the number of real-world interactions required.

- Transfer Learning: Leveraging knowledge from similar tasks to shorten the learning process.

Stability and Convergence Issues

In deep reinforcement learning, ensuring stability and convergence during training is a major challenge. Because deep RL combines reinforcement learning with deep neural networks, training can become unstable if parameters change too rapidly or if the policy fluctuates excessively.

This instability can lead to divergence, causing the agent to learn suboptimal or even nonsensical behaviors that harm overall performance.

Scalability and Computational Demand

Deep reinforcement learning demands significant computational resources, especially when dealing with complex environments and large state-action spaces. To achieve strong results, an agent may need to process millions to billions of training steps, alongside training deep neural networks with millions of parameters.

A notable example is the Rainbow algorithm, which integrates several improvements over DQN. According to figures reported by Google Research, training Rainbow across the full Atari 57 benchmark requires about 34,200 GPU hours (equivalent to 1,425 GPU days) to obtain reliable evaluation results for every game.

Safety and Ethical Concerns

In deep reinforcement learning, safety and ethical considerations are critical when deploying agents in real-world scenarios. If an agent learns incorrectly or behaves unexpectedly, the consequences can be severe.

Example: A self-driving system trained with deep reinforcement learning might optimize for travel speed and fuel efficiency. However, if the model is not sufficiently trained for emergency scenarios or lacks comprehensive coverage in its training data, the agent could make dangerous decisions posing serious safety risks.

Trends And Future Directions In Deep Reinforcement Learning

Many experts see deep reinforcement learning as a key step toward Artificial General Intelligence (AGI). Its strength lies in learning from both real-world interaction and simulation. Newer approaches such as world models and meta-learning can increase adaptability, giving agents richer and more flexible behaviors, even outside predefined environments.

Combining deep reinforcement learning from human preferences with model-based RL can speed up training and improve safety. In some cases, model-based RL uses only 10% real-world data, while the remaining 90% comes from simulation. This greatly improves sample efficiency compared to model-free methods.
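
One way to picture such a split is a training batch that draws a fixed share of its transitions from real data and the rest from simulation. The function below is a hypothetical sketch of that mixing step only; the name `mixed_batch`, the data layout, and the batch size are assumptions, and real systems differ in how they generate and weight simulated data.

```python
import random


def mixed_batch(real, simulated, batch_size, real_fraction=0.1, seed=None):
    """Sample a training batch with a fixed share of real transitions."""
    rng = random.Random(seed)
    n_real = round(batch_size * real_fraction)
    batch = rng.sample(real, n_real) + rng.sample(simulated, batch_size - n_real)
    rng.shuffle(batch)  # avoid ordering the batch by data source
    return batch


real = [("real", i) for i in range(50)]
simulated = [("sim", i) for i in range(500)]
batch = mixed_batch(real, simulated, batch_size=20, seed=0)
print(sum(1 for source, _ in batch if source == "real"))  # prints: 2
```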

In the future, deep reinforcement learning will aim beyond human-level control on a single task. It will focus on continuous control, handling multiple tasks in sequence and supporting long-term interaction.

Benchmarks like cart-pole swing-up, humanoid locomotion, and manipulation tasks already show that deep RL can manage continuous and complex challenges.

In Conclusion

Deep reinforcement learning has moved beyond the realm of research, becoming a powerful driver of innovation and optimization in real-world business operations. By partnering with MOR Software, your business can design, develop, and deploy AI solutions tailored to your industry’s specific needs. Contact MOR Software today to build a sustainable competitive advantage with cutting-edge AI technologies.
