Semi Supervised Learning Explained: Guide To Techniques And Uses

Are you unsure whether to choose supervised learning or unsupervised learning for your business? With massive amounts of data being generated every day, effectively leveraging both labeled and unlabeled data is key to optimizing machine learning models. In this article, MOR Software will introduce a balanced approach – semi supervised learning – that helps your business maximize data value and improve model performance.

What Is Semi Supervised Learning?

Semi supervised learning is a method that uses both labeled and unlabeled data simultaneously to train a model. By combining labeled data with a large amount of unlabeled data, the model can learn more about the data structure, which helps improve prediction performance.

For example:

In the field of image processing, a semi supervised machine learning system can use a small set of labeled images (for example, images tagged with object classes) together with thousands of unlabeled images to increase the model’s accuracy.

Semi Supervised Learning vs Supervised Learning vs Unsupervised Learning

In the field of machine learning, there are three popular learning methods: supervised learning, unsupervised learning, and semi supervised learning. Each method approaches data differently and is suitable for specific types of problems.

| Criteria | Supervised Learning | Unsupervised Learning | Semi Supervised Learning |
| --- | --- | --- | --- |
| Data Used | Fully labeled data | Unlabeled data | A combination of labeled and unlabeled data |
| Accuracy | High if enough labeled data is available | Lower accuracy due to a lack of labels | Usually higher than unsupervised, close to supervised |
| Data Labeling Cost | High, requires labeling the entire dataset | Low, no labeling required | Lower than supervised, saves cost by using unlabeled data |
| Common Applications | Classification, prediction with labeled data | Clustering, dimensionality reduction | Image processing, natural language processing, speech recognition |
| Training Complexity | Medium to high due to labeled data requirement | Low to medium | Medium, combines supervised and unsupervised techniques |
| Required Domain Knowledge | High to label data accurately | Low, automatic discovery of data structure | Medium, needs understanding of both labeled and unlabeled data |
| Robustness to Noise | Low if labeled data contains errors | Relatively high due to no reliance on labels | Medium, requires techniques to handle noisy data |

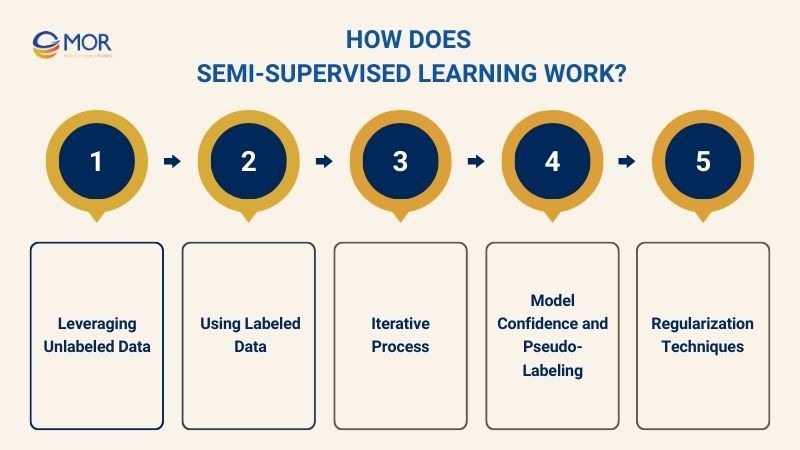

How Does Semi Supervised Learning Work?

Semi supervised learning combines labeled and unlabeled data to build more accurate and efficient machine learning models. The sections below explain how each part of the process works.

Leveraging Unlabeled Data

In semi supervised learning, unlabeled data plays a crucial role because most real-world data is unlabeled. It provides a rich source of information that helps the model better understand the overall data structure and distribution.

Used properly, unlabeled data can significantly reduce data labeling costs while maintaining model performance.

Using Labeled Data

Labeled data remains essential in semi supervised learning. Using labeled data enables the model to learn precise and clear relationships within the data, providing an anchor for the training process.

Labeled data acts as a quality benchmark, ensuring the model does not get misled when learning from unlabeled data.

Iterative Process

Training in semi supervised learning is typically iterative. In each cycle, the model learns from labeled data, predicts labels for unlabeled data, and updates itself based on these pseudo-labels.

With each iteration, the model gradually improves its predictive ability, increasing confidence and accuracy. This repetition lets the model exploit the potential of unlabeled data while limiting errors caused by inaccurate pseudo-labels.

Model Confidence and Pseudo-Labeling

An important technique in semi supervised learning is pseudo-labeling, which relies on model confidence. The model assigns pseudo-labels only to unlabeled examples that it predicts with high confidence.

Creating pseudo-labels for unlabeled data helps expand the labeled dataset without manual annotation efforts.
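To make the idea concrete, here is a minimal Python sketch of confidence-based pseudo-label selection. The function name `select_pseudo_labels`, the sample probabilities, and the 0.9 threshold are illustrative assumptions, not values taken from a specific library or study.

```python
def select_pseudo_labels(probabilities, threshold=0.9):
    """Return (index, predicted_class, confidence) for confident predictions.

    `probabilities` holds one list of per-class probabilities per unlabeled
    example. `threshold` is an illustrative cut-off, not a recommended value.
    """
    selected = []
    for index, class_probs in enumerate(probabilities):
        confidence = max(class_probs)
        if confidence >= threshold:
            predicted_class = class_probs.index(confidence)
            selected.append((index, predicted_class, confidence))
    return selected


# Hypothetical model outputs for four unlabeled examples and three classes.
predicted = [
    [0.95, 0.03, 0.02],  # confident: class 0
    [0.40, 0.35, 0.25],  # uncertain: skipped
    [0.05, 0.92, 0.03],  # confident: class 1
    [0.10, 0.15, 0.75],  # below the threshold: skipped
]

pseudo_labeled = select_pseudo_labels(predicted, threshold=0.9)
print(pseudo_labeled)  # prints [(0, 0, 0.95), (2, 1, 0.92)]
```

A higher threshold keeps fewer but cleaner pseudo-labels, while a lower one adds more data at the risk of more labeling mistakes.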

Regularization Techniques

To prevent overfitting when combining labeled and unlabeled data, various regularization techniques are applied in semi supervised learning. Common methods include dropout, data augmentation, and adjusted loss functions that help control model complexity.

Applying regularization in semi supervised learning ensures the model performs effectively even when unlabeled data may contain noise or incorrect information.
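One common way to "adjust" the loss function is to give pseudo-labeled examples less weight than human-labeled ones, so that mistakes in pseudo-labels do less damage. The sketch below assumes a cross-entropy loss and a hypothetical `unlabeled_weight` of 0.5. Both are illustrative choices, not a prescribed recipe.

```python
import math


def cross_entropy(class_probs, label):
    """Negative log-likelihood of `label` under the predicted probabilities."""
    return -math.log(class_probs[label])


def combined_loss(labeled_batch, pseudo_batch, unlabeled_weight=0.5):
    """Mean loss on labeled data plus a down-weighted mean pseudo-label loss.

    Each batch is a list of (predicted_probabilities, label) pairs.
    """
    supervised = sum(cross_entropy(p, y) for p, y in labeled_batch) / len(labeled_batch)
    if not pseudo_batch:
        return supervised
    unsupervised = sum(cross_entropy(p, y) for p, y in pseudo_batch) / len(pseudo_batch)
    return supervised + unlabeled_weight * unsupervised


# Hypothetical predictions: two human-labeled examples, one pseudo-labeled example.
labeled_batch = [([0.8, 0.2], 0), ([0.3, 0.7], 1)]
pseudo_batch = [([0.6, 0.4], 0)]

loss = combined_loss(labeled_batch, pseudo_batch, unlabeled_weight=0.5)
print(round(loss, 4))
```

Setting `unlabeled_weight` to 0 falls back to purely supervised training. In practice the weight is often increased gradually as the pseudo-labels become more trustworthy.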

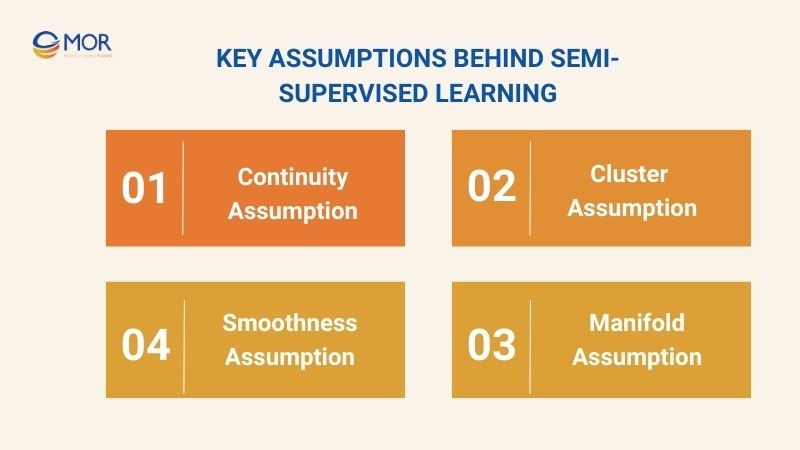

Key Assumptions Behind Semi Supervised Learning

To leverage both labeled and unlabeled data effectively, semi supervised learning relies on several key assumptions about the data. Understanding these assumptions is essential for applying the techniques properly and achieving good results.

Continuity Assumption

The continuity assumption in semi supervised learning assumes that if two data points are close to each other in the feature space, they are likely to share the same label. In other words, the labels change smoothly and continuously in response to changes in the features.

For example, suppose you have an image dataset containing dogs and cats. If two images have very similar colors, shapes, and sizes (located close together in the feature space), they are likely both images of dogs or both images of cats.

Cluster Assumption

The cluster assumption in semi supervised learning suggests that data points tend to form distinct groups or clusters, and points within the same cluster usually share the same label. The model can leverage this structure to assign labels to unlabeled data points based on the cluster they belong to.

For example, in a text classification task, documents with similar content tend to be grouped in a cluster. If you know the labels of some documents within the cluster, you can infer the labels of the unlabeled documents in the same cluster.
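A simple way to act on the cluster assumption is "cluster-then-label": group all points first, then give every point in a cluster the majority label of the few labeled points it contains. The sketch below uses a small hand-written k-means on made-up 2-D document embeddings. The coordinates, category names, and starting centroids are assumptions for illustration only.

```python
from collections import Counter


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centroids):
    """Index of the centroid closest to `point`."""
    return min(range(len(centroids)), key=lambda i: squared_distance(point, centroids[i]))


def kmeans(points, initial_centroids, iterations=10):
    """Plain k-means; returns the cluster index assigned to each point."""
    centroids = list(initial_centroids)
    for _ in range(iterations):
        assignments = [nearest(p, centroids) for p in points]
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return [nearest(p, centroids) for p in points]


def cluster_then_label(points, known_labels, initial_centroids):
    """`known_labels` maps point index -> label for the few labeled points."""
    assignments = kmeans(points, initial_centroids)
    votes = {}
    for index, label in known_labels.items():
        votes.setdefault(assignments[index], Counter())[label] += 1
    # Clusters without any labeled point get None instead of a guess.
    return [
        votes[cluster].most_common(1)[0][0] if cluster in votes else None
        for cluster in assignments
    ]


# Hypothetical 2-D embeddings of six documents; only documents 0 and 3 are labeled.
documents = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
known = {0: "billing", 3: "support"}

inferred = cluster_then_label(documents, known, initial_centroids=[(0.0, 0.0), (6.0, 6.0)])
print(inferred)
```

With two well-separated groups, each unlabeled document inherits the label of the one labeled document in its cluster. If clusters overlap or mix classes, this shortcut can spread wrong labels.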

Manifold Assumption

The manifold assumption in semi supervised learning assumes that complex data lie on a lower-dimensional space called a manifold, which is embedded within the high-dimensional feature space. Models can exploit this manifold structure to learn more effectively from limited labeled data.

For example, in a facial recognition task, images can lie on a low-dimensional manifold representing variations like pose, lighting, or expression. Understanding and utilizing this manifold helps the model make more accurate predictions on unlabeled data.

Smoothness Assumption

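The smoothness assumption in semi supervised learning means that data points close to each other usually share similar labels, and that labels change gradually rather than abruptly across the feature space. It is closely related to the continuity assumption described above, and many references treat the two as a single assumption.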

For example, in an audio classification task, audio segments with similar features (such as the same speaker or music type) usually share the same label. Therefore, the classification function should reflect smooth transitions between these types to achieve better learning performance.

Top Common Techniques In Semi Supervised Learning

In the field of semi supervised learning, several common techniques are used to leverage both labeled and unlabeled data effectively. Understanding and correctly applying these methods helps you develop more efficient semi supervised learning models:

Self-Training

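Self-training is one of the most popular techniques in semi supervised learning. This method starts with a model trained on an initial set of labeled data. The model then predicts labels for the unlabeled data, and the predictions it is most confident about are added to the training set as pseudo-labels before the model is retrained.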

This process repeats iteratively, allowing the model to gradually improve its ability to learn from both labeled and unlabeled data. Self-training effectively leverages large amounts of unlabeled data, reducing data labeling cost while increasing the accuracy of the model.
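The loop can be sketched in a few lines of Python. To stay self-contained, this example swaps in a very simple nearest-centroid classifier as the base model and derives confidence from a softmax over negative distances. The data points, class names, and 0.9 threshold are all assumptions for illustration.

```python
import math


def fit_centroids(points, labels):
    """Nearest-centroid 'model': the mean point of each class."""
    grouped = {}
    for point, label in zip(points, labels):
        grouped.setdefault(label, []).append(point)
    return {
        label: tuple(sum(dim) / len(members) for dim in zip(*members))
        for label, members in grouped.items()
    }


def predict_with_confidence(centroids, point):
    """Return (label, confidence) from a softmax over negative distances."""
    scores = {label: math.exp(-math.dist(point, c)) for label, c in centroids.items()}
    label = max(scores, key=scores.get)
    return label, scores[label] / sum(scores.values())


def self_train(labeled, unlabeled, threshold=0.9, max_rounds=5):
    """Grow the training set with confident pseudo-labels, then retrain."""
    points = [p for p, _ in labeled]
    labels = [y for _, y in labeled]
    remaining = list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(points, labels)
        still_unlabeled = []
        for point in remaining:
            label, confidence = predict_with_confidence(centroids, point)
            if confidence >= threshold:
                points.append(point)   # accept the pseudo-label
                labels.append(label)
            else:
                still_unlabeled.append(point)
        if len(still_unlabeled) == len(remaining):
            break  # nothing new was added, so further rounds would not help
        remaining = still_unlabeled
    return fit_centroids(points, labels), remaining


# Hypothetical 2-D features: two labeled images and five unlabeled ones.
labeled = [((0.0, 0.0), "cat"), ((6.0, 6.0), "dog")]
unlabeled = [(0.5, 0.5), (5.5, 5.8), (1.0, 0.2), (3.0, 3.0), (2.0, 2.0)]

model, remaining = self_train(labeled, unlabeled)
print(remaining)  # points the model never became confident about
```

In this toy run, the point halfway between the two classes never reaches the threshold and stays unlabeled. That is the intended behavior: ambiguous examples are left out rather than guessed.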

Co-Training

Co-training is a popular semi supervised learning technique that trains two or more separate models on different feature sets (often called views) of the same dataset. Each model is first trained on the labeled data using its own view and then predicts labels for the unlabeled data.

Confident predictions from one model are used to augment the labeled dataset for the other model, enabling the models to teach each other.
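Below is a minimal sketch of that exchange, assuming each example has two numeric views and each model is a simple nearest-mean classifier. The views, the `min_margin` confidence rule, and the sample values are hypothetical and chosen only to keep the example short.

```python
def fit_means(examples):
    """1-D nearest-mean model: the average feature value per class."""
    sums = {}
    for value, label in examples:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + value, count + 1)
    return {label: total / count for label, (total, count) in sums.items()}


def predict(means, value):
    """Return (label, margin); margin is how much closer the best class mean is."""
    ranked = sorted(means, key=lambda label: abs(value - means[label]))
    best, runner_up = ranked[0], ranked[1]
    return best, abs(value - means[runner_up]) - abs(value - means[best])


def co_train(labeled, unlabeled, min_margin=2.0, rounds=3):
    """labeled: [((view_a, view_b), label)]; unlabeled: [(view_a, view_b)]."""
    train_a = [(a, y) for (a, _), y in labeled]
    train_b = [(b, y) for (_, b), y in labeled]
    pool = list(unlabeled)
    for _ in range(rounds):
        model_a, model_b = fit_means(train_a), fit_means(train_b)
        leftover = []
        for a, b in pool:
            label_a, margin_a = predict(model_a, a)
            label_b, margin_b = predict(model_b, b)
            taught = False
            if margin_a >= min_margin:      # model A is confident: it teaches B
                train_b.append((b, label_a))
                taught = True
            if margin_b >= min_margin:      # model B is confident: it teaches A
                train_a.append((a, label_b))
                taught = True
            if not taught:
                leftover.append((a, b))
        pool = leftover
    return fit_means(train_a), fit_means(train_b), pool


# Hypothetical emails described by two views (for example, link count and a text score).
labeled = [((1.0, 1.0), "spam"), ((9.0, 9.0), "ham")]
unlabeled = [(1.5, 4.6), (5.4, 8.5), (4.2, 4.4)]

model_a, model_b, leftover = co_train(labeled, unlabeled)
print(model_a, model_b, leftover)
```

Co-training works best when the two views are each informative on their own and not strongly redundant. If both views make the same mistakes, the models simply reinforce each other's errors.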

Graph-Based Methods

Graph-based methods in semi supervised learning build a graph where each node is a data point, and edges show how similar these points are. Labels from labeled data points propagate through the edges to assign labels to unlabeled data based on their connections.
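The following sketch shows one simple form of label propagation, assuming a small hand-built similarity graph. Unlabeled nodes repeatedly take the weighted average of their neighbors' class scores, while labeled nodes stay fixed. The edge weights and class names are made up for illustration.

```python
def propagate_labels(edges, seed_labels, num_nodes, classes, iterations=50):
    """Spread labels over an undirected graph given as {(i, j): similarity}."""
    neighbors = {node: [] for node in range(num_nodes)}
    for (i, j), weight in edges.items():
        neighbors[i].append((j, weight))
        neighbors[j].append((i, weight))

    # One-hot scores for labeled nodes, uniform scores for everything else.
    scores = {}
    for node in range(num_nodes):
        if node in seed_labels:
            scores[node] = {c: 1.0 if c == seed_labels[node] else 0.0 for c in classes}
        else:
            scores[node] = {c: 1.0 / len(classes) for c in classes}

    for _ in range(iterations):
        updated = {}
        for node in range(num_nodes):
            if node in seed_labels or not neighbors[node]:
                updated[node] = scores[node]  # keep known labels fixed
                continue
            total = sum(weight for _, weight in neighbors[node])
            updated[node] = {
                c: sum(weight * scores[n][c] for n, weight in neighbors[node]) / total
                for c in classes
            }
        scores = updated

    return {node: max(classes, key=lambda c: scores[node][c]) for node in range(num_nodes)}


# A chain of six nodes with one weak link (0.1) between nodes 2 and 3.
edges = {(0, 1): 0.9, (1, 2): 0.8, (2, 3): 0.1, (3, 4): 0.8, (4, 5): 0.9}
seeds = {0: "billing", 5: "support"}

result = propagate_labels(edges, seeds, num_nodes=6, classes=["billing", "support"])
print(result)
```

Because the link between nodes 2 and 3 is weak, each label spreads through its own strongly connected side of the graph and stops at that boundary.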

Generative Models

Generative models are statistical models that learn the underlying data distribution from both labeled and unlabeled data. Examples include Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), which can generate new data samples or learn efficient data representations.
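VAEs and GANs are too large to show in a short snippet, so the sketch below substitutes a much simpler generative model: a two-class, one-dimensional Gaussian mixture fitted with expectation-maximization (EM). Labeled points keep their known class, while unlabeled points contribute through soft class memberships. The data values and starting parameters are assumptions for illustration.

```python
import math


def gaussian_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def semi_supervised_gmm(labeled, unlabeled, iterations=20):
    """Fit a two-class 1-D Gaussian mixture with EM.

    labeled: [(x, class_index)] with class_index in {0, 1}; unlabeled: [x].
    """
    classes = (0, 1)
    # Initialize each class mean from its labeled points only.
    means = []
    for k in classes:
        values = [x for x, y in labeled if y == k]
        means.append(sum(values) / len(values))
    stds = [1.0, 1.0]
    priors = [0.5, 0.5]
    xs = [x for x, _ in labeled] + list(unlabeled)

    for _ in range(iterations):
        # E-step: labeled points keep hard memberships, unlabeled get soft ones.
        memberships = [[1.0 if y == k else 0.0 for k in classes] for _, y in labeled]
        for x in unlabeled:
            weighted = [priors[k] * gaussian_pdf(x, means[k], stds[k]) for k in classes]
            total = sum(weighted)
            memberships.append([w / total for w in weighted])

        # M-step: re-estimate each class from all points, weighted by membership.
        for k in classes:
            weight = sum(m[k] for m in memberships)
            means[k] = sum(m[k] * x for m, x in zip(memberships, xs)) / weight
            variance = sum(m[k] * (x - means[k]) ** 2 for m, x in zip(memberships, xs)) / weight
            stds[k] = max(math.sqrt(variance), 1e-3)  # floor keeps a class from collapsing
            priors[k] = weight / len(xs)
    return means, stds, priors


# Hypothetical data: one labeled point per class plus eight unlabeled points.
labeled = [(1.0, 0), (9.0, 1)]
unlabeled = [0.5, 1.5, 2.0, 1.2, 8.0, 8.5, 9.5, 10.0]

means, stds, priors = semi_supervised_gmm(labeled, unlabeled)
print([round(m, 2) for m in means])
```

With only one labeled point per class, the unlabeled points are what shape the estimated spread of each class, which is the main benefit a generative approach offers in this setting.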

Advantages Of Semi Supervised Learning For Your Business

Semi supervised learning offers significant advantages for businesses, especially when handling large volumes of data with limited labeled data available. These benefits make semi supervised learning a strong choice for companies looking to enhance efficiency in applying machine learning.

Reduced Labeling Costs

Reduced labeling costs are one of the biggest benefits of using semi supervised learning. In practice, collecting and labeling data can be time-consuming and expensive, especially for large or complex datasets such as images, audio, or specialized text.

With semi supervised learning, labeling costs can reportedly be reduced by up to 47%, while delivering a return on investment (ROI) that is 3.2 times higher than traditional manual labeling methods.

Improved Model Performance

Semi supervised learning is especially effective when the amount of labeled data is limited, but there is a large volume of unlabeled data available. This helps businesses develop robust machine learning models, reducing errors and increasing reliability.

For example, the EPASS (Ensemble Projectors Aided for Semi-Supervised Learning) method reports improved results over strong semi supervised baselines on the ImageNet dataset, with a figure of 31.39% top-1 error cited for one of its limited-label settings.

Scalability with Large Unlabeled Data

A key advantage of semi supervised learning is its ability to efficiently handle large datasets, especially unlabeled data. Semi supervised models use large amounts of unlabeled data instead of relying only on labeled data.

This helps businesses scale their data easily without greatly increasing manual labeling costs.

Better Generalization

Semi supervised learning helps models avoid overfitting when the amount of labeled data is small and not diverse enough. By learning from unlabeled data, models better understand the data structure, which improves accuracy on cases the labeled set does not cover.

As a result, the model not only performs well on training data but also maintains high performance when applied to new real-world data, helping businesses achieve more sustainable and reliable outcomes.

Real-World Applications Of Semi Supervised Learning

In practice, semi supervised learning is increasingly being applied across many important fields. Below are some key real-world applications of semi supervised learning:

Natural Language Processing (NLP)

Semi supervised learning in natural language processing (NLP) improves model performance when labeled data is limited but unlabeled data is abundant. This method enables models to learn complex semantic features from unlabeled data while effectively utilizing labeled data to enhance accuracy.

Example: A company wants to classify customer emails such as “technical support,” “billing,” and “product feedback,” but only has a small portion of emails labeled. The semi supervised learning process involves:

- Training the model on the limited labeled email dataset to learn the basic features of each email category.

- Predicting categories for the unlabeled emails with the trained model.

- Adding emails with high-confidence predictions to the labeled dataset, thereby expanding the training data.

- Retraining the model iteratively with the expanded dataset to improve classification by leveraging both labeled and unlabeled data.

Computer Vision

Semi supervised learning in computer vision enhances object recognition and image classification by leveraging unlabeled data. This allows the model to learn more complex image features and improve accuracy.

Example: A tech company develops an object recognition system for surveillance cameras and has 10,000 clearly labeled images and over 100,000 unlabeled images.

The semi supervised learning workflow includes:

- Training a deep learning model on the 10,000 labeled images to learn fundamental object features like shape, color, and position.

- Predicting labels on the 100,000 unlabeled images, selecting only those with confidence scores above 90%.

- Adding these high-confidence pseudo-labeled images to the original labeled dataset, significantly increasing the labeled data volume.

- Retraining the model multiple times with the expanded dataset to improve object recognition accuracy under varied conditions.

Healthcare

In healthcare, semi supervised learning supports building effective disease prediction systems and medical image analysis when labeled data is scarce due to high costs and strict privacy regulations.

Example: A hospital aims to develop a cancer diagnosis model from X-ray images, but has only a limited number of expert-labeled images.

The process is:

- Training the model on the labeled data to recognize cancer features.

- Using the model to predict labels on unlabeled X-rays, selecting high-confidence predictions.

- Creating an expanded dataset from these confident predictions to retrain the model, improving detection accuracy.

Fraud Detection

Semi supervised learning aids in detecting financial fraud and anomalies when labeled fraud examples are scarce but unlabeled transaction data is plentiful.

Example: A bank wants to detect fraudulent transactions based on historical data with a small fraction labeled as fraud or legitimate.

The semi supervised learning procedure involves:

- Training the initial model on labeled transaction data to recognize fraud patterns.

- Predicting labels on unlabeled transactions, keeping only high-confidence predictions.

- Expanding the training dataset with these confident predictions to retrain the model, enabling the detection of new and diverse fraud types.

Challenges And Limitations Of Semi Supervised Learning

When applying semi supervised learning, businesses and researchers often face several key challenges. Understanding these challenges is essential for developing and deploying models more effectively in real-world scenarios.

Data Quality Issues

In semi supervised learning, data quality plays a crucial role in determining model effectiveness. When either the labeled or the unlabeled data contains noise, inaccuracies, or misleading information, the model can learn incorrectly, leading to poor prediction results.

According to research published on ScienceDirect, low-quality unlabeled data can reduce the performance of machine learning models by 15% to 30% if not properly handled.

Scalability Challenges

In practice, applying semi supervised learning to extremely large datasets presents significant scalability challenges. Handling and training models on massive amounts of unlabeled data requires high computational resources and long processing times.

Balancing learning efficiency with computational cost is key to successfully deploying semi supervised learning at scale.

Model Interpretability

One notable limitation of semi supervised learning is the difficulty in interpreting and understanding how the model makes decisions. This poses challenges for businesses that require transparency or control over model decisions, especially in high-stakes domains like finance and healthcare.

Semi supervised models also add a layer of complexity because they learn from both labeled and unlabeled data, which makes their training and predictions harder to trace than those of traditional supervised models.

In Conclusion

If you’re still unsure about how to effectively apply semi supervised learning for your business, let MOR Software be your trusted partner. With our expertise and practical experience, MOR Software will help your business fully harness the potential of semi supervised learning to improve model performance and reduce labeling costs. Contact us today to explore tailored solutions and take your business further on the path of digital transformation.
