Top 8 Big Data Platforms And Tools For Growth In 2026

Are you looking for a reliable big data platform to handle your organization’s growing data? In today’s data-driven world, choosing the right big data analytics platform is critical to unlocking insights and making strategic decisions. This article will help you explore, compare, and better understand the most popular platforms available today.

What Is A Big Data Platform?

A big data platform is an integrated system that combines tools, technologies, and architecture designed to store, process, and analyze massive volumes of data efficiently. These platforms allow businesses to collect data from multiple sources, perform batch or real-time processing, and extract meaningful insights to support decision-making.

In the digital era, big data software plays a critical role in helping organizations understand customer behavior, optimize operations, and predict future trends. A big data platform is more than just data storage: it brings together analytics tools and features that enable fast, scalable, and intelligent data analysis.

Implementing the right platform empowers businesses to seize market opportunities faster, stay ahead of competitors, and drive long-term growth through data-driven strategies.


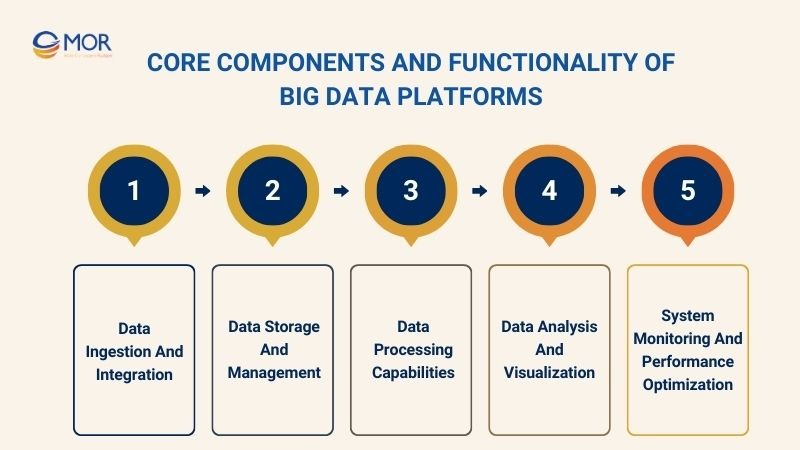

Core Components And Functionality Of Big Data Platforms

To operate effectively, a big data platform must be built on several core components, each playing a vital role in the data processing and analytics lifecycle. In this section, we will explore the key functions of this platform:

Data Ingestion And Integration

One of the first and most critical steps in any big data platform is ingesting and integrating data from multiple sources. In a modern organization, data can originate from hundreds of systems, including websites, mobile applications, IoT devices, internal databases, and cloud services.

Bringing these diverse data streams into a unified analytics system requires the platform to support flexible and robust connectivity. To achieve this, big data tools such as Apache NiFi, Talend, and Kafka are often integrated directly into the platform to automate the process of data collection, transformation, and cleansing.
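To make the transformation and cleansing steps more concrete, here is a minimal Python sketch. It assumes two hypothetical sources, a web clickstream and a mobile app, that report the same events under different field names; the field names, the shared schema, and the deduplication key are illustrative and are not taken from NiFi, Talend, or Kafka.

```python
from datetime import datetime, timezone

# Hypothetical per-source field mappings: source field name -> shared schema field name.
FIELD_MAPS = {
    "web": {"uid": "user_id", "ts": "timestamp", "evt": "event"},
    "mobile": {"userId": "user_id", "eventTime": "timestamp", "action": "event"},
}


def normalize(source, record):
    """Map one raw record onto the shared schema, or return None if it is unusable."""
    mapping = FIELD_MAPS[source]
    row = {target: record.get(src) for src, target in mapping.items()}
    # Cleansing: drop records that are missing any required field.
    if any(value is None for value in row.values()):
        return None
    # Transformation: epoch seconds -> ISO-8601 UTC, trimmed lower-case event names.
    row["timestamp"] = datetime.fromtimestamp(row["timestamp"], tz=timezone.utc).isoformat()
    row["event"] = str(row["event"]).strip().lower()
    row["source"] = source
    return row


def ingest(records):
    """Normalize (source, record) pairs and drop duplicates reported by several sources."""
    seen, unified = set(), []
    for source, record in records:
        row = normalize(source, record)
        if row is None:
            continue
        key = (row["user_id"], row["timestamp"], row["event"])
        if key not in seen:
            seen.add(key)
            unified.append(row)
    return unified


events = ingest([
    ("web", {"uid": "u1", "ts": 0, "evt": " View "}),
    ("mobile", {"userId": "u1", "eventTime": 0, "action": "view"}),  # duplicate after normalization
    ("mobile", {"userId": "u2", "eventTime": 60}),  # no event name, so it is dropped
])
print(events)
```

The deduplication key deliberately ignores the source, so the same event reported by both the website and the app is kept only once.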

Data Storage And Management

After data is ingested into the system, the next essential phase is efficient, secure, and scalable storage and management. A modern big data platform must support multiple storage models, ranging from structured data in relational databases to unstructured content such as logs, videos, or documents.

Common storage systems such as Hadoop HDFS, Amazon S3, or Google Cloud Storage are often used as the foundational storage layer. These systems offer distributed storage, high availability, scalability, and cost efficiency.

Data Processing Capabilities

Once data is securely stored, the platform must provide powerful processing capabilities to enable analytics and business operations. Today’s big data analytics platforms support two key forms of processing: batch and real-time.

Batch processing is suitable for deep analytical tasks such as generating financial reports or analyzing historical user behavior. On the other hand, real-time processing is critical for scenarios that require instant responses, such as fraud detection or personalized product recommendations.

Big data tools like Apache Spark, Flink, or Storm are commonly integrated to ensure high-speed, scalable processing and efficient resource usage.
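The difference between the two processing styles can be sketched in a few lines of plain Python. This illustrates the concepts only and is not Spark, Flink, or Storm code: the batch function reads a complete, already-stored dataset, while the streaming class keeps running state and can react to each event as it arrives. The account/amount event shape and the alert threshold are hypothetical.

```python
from collections import defaultdict


def batch_totals(events):
    """Batch: process the complete, already-stored dataset in one pass."""
    totals = defaultdict(float)
    for event in events:
        totals[event["account"]] += event["amount"]
    return dict(totals)


class StreamingTotals:
    """Streaming: update running state per event and react immediately."""

    def __init__(self, alert_threshold):
        self.totals = defaultdict(float)
        self.alert_threshold = alert_threshold

    def on_event(self, event):
        self.totals[event["account"]] += event["amount"]
        # Return True as soon as an account crosses the threshold,
        # instead of waiting for the next scheduled batch run.
        return self.totals[event["account"]] > self.alert_threshold


events = [
    {"account": "a", "amount": 40.0},
    {"account": "b", "amount": 10.0},
    {"account": "a", "amount": 70.0},
]
report = batch_totals(events)
stream = StreamingTotals(alert_threshold=100.0)
alerts = [stream.on_event(e) for e in events]
```

Both approaches end with the same totals; the streaming version simply detects the threshold breach at the moment the third event arrives.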

Data Analysis And Visualization

True value from big data is realized when organizations can extract insights through powerful analytics and intuitive visualizations. Leading big data analytics platforms offer capabilities such as descriptive, predictive, and even prescriptive analytics.

Depending on business needs, the platform can be integrated with business intelligence tools such as Power BI, Tableau, or Looker, and can support AI/ML-driven models. Strong visualization capabilities, including charts, dashboards, and tables, help non-technical users interact with data easily.

System Monitoring And Performance Optimization

As data volumes grow, a big data platform must remain stable, efficient, and responsive. System monitoring plays a vital role in detecting early performance issues, resource constraints, or system failures.

Popular big data tools such as Prometheus, Grafana, or the Elastic Stack are frequently integrated to provide real-time dashboards and automated alerts. These tools enable teams to manage platform health and optimize resource usage proactively.
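Automated alerts usually fire only when a problem persists, so that a team is not paged for a single spike. The sketch below illustrates that idea in plain Python, loosely mirroring the `for` duration of a Prometheus alerting rule; it is not Prometheus code, and the CPU-utilization samples and thresholds are hypothetical.

```python
def firing_points(samples, threshold, for_samples):
    """Return the sample indices at which an alert is firing.

    The alert fires only after the metric has stayed above `threshold`
    for `for_samples` consecutive readings, and resets on any normal reading.
    """
    firing, streak = [], 0
    for index, value in enumerate(samples):
        streak = streak + 1 if value > threshold else 0
        if streak >= for_samples:
            firing.append(index)
    return firing


# Hypothetical CPU utilization readings scraped once per minute.
cpu = [0.50, 0.92, 0.95, 0.97, 0.60, 0.93]
alerts = firing_points(cpu, threshold=0.90, for_samples=3)
```

The short spike at the end of the series does not trigger an alert because it has not yet lasted three readings.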


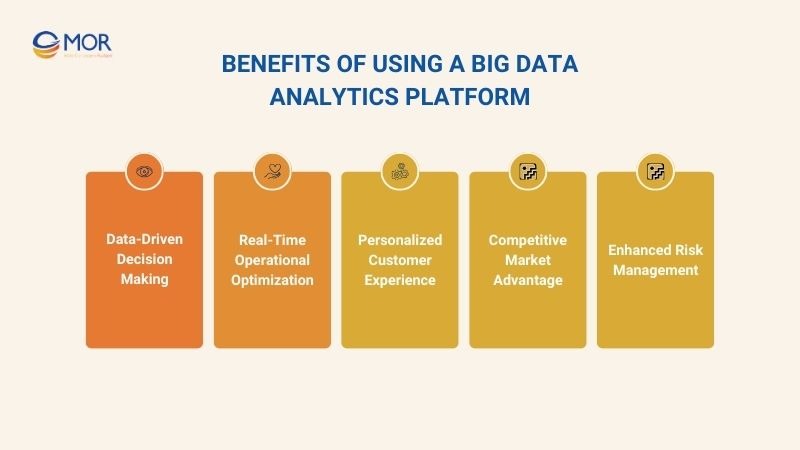

Benefits Of Using A Big Data Analytics Platform

Investing in a big data analytics platform not only enables enterprises to process and store massive volumes of data but also delivers long-term strategic value. Below are the key benefits that a big data platform brings to today’s data-driven organizations:

Data-Driven Decision Making

One of the most significant advantages of implementing a big data analytics platform is its ability to support data-driven decision making. Modern data platforms enable organizations to collect, process, and analyze data in real time from multiple sources, automating tasks that were once handled manually.

By aggregating and structuring this data, businesses can make faster and more informed decisions. According to research published in Harvard Business Review, companies in the top third of their industry in the use of data-driven decision making were, on average, 5% more productive and 6% more profitable than their competitors.

Real-Time Operational Optimization

In today's fast-paced business environment, even a few seconds of delay can result in significant losses. Real-time data processing has become mission-critical. According to Gartner, organizations that implement real-time analytics reduce their mean time to resolution (MTTR) by an average of 65% compared to those using traditional monitoring approaches.

Modern big data platforms provide the ability to collect and analyze data in real time. This allows businesses to respond instantly to changes and continuously optimize operational processes.

Personalized Customer Experience

Big data analytics platforms empower businesses to collect multichannel data and build a complete customer profile, enabling highly personalized experiences.

For example, a cosmetics company implemented a big data platform to personalize the shopping journey. The platform gathered information from:

- Purchase history: product types, purchase frequency, and shopping times

- User surveys: skin type (oily, dry, sensitive), skin tone, skincare goals (acne treatment, whitening, anti-aging)

- Website interactions: previously viewed products, articles read, submitted reviews

With this data, the system created detailed customer personas, such as “female, sensitive skin, living in a dry climate, prefers organic products.”

Based on the profile, the platform automatically:

- Recommends products tailored to each skin type and goal

- Sends personalized promotions at the right time (e.g., moisturizing cream discounts when colder weather is forecasted)

- Customizes the website interface: each homepage displays different categories based on the user’s behavior

This level of customer personalization enhances the user experience and helps optimize marketing costs across every campaign.
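The recommendation step in this example can be sketched as a simple rule-based scorer. This is a minimal illustration under assumed data, not the company's actual system: the `Profile` fields, the catalog entries, and the scoring weights are all hypothetical, and a production platform would more likely use a trained recommendation model.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    skin_type: str           # e.g. "oily", "dry", "sensitive"
    goals: set               # e.g. {"acne treatment", "hydration"}
    prefers_organic: bool = False


# Hypothetical catalog entries.
CATALOG = [
    {"name": "Gentle Oat Cleanser", "skin_types": {"sensitive", "dry"}, "goals": {"hydration"}, "organic": True},
    {"name": "Salicylic Acne Gel", "skin_types": {"oily"}, "goals": {"acne treatment"}, "organic": False},
    {"name": "Aloe Moisturizer", "skin_types": {"sensitive"}, "goals": {"hydration", "anti-aging"}, "organic": True},
    {"name": "Retinol Night Serum", "skin_types": {"dry", "oily"}, "goals": {"anti-aging"}, "organic": False},
]


def recommend(profile, catalog, limit=3):
    """Rank products that suit the customer's skin type by goal overlap and preferences."""
    scored = []
    for product in catalog:
        if profile.skin_type not in product["skin_types"]:
            continue  # never recommend products unsuitable for this skin type
        score = len(profile.goals & product["goals"])
        if profile.prefers_organic and product["organic"]:
            score += 1
        if score > 0:
            scored.append((score, product["name"]))
    # Highest score first; ties broken alphabetically for a stable order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in scored[:limit]]


customer = Profile(skin_type="sensitive", goals={"hydration"}, prefers_organic=True)
picks = recommend(customer, CATALOG)
```

Skin type acts as a hard filter while goals and preferences only affect ranking, which keeps unsuitable products out of the results entirely.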

Competitive Market Advantage

In a rapidly evolving market landscape, effectively leveraging data can help businesses stay one step ahead of the competition. 74% of companies acknowledge that their competitors are already using big data analytics to significantly differentiate themselves in the eyes of customers, media, and investors.

A powerful big data platform provides deep insights into industry trends, consumer behavior, and even competitor strategies. With this level of visibility, business leaders can make faster, more accurate, and more disruptive decisions that drive long-term market advantage.

Enhanced Risk Management

Risk is an unavoidable part of running any business. However, with a well-implemented big data analytics platform, organizations can proactively detect and mitigate potential threats before they escalate.

A typical example can be seen in the financial and banking sector, where a company deploys a big data platform to monitor transaction-related risks. The system collects real-time data from millions of daily transactions, combining it with auxiliary sources such as credit histories, fraud databases, user behavior, and market fluctuations.

Using advanced analytics algorithms and machine learning models, the platform can:

- Detect abnormal behavior patterns, such as irregular transaction frequency, logins from unfamiliar locations, or suspicious use of card information

- Automatically trigger alerts to risk management teams, enabling them to intervene before actual damage occurs

- Forecast emerging risk trends, such as rising default rates, credit risk, or cybersecurity threats
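Two of the checks in the list above, an unusual transaction amount and an unfamiliar location, can be sketched with basic statistics. This is a simplified stand-in for the machine learning models the text describes: it uses a z-score against the customer's own history, and the field names and the 3.0 cutoff are hypothetical.

```python
from statistics import mean, stdev


def risk_flags(history, txn, z_limit=3.0):
    """Return the reasons a transaction looks abnormal compared with past transactions."""
    reasons = []
    amounts = [past["amount"] for past in history]
    if len(amounts) >= 2:  # stdev needs at least two data points
        spread = stdev(amounts)
        if spread > 0 and abs(txn["amount"] - mean(amounts)) / spread > z_limit:
            reasons.append("unusual amount")
    if txn["country"] not in {past["country"] for past in history}:
        reasons.append("unfamiliar location")
    return reasons


history = [{"amount": a, "country": "VN"} for a in (20, 25, 30, 22, 28)]
flags = risk_flags(history, {"amount": 500, "country": "BR"})
if flags:
    print("Alert risk team:", ", ".join(flags))
```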


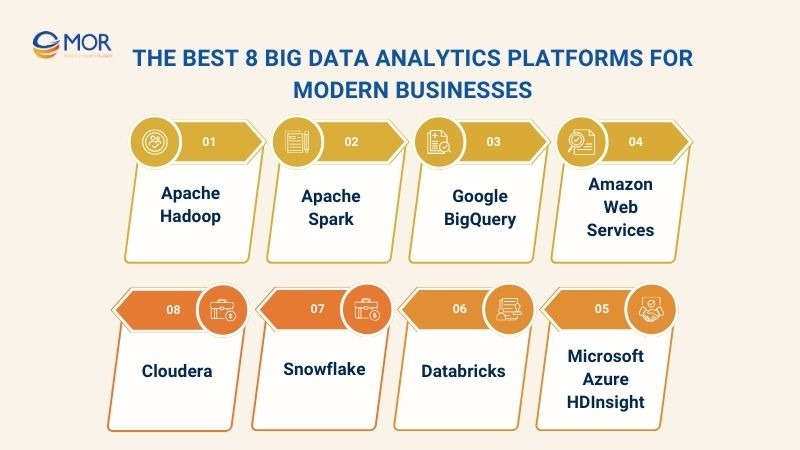

The Best 8 Big Data Analytics Platforms For Modern Businesses

In the era of digital data, choosing the right big data analytics platform is a critical step for modern businesses to fully unlock the power of their data. Below is a list of the 8 best big data platforms available today, each offering unique strengths tailored to different business needs and operational scales.

Apache Hadoop

When it comes to open-source big data analytics platforms, Apache Hadoop is almost always mentioned first. Designed for storing and processing massive volumes of unstructured data, Hadoop relies on batch processing, meaning data is collected over time and processed in bulk rather than instantly.

Key Benefits:

- Cost-effective: Can be deployed on commodity hardware without the need for expensive infrastructure.

- Open-source ecosystem: Backed by a large developer community and integrated with various big data tools like Hive (SQL-like queries), Pig (data scripting), and HBase (NoSQL database).

Limitations:

- Not suitable for real-time data workloads, since it requires data accumulation before processing.

- Requires technical expertise to deploy, scale, and optimize across distributed systems.

Use Case: A major telecom company uses Apache Hadoop as its core data platform to analyze system logs generated by millions of endpoint devices. The logs, which include connection errors, latency metrics, and network usage by region, are continuously recorded 24/7.

Instead of processing logs immediately, the company batches all logs over a 6-hour window, stores them in HDFS, and then runs MapReduce jobs to analyze:

- Areas with high connection failure rates.

- Devices most prone to network issues.

- Peak traffic hours that cause network congestion.

Insights are compiled into end-of-day reports to help the engineering team identify infrastructure bottlenecks, schedule maintenance, and optimize network performance in problematic regions.
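The MapReduce job that finds areas with high connection failure rates can be sketched as a single-process Python simulation of the map, shuffle, and reduce phases. A real Hadoop job would run these phases in parallel across the cluster (for example through the Java MapReduce API or Hadoop Streaming); the comma-separated log format used here is an assumption.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: turn one log line 'area,device_id,status' into (area, (failed, total))."""
    area, _device_id, status = line.strip().split(",")
    yield area, (1 if status == "FAIL" else 0, 1)


def shuffle(pairs):
    """Shuffle: group mapped values by key, as Hadoop does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(area, values):
    """Reduce: combine all counts for one area into a failure rate."""
    failed = sum(f for f, _ in values)
    total = sum(t for _, t in values)
    return area, failed / total


def failure_rates(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(area, values) for area, values in shuffle(mapped).items())


logs = ["north,d1,OK", "north,d2,FAIL", "south,d3,OK", "north,d1,FAIL"]
rates = failure_rates(logs)
```

Emitting (failed, total) pairs rather than a ready-made rate lets the reducer combine partial counts correctly, which is what makes the computation parallelizable.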

Apache Spark

Apache Spark is a high-performance big data analytics platform known for its in-memory computing capabilities. Unlike Hadoop MapReduce, which is limited to batch processing, Spark supports both batch workloads and near-real-time stream processing, making it well suited to time-sensitive analytics and fast decision-making.

Pros:

- Lightning-fast performance: Spark processes data in memory (RAM) and, for some in-memory workloads, can run up to 100x faster than Hadoop MapReduce.

- Robust ecosystem: Offers built-in tools like MLlib for machine learning, GraphX for graph processing, and Spark Streaming for real-time data pipelines.

Cons:

- High system resource demands: Requires substantial RAM and CPU power, which can increase infrastructure costs if not optimized.

- Complex deployment: Running Spark at scale in distributed environments requires a skilled team experienced in big data infrastructure and parallel processing.

Use Case: A major digital bank uses Apache Spark Streaming to detect fraudulent transactions in real time. The system ingests live data from credit card transactions, capturing location, device ID, transaction amount, and past user behavior.

Spark processes this stream with low latency and feeds each transaction into a pre-trained machine learning model built with MLlib to:

- Detect anomalies like unfamiliar locations, unusually high transaction amounts, or deviations from the user's normal activity.

- Flag high-risk transactions as “suspicious” and trigger alerts to the bank’s risk control team immediately.

- Continuously learn from new data to improve model accuracy and reduce false positives.
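Spark Streaming handles a live stream as a sequence of small micro-batches. The plain-Python sketch below imitates that pattern and scores each transaction with a logistic function; the weights, bias, feature names, batch size, and 0.8 threshold are hypothetical stand-ins for a model trained offline with MLlib, and none of this is Spark API code.

```python
import math

# Hypothetical coefficients standing in for a model trained offline.
WEIGHTS = {"amount_ratio": 1.2, "new_device": 2.0, "foreign_location": 1.5}
BIAS = -4.0


def fraud_probability(features):
    """Logistic score: sigmoid of the weighted sum of features plus the bias."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def micro_batches(stream, size):
    """Group an incoming event stream into fixed-size micro-batches."""
    batch = []
    for event in stream:
        batch.append(event)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final, partially filled batch


def suspicious_ids(stream, batch_size=2, threshold=0.8):
    """Score every event batch by batch and return the IDs of high-risk transactions."""
    flagged = []
    for batch in micro_batches(stream, batch_size):
        for event in batch:
            if fraud_probability(event["features"]) >= threshold:
                flagged.append(event["txn_id"])
    return flagged


# amount_ratio = transaction amount divided by the customer's usual amount (assumed feature).
stream = iter([
    {"txn_id": "t1", "features": {"amount_ratio": 1.0, "new_device": 0, "foreign_location": 0}},
    {"txn_id": "t2", "features": {"amount_ratio": 3.0, "new_device": 1, "foreign_location": 1}},
    {"txn_id": "t3", "features": {"amount_ratio": 1.5, "new_device": 1, "foreign_location": 0}},
])
flagged = suspicious_ids(stream)
```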

Google BigQuery

Google BigQuery is a serverless big data platform on Google Cloud Platform. It is designed to run lightning-fast SQL queries at scale without the burden of managing infrastructure, which makes it well suited for businesses that need to analyze terabytes to petabytes of data quickly and efficiently.

Key Advantages:

- Simple deployment: As a fully managed big data analytics platform, BigQuery eliminates the need for cluster setup or system maintenance. Just upload your data and start querying using SQL.

- High-speed performance: Capable of processing massive datasets in seconds.

- Ecosystem integration: Connects seamlessly with Google Sheets, Looker Studio, and other analytics tools in Google’s ecosystem.

Limitations:

- Limited infrastructure control: Since it’s a serverless platform, users can't customize or optimize low-level compute settings.

- Cost management: Under on-demand pricing, each query is billed by the amount of data it scans, so costs can escalate quickly with high-frequency or poorly written queries.

Use Case: An eCommerce company leverages Google BigQuery to analyze performance data across multiple marketing channels, including Google Ads, Facebook Ads, TikTok, and email campaigns. Every day, the platform ingests millions of events, such as ad impressions, clicks, purchases, and user sessions, from various sources.

The company uses BigQuery as its central big data analytics solution to:

- Run SQL queries over continuously ingested data to calculate click-through rates, cost-per-acquisition (CPA), and ROI by campaign.

- Feed the processed data into Looker Studio to build interactive marketing dashboards that update live throughout the day.

- Segment users based on region, device type, and traffic source to optimize targeting and ad spend.
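The campaign metrics above come down to a grouped SQL aggregation. The sketch below runs such a query against an in-memory SQLite database from Python's standard library so that it can be tried locally; the `ad_stats` table, its columns, and the ROI definition ((revenue - cost) / cost) are assumptions. The query sticks to standard SQL that is intended to work in BigQuery as well, and `NULLIF` guards against division by zero.

```python
import sqlite3

METRICS_SQL = """
SELECT campaign,
       1.0 * SUM(clicks) / NULLIF(SUM(impressions), 0)   AS ctr,
       SUM(cost) / NULLIF(SUM(conversions), 0)          AS cpa,
       (SUM(revenue) - SUM(cost)) / NULLIF(SUM(cost), 0) AS roi
FROM ad_stats
GROUP BY campaign
ORDER BY campaign
"""


def campaign_metrics(rows):
    """Load (campaign, impressions, clicks, conversions, cost, revenue) rows and compute metrics."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE ad_stats (campaign TEXT, impressions INTEGER, clicks INTEGER, "
            "conversions INTEGER, cost REAL, revenue REAL)"
        )
        conn.executemany("INSERT INTO ad_stats VALUES (?, ?, ?, ?, ?, ?)", rows)
        return conn.execute(METRICS_SQL).fetchall()
    finally:
        conn.close()


daily_rows = [
    ("email", 1000, 50, 5, 100.0, 400.0),
    ("search", 2000, 100, 10, 500.0, 1000.0),
    ("search", 2000, 60, 10, 300.0, 600.0),
]
metrics = campaign_metrics(daily_rows)
```

Summing before dividing gives correctly weighted ratios per campaign; averaging per-row ratios would overweight small rows.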

Amazon Web Services (AWS)

Amazon Web Services (AWS) offers a broad ecosystem of big data services with end-to-end capabilities, including storage (Amazon S3), data integration and ETL (AWS Glue), and analytics (Amazon Redshift). With flexible scalability and integration, AWS enables businesses to build versatile data platforms tailored to any industry or size.

Key Advantages:

- Enterprise-grade infrastructure: Offers a wide range of services with global scalability and high availability.

- AI/ML integration: Seamlessly connects with services like SageMaker, making it ideal for advanced big data analytics solutions.

Challenges:

- Steep learning curve: Initial setup and configuration can be complex without prior experience with cloud services.

- Cost management: Without a well-structured usage strategy, cloud costs may scale unpredictably.

Use Case: A leading Southeast Asian e-commerce company leverages the AWS big data platform ecosystem to build a real-time big data analytics solution for tracking and understanding user behavior at scale.

Implementation Workflow:

- Amazon Kinesis collects real-time event streams from websites and mobile apps, such as product views, cart abandonment, search queries, and scrolling behavior.

- AWS Glue performs ETL operations on the ingested data every few minutes, standardizing and loading it into Amazon S3 for long-term storage.

- Amazon Redshift runs complex queries to answer key business questions such as:

  - Which regions have the lowest conversion rates?

  - What times of day see the highest cart abandonment?

  - Which product combinations are frequently searched together?

Insights from Redshift are visualized using Amazon QuickSight, offering real-time dashboards to the marketing and product teams.
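In the described pipeline, the cart abandonment question would be answered by a SQL query in Redshift. The same logic is sketched here in plain Python for readability; the event types (`add_to_cart`, `checkout`), the field names, and the choice to attribute each cart to the UTC hour in which it was created are assumptions.

```python
from collections import defaultdict
from datetime import datetime, timezone


def abandonment_by_hour(events):
    """Share of carts created in each UTC hour that never reached checkout."""
    carts = {}  # cart_id -> [hour the cart was created, checked_out flag]
    for event in sorted(events, key=lambda e: e["ts"]):
        cart_id = event["cart_id"]
        if event["type"] == "add_to_cart" and cart_id not in carts:
            hour = datetime.fromtimestamp(event["ts"], tz=timezone.utc).hour
            carts[cart_id] = [hour, False]
        elif event["type"] == "checkout" and cart_id in carts:
            carts[cart_id][1] = True
    started, abandoned = defaultdict(int), defaultdict(int)
    for hour, checked_out in carts.values():
        started[hour] += 1
        if not checked_out:
            abandoned[hour] += 1
    return {hour: abandoned[hour] / started[hour] for hour in sorted(started)}


events = [
    {"cart_id": "c1", "type": "add_to_cart", "ts": 9 * 3600},
    {"cart_id": "c1", "type": "checkout", "ts": 9 * 3600 + 300},
    {"cart_id": "c2", "type": "add_to_cart", "ts": 9 * 3600 + 60},
    {"cart_id": "c3", "type": "add_to_cart", "ts": 20 * 3600},
]
rates = abandonment_by_hour(events)
```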

Microsoft Azure HDInsight

Microsoft Azure HDInsight is a fully managed big data analytics platform built on the Azure cloud. It supports popular open-source frameworks such as Hadoop, Spark, Hive, and Kafka. HDInsight is particularly well-suited for enterprises already using Microsoft technologies, thanks to deep integration with Power BI, Excel, Azure Synapse, and Active Directory.

Pros:

- Multi-framework support: Easily deploys batch processing with Hadoop and Hive, or real-time streaming with Kafka and Spark Streaming.

- Strong Microsoft ecosystem integration: Seamlessly connects with Power BI for reporting, and Active Directory for secure user access and role-based permissions.

Cons:

- Smaller user community: Compared to AWS or Google Cloud, fewer community resources and third-party tutorials are available.

- Limited infrastructure customization: Less flexible for hybrid or multi-cloud deployments compared to other big data tools.

Use Case: A major retail chain in Europe implemented Azure HDInsight as part of its big data analytics solution to process and analyze financial data generated by its Microsoft Dynamics 365 ERP system.

Implementation steps:

- HDInsight Spark was used to handle large volumes of data, including invoices, sales orders, POS transactions, and inventory records from Dynamics 365.

- The data was cleaned, normalized, and aggregated daily, then stored securely in Azure Data Lake Storage.

- Power BI was connected directly to the data lake to generate automated financial reports.

- Integration with Active Directory ensured that different departments (finance, audit, and regional managers) could securely access reports based on assigned roles.
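Role-based access of this kind boils down to mapping directory roles to the reports each role may open. The sketch below is a hypothetical illustration in plain Python: the role and report names are invented, and in the described setup the mapping would come from Active Directory groups and be enforced by Power BI and Azure rather than by application code like this.

```python
# Hypothetical mapping from directory roles to the reports they may open.
ROLE_PERMISSIONS = {
    "finance": {"profit_and_loss", "invoices", "inventory"},
    "audit": {"profit_and_loss", "invoices"},
    "regional_manager": {"inventory"},
}


def can_view(user_roles, report):
    """A user may open a report if any of their roles grants access to it."""
    return any(report in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def visible_reports(user_roles, reports):
    """Filter a report list down to what the user is allowed to see (deny by default)."""
    return [report for report in reports if can_view(user_roles, report)]


auditor_view = visible_reports(["audit"], ["profit_and_loss", "inventory", "payroll"])
```

Unknown roles and unlisted reports fall through to "no access", which is the safer default for financial data.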

Databricks

Databricks is an advanced big data tool that combines the capabilities of a data lake and data warehouse into a unified architecture known as a Lakehouse. It is one of the most powerful big data analytics platforms for enterprises focusing on AI, machine learning (ML), and large-scale data engineering workflows.

Pros:

- Enables advanced analytics, AI model training, and full data lifecycle management.

- Real-time collaboration through user-friendly notebooks (similar to Jupyter), ideal for cross-functional teams.

Cons:

- Requires a technically skilled team to deploy and operate effectively.

- High operational cost at scale if data processing jobs are not optimized.

Use Case: A fast-growing fintech company used Databricks as its primary big data analytics platform to build and train a machine learning model for credit scoring. The model leverages hundreds of features, including:

- Consumer behavior data (spending frequency, payment patterns)

- Financial data (income, debt ratio, loan history)

- Social signals (e.g., employment trends, geolocation context)

Implementation highlights:

- Raw data from multiple sources was ingested and processed in parallel using Databricks’ scalable Spark engine.

- Feature engineering and model training were done collaboratively in notebooks by data scientists and analysts.

- The final ML model predicted creditworthiness in near real-time, allowing the fintech to offer more tailored lending options while significantly lowering bad debt risk.
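As a rough illustration of the feature engineering step, the sketch below derives a few credit features from one applicant's raw financial data. It is plain Python for a single record; in Databricks the same logic would typically run as a Spark job across many applicants. The field names, the features, and the six-payment "thin file" cutoff are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    monthly_income: float
    monthly_debt_payments: float
    payments_made: int
    payments_on_time: int


def build_features(applicant: Applicant) -> dict:
    """Derive model-ready features from raw financial data for one applicant."""
    if applicant.monthly_income > 0:
        debt_ratio = applicant.monthly_debt_payments / applicant.monthly_income
    else:
        debt_ratio = float("inf")  # no reported income: treat debt burden as unbounded
    on_time_rate = (
        applicant.payments_on_time / applicant.payments_made if applicant.payments_made else 0.0
    )
    return {
        "debt_ratio": debt_ratio,
        "on_time_rate": on_time_rate,
        # Hypothetical cutoff: fewer than 6 recorded payments means a thin credit file.
        "thin_file": applicant.payments_made < 6,
    }


features = build_features(Applicant(5000.0, 1500.0, payments_made=24, payments_on_time=22))
```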

Snowflake

Snowflake is a cloud-native big data analytics platform that separates data storage from compute resources, allowing organizations to scale each independently. It is known for its high concurrency, multi-cloud capabilities, and secure data sharing between departments and even external organizations.

Pros:

- Fully managed and easy to use — no need to manage or optimize clusters manually.

- Supports cross-cloud analytics across AWS, Azure, and Google Cloud.

- Seamless data sharing and collaboration across teams and partners.

Cons:

- Not ideal for real-time data processing; better suited for scheduled or ad-hoc queries.

- Costs can increase rapidly with high or unmonitored compute workloads.

Use Case: A global retail chain implemented Snowflake to unify inventory, sales, and supply chain data from over 10 countries into a centralized big data platform.

Implementation highlights:

- All data from e-commerce transactions, point-of-sale systems, and supplier databases was loaded into Snowflake through automated Fivetran pipelines and then transformed with dbt.

- Business analysts used SQL to build dynamic forecasting dashboards for stock replenishment based on near-real-time demand signals.

- The company enabled secure data sharing with logistics partners to proactively align warehousing and delivery schedules.
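As an illustration of the kind of calculation behind a stock replenishment dashboard, here is a simple moving-average reorder rule in Python: average recent daily demand, cover the supplier lead time plus a safety buffer, and order the shortfall. The window length, safety days, and inputs are assumptions rather than details of the retailer's actual model, and in Snowflake the same calculation would usually be written in SQL.

```python
import math


def reorder_quantity(daily_sales, on_hand, lead_time_days, safety_days=2, window=7):
    """Units to order so stock covers expected demand over lead time plus a safety buffer."""
    recent = daily_sales[-window:]
    if not recent:
        raise ValueError("need at least one day of sales history")
    avg_daily_demand = sum(recent) / len(recent)
    target_stock = avg_daily_demand * (lead_time_days + safety_days)
    return max(0, math.ceil(target_stock - on_hand))


sales = [10, 12, 8, 10, 10, 10, 10]  # hypothetical units sold per day
order = reorder_quantity(sales, on_hand=35, lead_time_days=3)
```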

Cloudera

Cloudera is a robust big data analytics platform tailored for enterprises with strict security and compliance requirements. Its support for hybrid cloud deployments is particularly suitable for regulated industries such as finance, healthcare, and government.

Pros:

- High-level data security and full control over data governance.

- Flexible deployment across on-premise, private, and public cloud environments.

Cons:

- Complex to deploy and operate; requires an experienced technical team.

- High resource demands and maintenance costs.

Use Case: A large commercial bank adopted Cloudera to build a secure data analytics platform for storing and analyzing sensitive financial information such as transaction history, credit scores, and personally identifiable data.

Implementation highlights:

- Deployed a hybrid setup using Cloudera Data Platform (CDP) with secure clusters on-premise for high-risk data and scalable cloud storage for less-sensitive workloads.

- Integrated with the bank’s internal identity and access management systems to enforce strict role-based access controls.

- Enabled advanced analytics on customer credit profiles and transaction patterns using Apache Hive and Spark within Cloudera.

| Criteria | Apache Hadoop | Apache Spark | Google BigQuery | AWS | Azure HDInsight | Databricks | Snowflake | Cloudera |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Primary Processing Type | Batch processing | Batch + Real-time streaming | SQL-based batch queries | Batch + Near real-time | Batch + Real-time | Batch + Streaming (Lakehouse model) | Batch (SQL engine) | Batch + Streaming |
| Real-time Support | No | Yes | Near real-time (limited) | Yes | Yes | Yes | Limited | Yes |
| Deployment Model | Self-managed (on-premise or cloud DIY) | On-premise / Cloud | Fully serverless (Google Cloud) | Fully managed cloud (AWS) | Azure cloud | Multi-cloud (AWS, Azure, GCP) | Fully cloud-native (multi-cloud) | Hybrid (on-premise + cloud) |
| Ease of Use | Difficult, requires strong technical team | Moderate, needs tuning | Very easy (SQL-based, Google integrations) | Moderate, AWS knowledge required | Moderate, Microsoft ecosystem friendly | Moderate, notebook UI helps | Very easy, minimal setup | Complex to deploy and manage |
| AI/ML Support | Limited | Strong (MLlib, GraphX) | BigQuery ML (SQL-based) plus external integrations | Good (SageMaker, native ML tools) | Via Spark ML | Excellent, built for ML/AI workflows | Via external tools | Supported, strong enterprise use cases |
| Key Strengths | Open-source, low infrastructure cost | Fast processing, real-time capable | Easy to use, high-speed querying | Rich ecosystem, global scalability | Deep Power BI & Active Directory integration | Lakehouse architecture, team collaboration | Data sharing, cross-cloud support | High security & compliance |
| Main Weaknesses | No real-time, complex to operate | Resource-heavy, complex at scale | Low infra control, costly if misused | Complex setup, cost control issues | Smaller community, less infra flexibility | Expensive if not optimized | Not ideal for real-time use | High infra demands, steep learning curve |
| Typical Use Case | Telecom log batch analysis | Real-time credit card fraud detection | Marketing dashboards in Looker Studio | Near real-time e-commerce behavior analysis | Automated financial reporting from Dynamics 365 | AI model training for credit scoring | Multi-country retail data shared with logistics partners | Regulatory-compliant financial analytics |


Key Characteristics Of Big Data Platforms

To build or choose an effective big data analytics platform, businesses must first understand its three core characteristics. Recognizing and optimizing for these characteristics is crucial to improving analytical performance and making faster, data-driven decisions.

Volume

Volume refers to the total amount of data that a big data platform must store and process. In today’s digital businesses, this can range from hundreds of gigabytes to multiple petabytes. An ideal data architecture should scale efficiently without compromising performance.

Real-world example: A large e-commerce site like Shopee or Lazada stores billions of user interactions, product clicks, search queries, abandoned carts, reviews, and images. Platforms at this scale typically rely on distributed object storage such as Amazon S3 and on analytics engines such as Redshift or Databricks.

If your platform can’t handle Volume:

- Systems may crash or freeze when storage capacity is exceeded.

- Incomplete analytics due to missing data, leading to wrong business decisions.

- Increased cost and inefficiency from repeated or fragmented data processing.

Velocity

Velocity is the rate at which data is generated, ingested, and processed, especially for real-time or near real-time analytics. In sectors like banking, ride-hailing, or digital commerce, processing speed is mission-critical.

Real-world example:

A digital bank uses Apache Spark Streaming and Kafka to detect credit card fraud. When a suspicious transaction occurs (e.g., unusual location or amount), the system flags it and sends alerts within seconds.

If your platform can’t handle Velocity:

- Fraud or errors go undetected until it is too late, causing major financial losses.

- Slow system response leads to poor customer experience (e.g., OTP delays).

- Missed opportunities in time-sensitive markets like fintech or e-commerce.

Variety

Variety refers to a platform’s ability to handle multiple data types — structured (SQL), semi-structured (JSON), unstructured (images, logs), or real-time sensor data — often coming from various disconnected sources.

Real-world example:

A logistics company like DHL or GHN deals with:

- GPS data from delivery trucks (real-time IoT),

- Invoices from ERP systems (structured SQL),

- Driver-submitted documents (images),

- Chatbot logs from customer service (text).

They use a Databricks Lakehouse platform to unify all formats for AI-driven delivery optimization.

If your platform can’t handle Variety:

- Data gets siloed, and each type requires different tools and manual effort.

- Poor integration, incomplete insights, and missed opportunities.

- Difficult to train AI models effectively due to fragmented or inconsistent data.


Challenges In Implementing Big Data Analytics

While big data analytics platforms offer powerful insights and competitive advantages, their implementation often comes with significant challenges.

Lack Of Skilled Talent

Although modern big data platforms are becoming increasingly accessible, the lack of skilled talent remains one of the biggest obstacles for businesses. Running a robust big data analytics platform requires a specialized team, including data engineers, analytics experts, and system architects.

These roles are often difficult to hire and train for, especially in developing markets where highly qualified professionals are in short supply. According to a survey by BARC, nearly 50% of companies report that the shortage of analytical and technical skills is a major challenge when implementing big data analytics solutions.

Exponential Data Growth

Today, businesses are not only dealing with massive volumes of data; that data is also growing at an exponential rate. To keep up, modern big data analytics platforms must constantly scale their storage and compute infrastructure.

The data influx comes from various sources such as websites, mobile applications, IoT devices, and social media streams. Without a scalable big data platform, organizations risk serious bottlenecks.

Data Quality And Integrity

Even the most advanced big data analytics platforms can fail if the input data is inaccurate, unstructured, or duplicated. In real-world scenarios, enterprises often collect data from multiple sources. Without a robust process for data cleansing and normalization, any big data analytics solution is at risk of producing misleading results, leading to poor business decisions.

Example: A major supermarket chain deployed a big data analytics platform to analyze purchasing behavior and optimize inventory by region. The data originated from multiple sources: CRM systems, in-store POS machines, online orders, and IoT sensors in warehouses.

However, due to a lack of data standardization, the same product was entered with different identifiers (e.g., "Soft drink - 330ml can" vs "Drink_330ml_Can"). As a result, the data platform generated inaccurate reports on inventory and sales performance.
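A common fix is to map every raw product name to a canonical identifier before analysis. The sketch below normalizes case and punctuation and then looks the result up in an alias table; the `SKU-001` identifier and the alias table are hypothetical, and names that match nothing are set aside for manual review rather than guessed.

```python
import re
from collections import defaultdict

# Hypothetical master-data alias table: normalized name -> canonical SKU.
ALIASES = {
    "soft drink 330ml can": "SKU-001",
    "drink 330ml can": "SKU-001",
}


def normalize_name(raw):
    """Lower-case, replace every run of non-alphanumeric characters with one space, trim."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", raw.lower()).split())


def to_sku(raw):
    """Return the canonical SKU for a raw product name, or None if it is unknown."""
    return ALIASES.get(normalize_name(raw))


def totals_by_sku(sales):
    """Sum quantities per canonical SKU and collect names that need manual review."""
    totals, unmatched = defaultdict(int), []
    for name, quantity in sales:
        sku = to_sku(name)
        if sku is None:
            unmatched.append(name)
        else:
            totals[sku] += quantity
    return dict(totals), unmatched


totals, review = totals_by_sku([
    ("Soft drink - 330ml can", 3),
    ("Drink_330ml_Can", 2),
    ("Water 500ml", 1),
])
```

Both spellings from the example now roll up to the same SKU, so inventory and sales reports count the product once.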

Regulatory And Compliance Risks

Organizations in regulated sectors such as finance, healthcare, and technology must adhere to strict data protection and security standards like GDPR, HIPAA, and ISO 27001. Deploying a big data software solution without considering these compliance frameworks can lead to severe legal violations, hefty fines, and long-term damage to brand reputation.

To avoid these risks, data platforms must be designed with compliance in mind from the ground up. A secure big data analytics platform helps ensure regulatory compliance while enabling safe and scalable data processing.

Integration Complexity

One of the most common barriers when deploying big data platforms is the complexity of integrating with existing systems. Without proper compatibility or available connectors, organizations often have to allocate additional manpower, time, and budget just to weave different systems together.

A Salesforce/MuleSoft study found that 84% of organizations experience stalled digital transformation initiatives due to integration hurdles.


In Conclusion

Implementing a successful big data platform goes beyond just choosing the right tools. It’s a strategic decision that involves people, processes, and data governance. Stay tuned for upcoming articles featuring the latest big data analytics trends and real-world case studies from enterprises around the world!
