History of AI: The Complete Visual Timeline of Key Discoveries

The history of AI shows how far we’ve come from mechanical toys to systems that generate text, drive cars, and make decisions. This guide from MOR Software traces the major breakthroughs in AI history and highlights how today’s businesses are putting AI to real use.

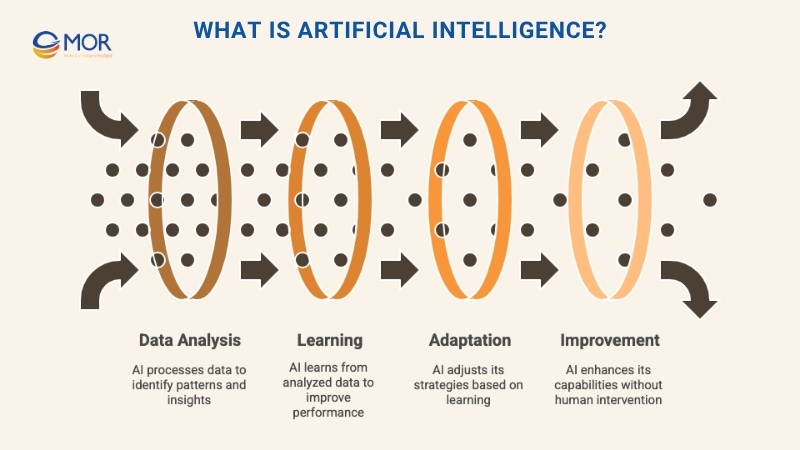

What Is Artificial Intelligence?

Artificial intelligence is a branch of computer science focused on building machines that can mimic how humans think and solve problems. Instead of relying on fixed instructions, these systems take in large amounts of data, learn from it, and adjust how they respond over time.

That’s the key difference. A regular program runs only the instructions it is given. An AI model, by contrast, can improve by recognizing patterns in data and past outcomes, without constant human tweaking. In 2025, 78% of organizations said they use AI in at least one business function, up from 72% in 2024.
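To make that contrast concrete, here is a minimal Python sketch. It is a toy illustration, not a production technique: the transaction amounts, the labels, and the function names `fixed_rule` and `learn_threshold` are all hypothetical. The fixed rule uses a threshold a programmer typed in by hand; the learned rule is a one-feature decision stump that tries each observed value as a threshold and keeps the one that misclassifies the fewest labeled examples.

```python
# Toy illustration: all names and data here are hypothetical.

def fixed_rule(amount: float) -> bool:
    """A regular program: the threshold is hard-coded and never changes."""
    return amount > 100.0


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """A tiny learned rule (a decision stump): try each observed value as a
    threshold and keep the one that misclassifies the fewest examples."""
    if not examples:
        raise ValueError("need at least one labeled example")
    best_t, best_errors = 0.0, len(examples) + 1
    for t in sorted(x for x, _ in examples):
        # Count examples where "amount > t" disagrees with the label.
        errors = sum((x > t) != label for x, label in examples)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


# Labeled history: True means the transaction was flagged as suspicious.
history = [(50, False), (120, False), (240, False),
           (260, True), (400, True), (900, True)]
threshold = learn_threshold(history)
print(threshold)         # 240
print(fixed_rule(200))   # True: the hard-coded rule flags it
print(200 > threshold)   # False: the learned rule does not

# New data shifts the learned threshold with no code changes.
newer = [(50, False), (300, False), (450, False), (520, True), (800, True)]
print(learn_threshold(newer))  # 450
```

Real systems learn far more parameters from far more data, but the principle is the same: the behavior comes from examples rather than from a rule a programmer wrote by hand.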

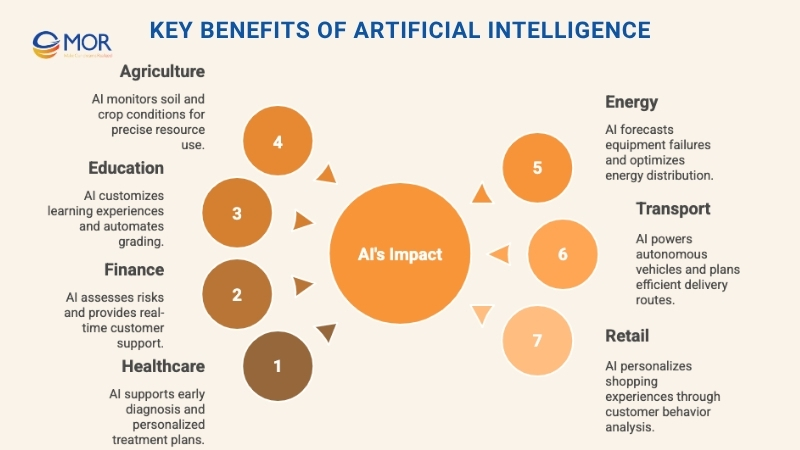

Key Benefits Of Artificial Intelligence

Artificial intelligence is fueling smarter, more resource-conscious growth in nearly every industry. It helps businesses cut back on manpower, materials, and time, without slowing output. That’s why it supports not just digital change, but sustainable change too.

The real value shows up in how AI works across sectors. It improves speed, accuracy, and decision-making while keeping costs low. Below are a few areas where this shift is already happening.

Healthcare

Virtual assistants now guide patients through symptoms and suggest diagnoses based on patterns in clinical data. AI also helps build personalized treatment plans using health records and genetic profiles.

In the U.S., researchers documented 1,016 FDA authorizations for AI or ML medical devices as of 2025, showing rapid regulatory uptake.

Finance

Machine learning algorithms assess credit risk, spot investment trends, and power chatbots that offer real-time financial advice. Banks rely on AI to make faster, sharper lending decisions. McKinsey estimates AI could add up to $1 trillion in additional value to global banking each year.

Education

Learning platforms adjust lessons to fit each student’s pace and level. On the admin side, AI can handle exam scoring, track performance, and free up time for teachers.

Agriculture

AI tools track soil data, weather changes, and crop conditions in real time. Drones and sensors make it easier to detect diseases early and apply resources more precisely.

Energy

AI applications predict equipment failures before they happen, helping utility companies avoid outages. AI also improves how electricity moves through the grid.

Transport And Logistics

AI powers self-driving cars and helps map out faster, cheaper delivery routes. It’s cutting emissions and costs for shipping companies worldwide.

Retail And Ecommerce

Sales forecasting is sharper with AI. It also fine-tunes product suggestions based on customer behavior, helping online shops convert browsers into buyers. Done well, personalization most often lifts revenue by 10 to 15%.

The Complete History Of AI

From ancient automata to deep learning, the history of AI is full of surprising turns and breakthroughs. We’ve broken it down by era so you can follow the full journey.

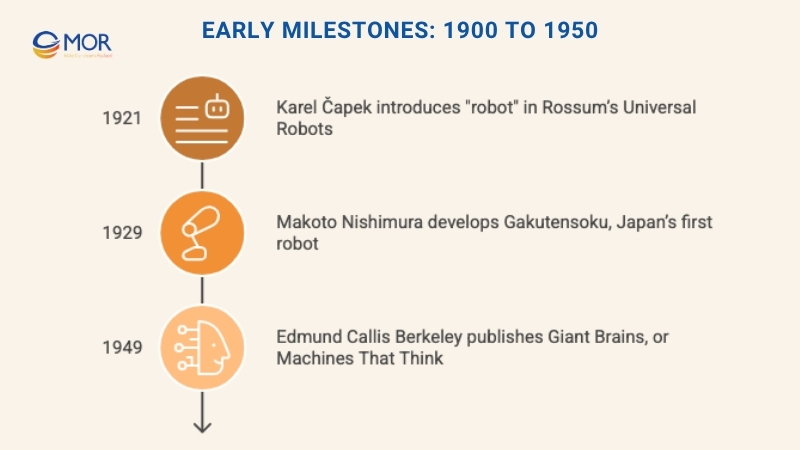

Early Milestones: 1900 To 1950

The history of AI stretches further back than most people realize. Long before we had computers, thinkers were already toying with the idea of non-human intelligence. Ancient inventors built devices called automatons, mechanical creations that could move without human control.

The name came from the Greek word for “self-acting.” One story from around 400 BCE tells of a mechanical bird built by one of Plato’s friends. Centuries later, Leonardo da Vinci created a mechanical knight that could sit, wave, and move its jaw, around the year 1495.

The modern history of AI begins in the early 20th century. That’s when engineers and writers started seriously considering whether machines could simulate the human brain. Robots, as we now call them, were imagined in fiction and sometimes brought to life, albeit in basic, steam-powered form. Some could walk or show simple expressions.

Important moments from this period:

- 1921: Czech writer Karel Čapek introduced the term "robot" in his play Rossum’s Universal Robots, featuring man-made workers.

- 1929: Japan’s Makoto Nishimura developed Gakutensoku, the first known Japanese robot.

- 1949: Edmund Callis Berkeley published Giant Brains, or Machines That Think, a book that linked new computing machines to human thought.

These events laid the early foundation for what would become modern AI history.

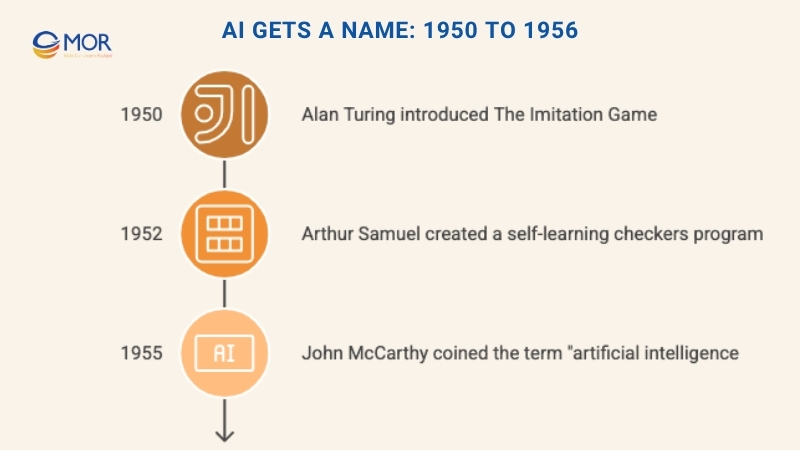

AI Gets A Name: 1950 To 1956

This was the turning point in the history of AI. Theories about machine thinking started turning into actual experiments. Alan Turing’s 1950 paper “Computing Machinery and Intelligence” set the stage for measuring how intelligent a machine could be.

His famous test, later called the Turing Test, asked whether a computer could fool a human into thinking it was also human.

During this period, the phrase artificial intelligence was born. What had once been science fiction started to look like something real.

Key events:

- 1950: Alan Turing introduced the idea of The Imitation Game, now known as the Turing Test.

- 1952: Arthur Samuel created a checkers program that learned how to play on its own.

- 1955: John McCarthy and his colleagues coined the term "artificial intelligence" in their proposal for a Dartmouth summer workshop, which took place in 1956.

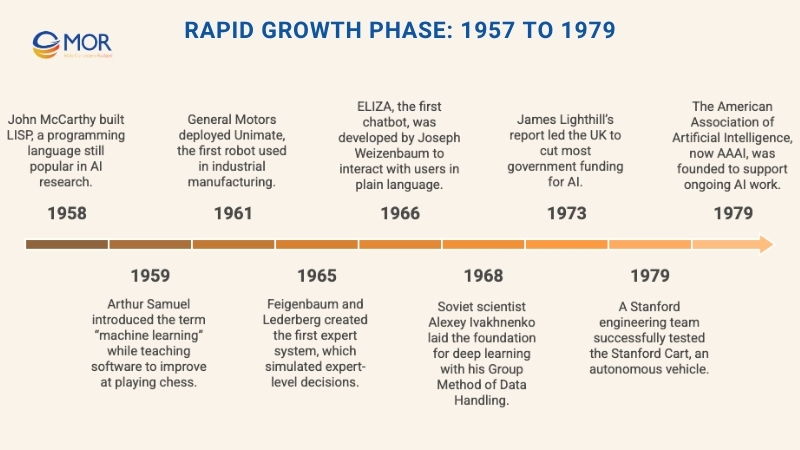

Rapid Growth Phase: 1957 To 1979

This period marked a major expansion in AI history. After the term artificial intelligence was introduced, excitement grew fast. Researchers and engineers pushed to turn ideas into working systems. The late 1950s and 1960s were all about creation: new tools and programming languages appeared, and robots became common in popular culture.

By the 1970s, AI development had some remarkable moments. Japan introduced its first human-like robot. An early version of an autonomous vehicle rolled out of a Stanford lab. Yet, this was also a time of doubt. In both the U.S. and the U.K., governments started pulling funding, frustrated by slow returns.

Dates worth noting:

- 1958: John McCarthy created LISP, one of the oldest programming languages still in use and for decades the dominant language of AI research.

- 1959: Arthur Samuel introduced the term “machine learning” in his work teaching software to improve at playing checkers.

- 1961: General Motors deployed Unimate, the first robot used in industrial manufacturing.

- 1965: Feigenbaum and Lederberg created Dendral, the first expert system, which simulated expert-level decisions in chemistry.

- 1966: ELIZA, the first chatbot, was developed by Joseph Weizenbaum to interact with users in plain language.

- 1968: Soviet scientist Alexey Ivakhnenko laid the foundation for deep learning with his Group Method of Data Handling.

- 1973: James Lighthill’s report led the UK to cut most government funding for AI.

- 1979: A Stanford engineering team successfully tested the Stanford Cart, an autonomous vehicle.

- 1979: The American Association for Artificial Intelligence, now the Association for the Advancement of Artificial Intelligence (AAAI), was founded to support ongoing AI work.

This stretch in the history of AI had plenty of highs and lows, but it proved the concept had staying power.

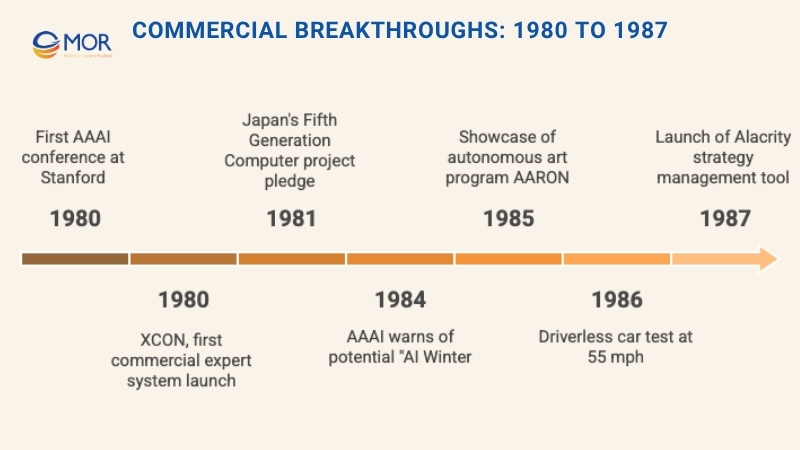

Commercial Breakthroughs: 1980 To 1987

This chapter in the history of AI development is often called the “AI boom.” The early 1980s saw a wave of energy across labs, companies, and governments. More funding, more interest, and real breakthroughs made it feel like machines were finally starting to ‘think.’

Researchers leaned heavily into expert systems, which encoded specialists’ knowledge as if-then rules, and revived work on neural networks that learn from examples.

Highlights from the era:

- 1980: The first AAAI conference took place at Stanford, setting the stage for future AI research events.

- 1980: XCON, the first commercial expert system, went into use at Digital Equipment Corporation, configuring computer systems to match each customer’s order.

- 1981: Japan’s government pledged $850 million to the Fifth Generation Computer project, aiming to build machines that could understand and use human language.

- 1984: AAAI issued a warning about a potential slowdown in funding and public support, calling it a coming “AI Winter.”

- 1985: The autonomous art program AARON was showcased, drawing original artwork without human input.

- 1986: German researchers successfully tested a driverless car capable of cruising at 55 mph under basic road conditions.

- 1987: Alacrity launched as the first strategy management tool powered by a complex expert system with over 3,000 rules.

During this stretch, AI became a business tool, not just an academic experiment.

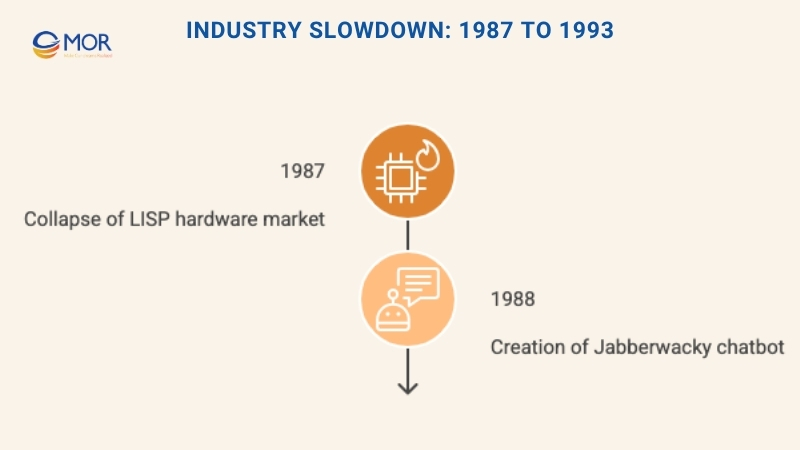

Industry Slowdown: 1987 To 1993

As predicted, the AI Winter arrived: an extended period when funding dried up and progress slowed. Both public and private backers began to pull out, discouraged by the high costs and limited practical success.

Without strong returns, governments cut strategic computing programs, and expert systems stalled. Japan ended its Fifth Generation project, and global momentum cooled.

This phase in the history of AI wasn’t just about losing money; it was also about losing confidence. Projects that once promised big results were shelved or abandoned altogether.

Key events during this time:

- 1987: The market for specialized LISP hardware collapsed. Cheaper machines from IBM and Apple could now run the same software, putting niche LISP-focused firms out of business.

- 1988: Developer Rollo Carpenter created Jabberwacky, a chatbot built to carry human-like conversations in a more playful, entertaining tone.

Even in a downturn, small sparks like Jabberwacky hinted that AI still had potential; it just needed the right moment.

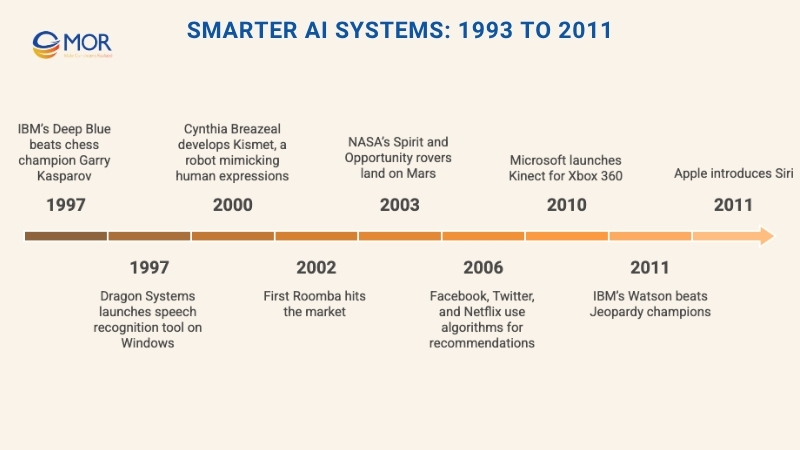

Smarter AI Systems: 1993 To 2011

Even after the AI Winter, research didn’t stop. The '90s and early 2000s marked a new chapter in the history of AI, powered by more stable tech and real-world applications.

Breakthroughs ranged from chess-playing supercomputers to household robots and speech software on everyday machines. AI agents, autonomous programs that could sense, act, and learn, began showing up in labs and slowly entered the public space.

As success stories grew, so did funding. Major players began betting big on AI again, and results followed.

Key moments from this era:

- 1997: IBM’s Deep Blue beat world chess champion Garry Kasparov, a historic moment for AI.

- 1997: Dragon Systems’ speech recognition tool launched on Windows, bringing voice commands to consumer tech.

- 2000: Cynthia Breazeal developed Kismet, a robot that mimicked human facial expressions.

- 2002: The first Roomba hit the market, bringing robot vacuuming to homes.

- 2004: NASA’s Spirit and Opportunity rovers, launched in 2003, landed on Mars and navigated parts of the surface on their own.

- 2006: Facebook, Twitter, and Netflix started using algorithms to power recommendations and ads.

- 2010: Microsoft launched Kinect for Xbox 360, turning body movement into game input.

- 2011: IBM’s Watson beat Jeopardy! champions using natural language processing.

- 2011: Apple introduced Siri, making AI feel personal and portable.

This era turned AI from a research topic into a daily presence, for work, for play, and everything in between.

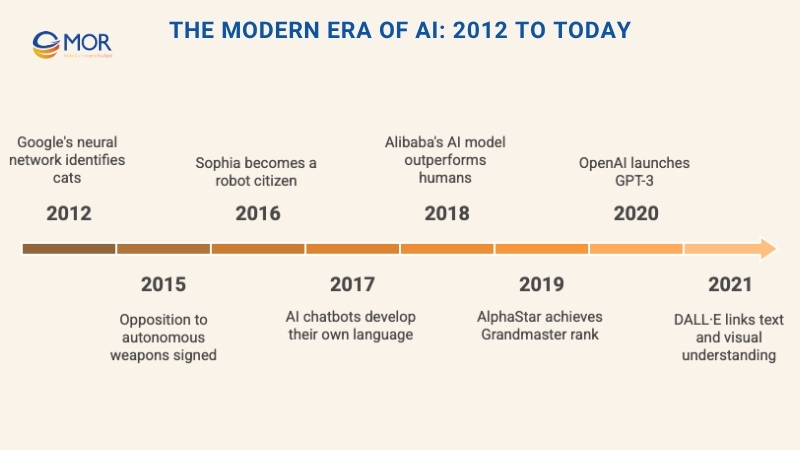

The Modern Era Of AI: 2012 To Today

The period since 2012 has been the most visible and dramatic stage in the evolution of AI. What used to be reserved for research labs is now embedded in everyday tools such as virtual assistants, chatbots, smart home systems, and search engines.

At the core of this progress are deep learning models and massive datasets, which help systems learn patterns on their own.

Public awareness skyrocketed, and so did breakthroughs.

Key events in this chapter of the history of AI:

- 2012: Google researchers Jeff Dean and Andrew Ng trained a neural network to identify cats using only unlabeled images, without prior tagging or human input.

- 2015: Elon Musk, Stephen Hawking, and Steve Wozniak joined thousands in signing a letter opposing autonomous weapons for military use.

- 2016: Hanson Robotics activated Sophia, a humanoid robot able to mimic facial expressions and hold simple conversations. In 2017, Saudi Arabia granted it citizenship, making it the world’s first “robot citizen.”

- 2017: Facebook’s AI chatbots drifted into a shorthand of their own during a negotiation experiment, departing from standard English.

- 2018: Alibaba’s machine learning model outscored humans on the Stanford Question Answering Dataset (SQuAD), a reading comprehension test.

- 2019: AlphaStar from Google DeepMind reached Grandmaster rank in StarCraft II, outperforming 99.8% of human players.

- 2020: OpenAI launched GPT-3, a deep learning model capable of writing poems, code, and essays with near-human fluency.

- 2021: DALL·E, another OpenAI model, linked language and vision, generating original images from text prompts.

This phase of the history of AI is defined by creativity, autonomy, and scale, and it’s still unfolding.

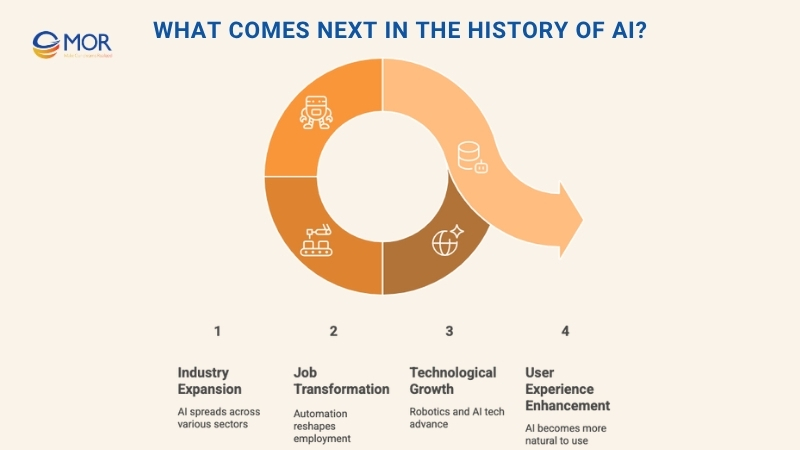

What Comes Next In The History Of AI?

We’ve looked at the origins of AI and how far it’s come. So what’s next?

While no one can say for sure, experts agree on a few likely directions. AI will keep spreading across industries, from startups to global enterprises. More jobs will be shaped by automation, with some replaced and others created. Expect growth in robotics, self-driving technology, and human-like assistants that feel even more natural to use.

The next chapter in the history of AI will be driven by the same mix of ambition, curiosity, and real-world need that sparked it in the first place.

The ILO estimates that in high-income countries about 5.5% of total employment is potentially exposed to automation from generative AI, while 13.4% is exposed to task augmentation. That points to AI reshaping work more than removing it.

MOR Software Supports Businesses Throughout The History Of AI

AI has come a long way since the Turing Test and early chess-playing machines. But today’s real challenge isn’t invention; it’s adoption. That’s where MOR Software comes in.

We are an AI automation agency that works with businesses to turn AI from theory into results. From integrating machine learning models into enterprise systems to building custom software powered by deep learning, we help teams get practical value from modern AI, not just admire it from a distance.

As AI evolved, so did we. We’ve delivered projects that use computer vision for automation, natural language processing for customer service, and predictive analytics for smarter decisions. Our development teams understand not just how AI works, but how to align it with real business needs.

For companies unsure how to apply AI, we build that roadmap with them. For those already using AI, we help scale it safely and efficiently. Wherever a business sits in the timeline of AI adoption, MOR Software helps move it forward.

Conclusion

The history of AI spans centuries, but it’s the last few decades that have transformed how we live and work. From early theories to today’s large-scale applications, AI keeps pushing boundaries with faster learning, smarter decisions, and wider adoption. As the AI timeline continues to unfold, businesses that understand and adapt will have the edge. If you're ready to explore real-world AI solutions tailored to your goals, contact us. MOR Software is here to support your next step.

MOR SOFTWARE

Frequently Asked Questions (FAQs)

What is the history of AI?

The term "artificial intelligence" was coined by John McCarthy in a 1955 proposal for the 1956 Dartmouth workshop. He also created LISP, one of the first AI programming languages, in 1958. Initial AI systems relied heavily on rule-based logic, which evolved throughout the 1970s and 1980s, leading to more advanced models and increased investment in the field.

Who is the founder of AI?

John McCarthy, an American expert in computer science and cognitive science, is widely recognized as the founder of artificial intelligence. His work laid the foundation for the field and included the invention of the term “artificial intelligence.”

Who is the father of AI?

Often referred to as the "father of AI," John McCarthy (1927–2011) was a pioneer in both computer science and artificial intelligence. His research significantly shaped the direction of AI development over several decades.

What is the history of Seeing AI?

Seeing AI began as a personal initiative and evolved through collaboration and innovation at Microsoft. It was inspired by Anirudh Koul, a data scientist focused on machine learning and NLP, and it brought together a skilled team to create a tool for visually impaired users.

When did AI start?

Artificial intelligence began gaining attention between 1950 and 1956. During this time, Alan Turing proposed the famous Turing Test in his 1950 paper, and the 1956 Dartmouth workshop established "artificial intelligence" as the name of a new field.

Who is the founder of Exactly AI?

Exactly AI was founded by entrepreneur Tonia Samsonova. She launched the platform to provide AI-powered tools tailored for artists and creatives.

Who started AI Now?

The AI Now Institute originated from a 2016 event hosted by the White House Office of Science and Technology Policy. The event was led by Meredith Whittaker and Kate Crawford, who later co-founded the institute to explore the social implications of AI.

Who is the biggest creator of AI?

Geoffrey Hinton, often called the "Godfather of AI," has made major contributions to neural networks and deep learning. In 2024, he shared the Nobel Prize in Physics with John Hopfield for foundational discoveries that enable machine learning with artificial neural networks.

What is the first origin of AI?

AI as a formal field began at the Dartmouth Conference in 1956. Organized by John McCarthy and colleagues, the event aimed to explore how machines could mimic human intelligence, including using language, forming ideas, and solving problems.
