How Do Neural Networks Work? Explained With Real-World Examples

Is your business ready to harness the power of AI to enhance operational efficiency and strategic decision-making? In today’s rapidly digitalizing landscape, understanding how neural networks work and how to integrate them with other advanced technologies is critical to maintaining a competitive edge. In this article, MOR Software provides an overview of how neural networks operate and how AI solutions can be tailored to your business's specific needs.

What Is A Neural Network?

A neural network is a computational model in artificial intelligence designed to mimic how the human brain processes and transmits information. Instead of relying on simple linear algorithms, a neural network is built from many interconnected artificial neurons, similar to the structure of biological neural systems.

Each artificial neuron receives input data, performs a calculation, and passes the result to other neurons in the network. This interconnected system allows machines to learn from data and improve accuracy over time.

Basic Concepts In Neural Networks You Should Know

To better understand neural networks, it’s important to know some foundational concepts. These elements are the building blocks that explain how neural networks work and why they have become so powerful in AI.

- Neuron (processing node): The basic unit in a neural network. Each neuron receives input data, performs simple calculations, and passes signals to other neurons. When thousands of neurons connect together, they create a system capable of learning.

- Weights: Every connection between neurons has an associated weight. Weights determine the importance of each signal. During neural network learning, these weights are adjusted to improve the model’s accuracy.

- Activation function: After computation, neurons need a mechanism to decide whether to pass signals forward. The activation function allows the network to capture nonlinear relationships, rather than just linear transformations.

- Layers: A typical neural network is structured into three types of layers:

  - Input layer: where raw data enters the network.

  - Hidden layers: where features are processed and extracted.

  - Output layer: where final predictions or classifications are produced.
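To make the layer structure concrete, here is a minimal sketch that counts the learnable parameters in a small fully connected network. The layer sizes (4 inputs, 8 hidden neurons, 3 outputs) are illustrative assumptions, and the count includes one bias per neuron, a term the article introduces in the next section.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out   # one weight per connection between the two layers
        biases = n_out           # one bias per neuron in the receiving layer
        total += weights + biases
    return total

# Hypothetical network: 4 inputs, one hidden layer of 8 neurons, 3 outputs.
example_sizes = [4, 8, 3]
example_total = count_parameters(example_sizes)
print(example_total)
```

Even this tiny network has dozens of adjustable values, which gives a sense of why larger networks need substantial data and computing power to train.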

How Do Neural Networks Work?

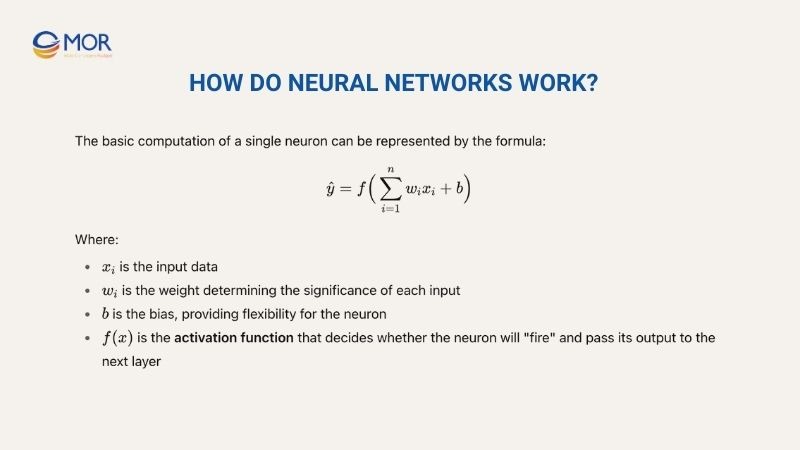

To understand how neural networks work, imagine each node as a small linear regression model consisting of input data, weights, a bias, and an output. The weights determine the importance of each input variable, while the bias shifts the neuron's activation threshold, giving the neuron more flexibility in what it can learn.
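As a rough illustration of a single node, the sketch below computes a weighted sum plus a bias and applies a simple threshold. The input values, weights, bias, and function names are all hypothetical and chosen only to make the arithmetic easy to follow.

```python
def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, as in a linear regression model."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

def step_activation(z):
    """Fire (1) when the weighted sum reaches the threshold of 0, else stay silent (0)."""
    return 1 if z >= 0 else 0

# Hypothetical inputs and hand-picked weights, purely for illustration.
inputs = [1.0, 0.0, 1.0]
weights = [0.5, 0.2, 0.4]
bias = -0.6   # a more negative bias raises the bar the inputs must clear

z = neuron_output(inputs, weights, bias)
print(z, step_activation(z))
```

With these numbers the weighted sum is slightly above zero, so the neuron fires. Lowering the bias to -1.0 would keep the same inputs below the threshold.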

The output of one neuron becomes the input for neurons in the next layer, forming a feedforward neural network, where information flows from the input layer to the output layer without looping backward.
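The flow from layer to layer can be sketched in a few lines. This is a minimal example, assuming a sigmoid activation and made-up weights for a network with 2 inputs, 2 hidden neurons, and 1 output. None of these values come from a trained model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Compute the outputs of one fully connected layer.

    weights[j] holds the incoming weights of neuron j in this layer.
    """
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    """Pass the inputs through each layer in order, never looping back."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical parameters for a 2-input, 2-hidden-neuron, 1-output network.
layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),   # hidden layer
    ([[1.2, -0.7]], [0.05]),                    # output layer
]
prediction = feedforward([1.0, 2.0], layers)
print(prediction)
```

Each layer's output list becomes the next layer's input list, which is all that "feedforward" means in practice.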

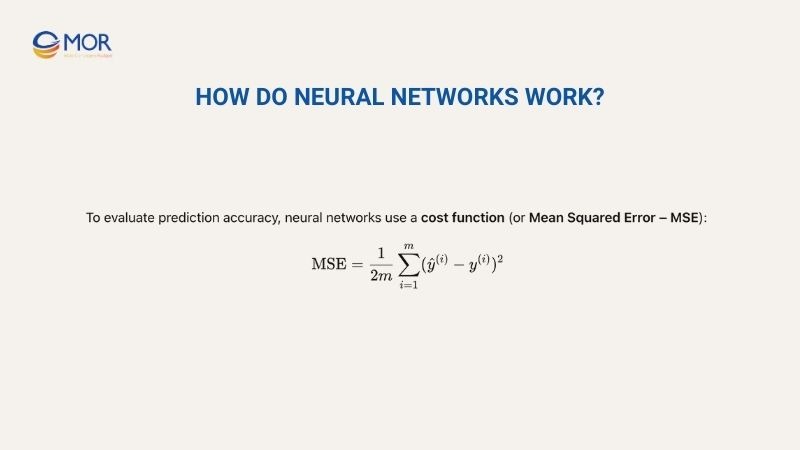

To evaluate prediction accuracy, neural networks use a cost function. A common choice is Mean Squared Error (MSE), the average of the squared differences between the predicted and actual values.
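For reference, the standard definition of Mean Squared Error can be written as below. The symbols are assumptions chosen for this sketch rather than notation from the article: $n$ is the number of training examples, $y_i$ is the actual value for example $i$, and $\hat{y}_i$ is the network's prediction for that example.

$$
\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2
$$

Some texts scale the sum by $\frac{1}{2n}$ instead, which simplifies the derivative without changing where the minimum lies.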

In more complex applications, such as image recognition or text classification, neural networks can use sigmoid neurons, whose outputs vary smoothly between 0 and 1. Because of this smoothness, a small change in any single input or weight causes only a small change in the output, which improves stability during training.
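The stabilizing effect of a smooth activation can be seen by nudging a neuron's weighted sum across zero. The sketch below compares a hard threshold with a sigmoid. The two sample values are arbitrary assumptions.

```python
import math

def step(z):
    """Hard threshold: the output flips abruptly at zero."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Smooth squashing function with outputs strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

# Nudge the weighted sum from just below zero to just above it.
before, after = -0.05, 0.05
step_change = step(after) - step(before)            # jumps from 0 to 1
sigmoid_change = sigmoid(after) - sigmoid(before)   # moves only slightly
print(step_change, round(sigmoid_change, 4))
```

The threshold neuron swings by its full range, while the sigmoid neuron shifts by only a few hundredths, so a tiny weight update cannot suddenly flip the network's behavior.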

When training with labeled datasets (supervised learning), the network calculates the cost function to measure the difference between predictions and actual values. The goal is to minimize the cost function, ensuring increasingly accurate predictions.
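As a small worked example, the following sketch computes Mean Squared Error for two sets of predictions against the same labels. The labels and predictions are invented for illustration.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)) / len(actual)

# Hypothetical labels and two sets of predictions.
actual = [1.0, 0.0, 1.0, 1.0]
rough_guess = [0.5, 0.5, 0.5, 0.5]
better_guess = [0.9, 0.1, 0.8, 0.7]

rough_cost = mean_squared_error(actual, rough_guess)
better_cost = mean_squared_error(actual, better_guess)
print(rough_cost, better_cost)
```

The predictions that sit closer to the labels produce the lower cost, which is exactly the signal training uses to decide whether a change to the weights helped.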

To optimize weights, neural networks apply gradient descent, adjusting parameters in small steps in the direction that reduces the error and converging toward a minimum of the cost function. The gradients are computed with backpropagation, which propagates errors from the output layer back through earlier layers so that every weight can be updated.

This mechanism is the core of how neural networks learn, allowing models to improve their predictions over time. Thanks to this process, AI neural networks can handle complex real-world tasks in areas such as robotics and self-driving vehicles.

Understanding this process enables businesses and developers to design, optimize, and deploy AI neural network systems effectively.
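Gradient descent is easiest to see on the smallest possible model. The sketch below fits a single linear neuron, with no hidden layers, to toy data using Mean Squared Error as the cost. The data, learning rate, and epoch count are assumptions picked so the example converges quickly. In a multi-layer network, backpropagation applies the chain rule to obtain the same kind of gradients for every layer.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the MSE cost with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the cost.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (an assumption made for this example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))
```

Starting from zero, the parameters move toward the slope and intercept that generated the data. A learning rate that is too large would make the updates overshoot and diverge instead.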

Popular Types Of Neural Networks You Should Know

When you learn about neural networks, it becomes clear that there are many different architectures, each suited to specific types of data and problems. In this section, we will explore four of the most common forms of neural networking today.

Feed-forward Neural Networks (FNNs)

A feed-forward neural network (FNN) is one of the most basic neural network architectures. Its main characteristic is that data flows in a single direction, from the input layer through the hidden layers to the output layer, without any loops or backward connections.

Because of this simplicity, FNNs are often applied in basic classification tasks such as handwritten digit recognition or predicting labels for normalized data. While not as powerful as more advanced models like CNNs or RNNs, FNNs remain a fundamental building block when beginners learn about neural networks.

Convolutional Neural Networks (CNNs)

Convolutional neural networks (CNNs) are among the most important architectures for working with images. CNNs operate through convolution layers that extract features from images, combined with pooling layers that reduce dimensionality and improve computational efficiency.

Thanks to their ability to detect edges, shapes, and complex patterns, CNNs are widely used in facial recognition, medical image classification, and self-driving systems.
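The two core CNN operations can be sketched in plain Python. This example assumes a tiny 5x5 grayscale image and a hand-written vertical-edge kernel. A trained CNN would learn its kernels from data, and, as in most deep learning libraries, the "convolution" here does not flip the kernel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            )
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical 5x5 image: dark left side (0), bright right side (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written kernel that responds where brightness increases left to right.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)   # 4x4 map, nonzero only at the edge
pooled = max_pool2d(feature_map)          # 2x2 summary of the feature map
print(feature_map)
print(pooled)
```

The feature map lights up only in the column where dark meets bright, and pooling shrinks the map while keeping that signal.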

Recurrent Neural Networks (RNNs)

Recurrent neural networks (RNNs) are built to handle sequential data, making them well suited to time series and natural language. The defining feature of RNNs is their ability to “remember” information from previous steps and use it for the current step, allowing them to capture context in sentences or patterns over time.

Traditional RNNs struggle with long sequences, which is why advanced variants such as LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) were developed. These architectures power machine translation, sentiment analysis, and chatbot systems, showcasing the strength of AI neural networks in language-related tasks.
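The "memory" of a basic RNN is a hidden state that is updated at every step. The sketch below uses a single scalar hidden state and made-up weights to keep the idea visible. Real RNNs use weight matrices, and LSTM and GRU cells add gating on top of this basic recurrence.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Process a sequence left to right, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# The same final input (0.0) leads to different states depending on what came before.
after_positive = run_rnn([1.0, 1.0, 0.0])[-1]
after_negative = run_rnn([-1.0, -1.0, 0.0])[-1]
print(after_positive, after_negative)
```

Both sequences end with the same input, yet the final states differ in sign because each one still carries a trace of the earlier steps.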

Generative Adversarial Networks (GANs)

Generative adversarial networks (GANs) are among the most innovative neural network architectures in use today. A GAN consists of two main components: a generator, which creates synthetic data, and a discriminator, which evaluates whether the data is real or fake. The two networks compete against each other, resulting in increasingly realistic outputs.

This mechanism has made GANs highly useful for generating digital art and producing deepfake videos. GANs demonstrate how neural network learning can go beyond prediction to generate new content.
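The competition between the two networks is usually expressed through their loss functions. The sketch below assumes a commonly used formulation based on binary cross-entropy and uses invented discriminator scores. It shows only the scoring, not the networks or the training loop.

```python
import math

def binary_cross_entropy(prediction, target):
    """Penalty for a probability `prediction` when the true label is `target` (0 or 1)."""
    eps = 1e-12   # keeps log() away from zero
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored near 1 and fakes near 0."""
    return binary_cross_entropy(d_real, 1) + binary_cross_entropy(d_fake, 0)

def generator_loss(d_fake):
    """The generator wants the discriminator to score its fakes near 1."""
    return binary_cross_entropy(d_fake, 1)

# Hypothetical discriminator scores early and late in training.
early = {"d_real": 0.9, "d_fake": 0.1}   # fakes are easy to spot
late = {"d_real": 0.6, "d_fake": 0.5}    # fakes have become convincing

print(generator_loss(early["d_fake"]), generator_loss(late["d_fake"]))
print(discriminator_loss(**early), discriminator_loss(**late))
```

As the fakes become more convincing, the generator's loss falls while the discriminator's loss rises, which is the tug-of-war that drives both networks to improve.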

The Major Advantages Of Neural Networks In Machine Learning

Artificial neural networks are not just ordinary data processing tools. They also offer powerful advantages that enhance learning and prediction performance in AI. In this section, we will explore the key benefits of neural networks:

Adaptability

One of the most notable advantages of artificial neural networks is adaptability. When trained on new data, the network adjusts its weights to improve prediction accuracy.

This adaptability also allows AI neural networks to be applied flexibly across various fields, from financial analysis and healthcare to image recognition and natural language processing, ensuring that the system keeps learning and improving over time.

Nonlinearity

Another critical advantage of neural networks is nonlinearity. Thanks to activation functions, networks can handle complex, nonlinear relationships in data that simple linear algorithms cannot. This is what makes neural networks effective in tasks involving complex predictions or classifications.

Nonlinearity makes neural networks more flexible, allowing them to learn and model intricate relationships in images, language, or time series. In facial recognition, for example, neural networks can distinguish different expressions of the same person by capturing nonlinear relationships between pixels.
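A classic illustration of why nonlinearity matters is the XOR function, which no single linear neuron can compute because no straight line separates its outputs. The sketch below uses hand-picked weights, not trained ones, to show how one hidden layer with a threshold activation solves it.

```python
def step(z):
    return 1 if z >= 0 else 0

def xor_network(x1, x2):
    """Two hidden threshold neurons plus one output neuron, with hand-picked weights."""
    h_or = step(x1 + x2 - 0.5)        # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)       # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)   # "OR but not AND" is XOR

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Removing the `step` calls would collapse the whole network into a single linear expression, and the XOR pattern could no longer be represented.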

Parallel Processing

Artificial neural networks can perform parallel processing, leveraging the power of GPUs or TPUs to compute thousands of neurons and connections simultaneously. This capability accelerates training and allows models to learn faster when AI neural networks handle large-scale datasets.

Parallel processing is particularly crucial when training deep or complex models, where sequential computation would be slow and inefficient. For example, a CNN trained for medical image recognition can process thousands of X-ray images in parallel on a GPU.

Fault Tolerance

Neural networks have fault tolerance, meaning they maintain stable performance even when input data is noisy or incomplete. Thanks to multi-layer connections and distributed signals, minor errors do not collapse the entire system, showcasing the resilience of neural networking.

In speech recognition systems, the network can still understand user commands even with background noise or microphone issues, allowing virtual assistants to operate smoothly.

Generalization

One of the most important strengths of neural networks is generalization. Networks do not just learn from training data. They can apply that knowledge to new, unseen data.

This capability highlights the power of neural network learning, enabling accurate predictions across various real-world scenarios, from image analysis and financial forecasting to natural language processing.

Key Benefits Of Neural Networks For Your Business

The application of artificial neural networks in businesses helps optimize processes and reduce operational costs, and opens up opportunities to enhance data-driven decision-making. In this section, we will explore the key benefits that companies can gain from neural networking.

Increased Operational Efficiency

By implementing neural networks, businesses can automate repetitive processes, from warehouse management and order processing to basic customer service. This reduces labor costs, speeds up operations, and minimizes human error.

According to McKinsey, applying AI in warehouse management can save 5–20% in logistics costs. For example, Walmart is investing $200 million in autonomous forklifts to improve efficiency and reduce labor expenses in its warehouses.

Data-Driven Decision Making

Applying neural networks allows companies to leverage massive datasets from business operations, customer behavior, and market trends. Neural networks help analyze complex data patterns, predict consumer trends, identify potential risks, and provide actionable strategic insights.

Moreover, businesses can quickly adjust plans when markets fluctuate, enhancing flexibility and maximizing profitability.

Personalized Customer Experience

Artificial neural networks enable companies to gain deep insights into each customer by analyzing behavioral data, preferences, purchase history, and interactions across digital channels. This neural networking capability allows for personalized product or service recommendations, boosting conversion rates and revenue.

For instance, Amazon uses neural networks to analyze purchase history and search behavior to provide personalized product recommendations, significantly increasing sales. Similarly, Netflix applies neural networks to predict viewer preferences based on watch history and content ratings.

Competitive Advantage

Implementing AI neural networks helps businesses maintain a competitive edge by providing deep insights into customer behavior, market trends, and competitor activities. Neural networking supports advanced data analysis and predicts potential business opportunities, enabling companies to innovate products and services ahead of competitors.

According to an IBM survey, three-quarters (75%) of CEOs believe that organizations with advanced AI will have a competitive advantage. Among them, 43% have integrated AI into strategic decision-making, and 36% into operational decisions.

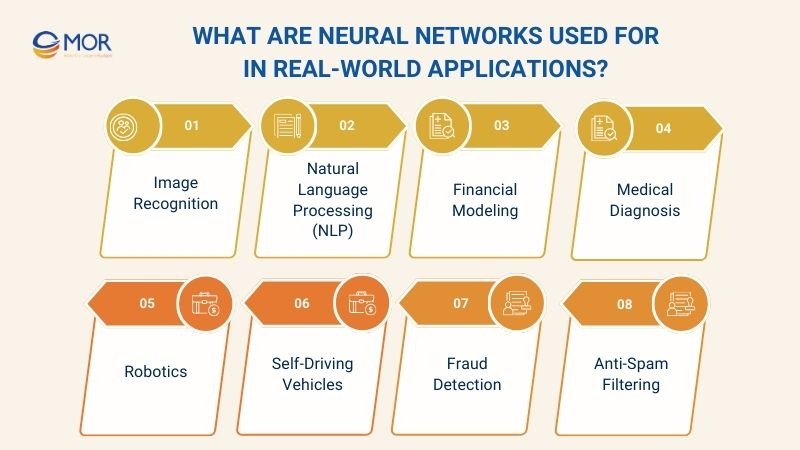

What Are Neural Networks Used For In Real-World Applications?

When exploring how neural networks work, it becomes clear that their power goes beyond theory, extending into practical applications. In this section, we will explore the prominent real-world uses of AI neural networks that businesses and technologies are leveraging daily.

Image Recognition

The application of neural networks in image recognition enables computers to classify, detect, and identify objects in images or videos. This application is particularly important in AI systems, helping automate quality control, security monitoring, and product classification across industries.

Example: A manufacturing company uses CNNs to inspect products on the assembly line, automatically detecting defective or damaged items, reducing errors, and improving operational efficiency.

Processing Flow:

- Input data: Product images from cameras on the assembly line.

- Feature extraction: Convolution and pooling layers detect important details, defects, or abnormalities.

- Classification/recognition: Fully connected layers determine whether the product meets quality standards.

- Output: The system automatically labels and removes defective products, sending reports to the operations team.

Natural Language Processing (NLP)

Applying neural networks in NLP allows computers to understand, analyze, and generate human language. Neural networking in NLP powers chatbots, virtual assistants, and sentiment analysis systems, enabling businesses to process large volumes of text or voice data and improve customer interactions.

Example: A fintech company uses RNN/LSTM to analyze customer feedback from emails and chats, predicting satisfaction trends and improving service quality.

Processing Flow:

- Input data: Text from emails, chats, or social media comments.

- Preprocessing: Tokenization, normalization, and removal of irrelevant words.

- Feature extraction: Embedding layers or word2vec convert text into vector representations.

- Classification/text generation: RNN/LSTM predicts sentiment or generates automated responses.

- Output: The system suggests replies, classifies sentiment, or generates summary reports for the business.
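The first steps of this flow can be sketched with the standard library alone. The example below tokenizes and normalizes a message, drops stop words, and builds a simple word-count vector. Bag-of-words counts are used here as a simpler stand-in for embeddings or word2vec, and the stop-word list, message, and vocabulary are all hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; real systems use much larger ones.
STOP_WORDS = {"the", "a", "an", "is", "was", "and", "to", "of", "it", "i"}

def preprocess(text):
    """Lowercase the text, split it into word tokens, and drop stop words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]

def bag_of_words(tokens, vocabulary):
    """Turn tokens into a count vector ordered by the vocabulary."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]

# Hypothetical customer message and vocabulary.
message = "The app is great, and the support team was great too!"
vocabulary = ["great", "slow", "support", "refund"]

tokens = preprocess(message)
vector = bag_of_words(tokens, vocabulary)
print(tokens)
print(vector)
```

The resulting vector is the kind of numeric input a sentiment model would receive. An RNN or LSTM would instead consume the tokens in order, typically as embedding vectors.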

Financial Modeling

Neural networks in financial modeling help predict stock prices, analyze risks, and optimize investment portfolios. Neural networking can analyze complex, nonlinear financial data to provide more accurate forecasts than traditional methods.

Example: A financial company uses neural networks to predict stock price trends based on trading history, market fluctuations, and economic data, guiding strategic investment decisions.

Processing Flow:

- Input data: Stock prices, market indices, economic news.

- Preprocessing: Normalization, noise removal, handling missing data.

- Feature extraction: Neural network learns patterns and nonlinear relationships in the data.

- Prediction: The network forecasts prices or risk levels for investment portfolios.

- Output: Supports analysts and risk managers in making informed investment decisions.
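The preprocessing step in this flow often decides how well the network can learn. The sketch below shows two common operations, filling a missing value and min-max normalization, on an invented price series. Forward fill is only one of several ways to handle gaps and is used here as an assumption.

```python
def fill_missing(values):
    """Replace None with the previous known value (forward fill)."""
    filled, last = [], None
    for v in values:
        if v is None:
            v = last   # remains None only if the series starts with a gap
        filled.append(v)
        last = v
    return filled

def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical daily closing prices with one missing day.
prices = [100.0, 102.0, None, 101.0, 110.0]
cleaned = fill_missing(prices)
scaled = min_max_normalize(cleaned)
print(cleaned)
print(scaled)
```

Scaling inputs to a common range keeps large raw values, such as index levels, from dominating smaller ones during training.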

Medical Diagnosis

Neural networks in healthcare assist in analyzing medical images, predicting diseases, and supporting accurate diagnosis. AI neural networks can process X-rays, MRIs, or CT scans, detecting abnormalities that are difficult for humans to spot.

Example: A hospital applies CNNs to analyze X-ray images, automatically detecting lung lesions and helping doctors diagnose faster while reducing errors.

Processing Flow:

- Input data: X-ray, MRI, or CT scan images.

- Feature extraction: Convolution layers identify abnormal regions.

- Classification: Fully connected layers predict the type of lesion or disease.

- Output: Automated reports are sent to doctors for reference during diagnosis.

Robotics

Neural networks enable robots to learn and adapt to their environment by processing visual and sensor data to make decisions. Neural networking helps robots automate complex tasks, from product assembly to navigation in open spaces.

Example: A manufacturing company applies neural networks to allow robots to recognize objects and adjust their actions on the assembly line, reducing errors and improving productivity.

Processing Flow:

- Input data: Camera images and environmental sensor data.

- Feature extraction: CNNs or RNNs recognize objects and analyze surroundings.

- Behavior decision: The neural network determines appropriate actions.

- Output: Robots execute tasks accurately and adapt to environmental changes.

Self-Driving Vehicles

Neural networks in self-driving vehicles process camera, radar, and lidar data to control the car, detect obstacles and traffic signals, and predict pedestrian behavior. This is a practical example of how neural networks work in real-world applications.

Example: A self-driving car company uses CNNs and RNNs to analyze camera and sensor data, allowing the vehicle to recognize traffic signs and obstacles and make safe driving decisions.

Processing Flow:

- Input data: Camera images, lidar/radar data, GPS.

- Feature extraction: CNN detects traffic signs, pedestrians, and obstacles.

- Behavior prediction: RNN predicts the movement of surrounding objects.

- Decision-making: The system controls speed, braking, and steering.

- Output: The car drives safely, accurately, and efficiently.

Fraud Detection

Neural networks help detect unusual transactions in banking, credit cards, or online payment platforms. A network learns normal transaction patterns and identifies sophisticated fraudulent activity that follows nonlinear patterns.

Example: A fintech company applies neural networks to monitor credit card transactions, automatically flagging suspicious activity and reducing financial risk.

Processing Flow:

- Input data: Transaction history, account information, user behavior.

- Feature extraction: The network learns normal and abnormal patterns.

- Classification: Predicts whether a transaction is legitimate or fraudulent.

- Output: Sends automatic alerts and blocks suspicious transactions if necessary.

Anti-Spam Filtering

Neural networks support filtering spam emails and messages by detecting patterns and keywords characteristic of unwanted content. Neural networking improves accuracy compared to traditional filters.

Example: An email service company uses neural networks to categorize incoming messages as primary inbox, spam, or promotional, saving users time and improving their experience.

Processing Flow:

- Input data: emails or messages.

- Feature extraction: keywords, frequency, sentence structure.

- Classification: the network predicts spam or legitimate messages.

- Output: automatically moves messages to the correct folder or alerts the user.
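The flow above can be sketched end to end with the simplest neural model, a single-layer perceptron trained on keyword counts. The keyword list and the four training messages are made up for illustration, and a production filter would use far richer features and far more data.

```python
KEYWORDS = ["free", "winner", "meeting"]   # hypothetical feature vocabulary

def extract_features(message):
    """Count how often each keyword appears in the message."""
    words = message.lower().split()
    return [words.count(k) for k in KEYWORDS]

def predict(weights, bias, features):
    """Return 1 (spam) if the weighted score is positive, else 0 (legitimate)."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def train_perceptron(examples, epochs=10):
    """Perceptron learning rule: adjust weights only when a prediction is wrong."""
    weights, bias = [0.0] * len(KEYWORDS), 0.0
    for _ in range(epochs):
        for message, label in examples:
            features = extract_features(message)
            error = label - predict(weights, bias, features)
            weights = [w + error * x for w, x in zip(weights, features)]
            bias += error
    return weights, bias

# Tiny made-up training set: 1 = spam, 0 = legitimate.
examples = [
    ("free prize winner free", 1),
    ("winner claim free cash", 1),
    ("meeting agenda for monday", 0),
    ("free for a meeting today", 0),
]
weights, bias = train_perceptron(examples)
print(predict(weights, bias, extract_features("you are a winner free entry")))
print(predict(weights, bias, extract_features("team meeting at noon")))
```

The learned weights end up positive for the spam-leaning keywords and negative for "meeting", so the model classifies the two unseen messages the way a reader would expect.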

Key Differences Between Neural Networks And Deep Learning

When exploring how neural networks work, many people wonder about the differences between traditional neural networks and deep learning. While both are based on neural network models, they differ in structure, data handling, and complexity. The comparison table below explains the main differences, from computation to real-world applications.

| Criteria | Neural Networks (NN) | Deep Learning (DL) |
| --- | --- | --- |
| Complexity | Typically shallow with few hidden layers, simpler architecture | Multiple hidden layers (deep architecture), highly complex |
| Computation Requirements | Low; can run on a CPU | High; often requires GPU/TPU for training |
| Training Time | Faster due to fewer layers and neurons | Slower due to deep architecture and a large number of neurons |
| Use Cases | Simple classification, basic predictions | Image recognition, NLP, speech recognition, and complex AI tasks |
| Feature Engineering | Requires manual feature engineering | Automatically extracts features from raw data |
| Performance on Complex Tasks | Limited effectiveness for nonlinear or large datasets | Excellent for nonlinear, image, audio, and text data |

Why Choose MOR Software For Your Neural Network And AI Projects?

MOR Software brings extensive experience in AI development and neural network implementation, delivering tailored solutions that meet diverse business needs. With expertise across multiple industries, MOR Software ensures projects are executed efficiently, effectively, and with measurable results.

Reasons to choose MOR Software:

- Proven Expertise: Years of experience in developing and deploying AI neural networks for real-world applications.

- Industry Versatility: Successful AI projects in Fintech, Healthcare, Education, Automotive, and more.

- Customized Solutions: Tailored neural network models designed to solve specific business challenges and optimize operations.

- Cutting-Edge Technology: Utilizes state-of-the-art frameworks, GPUs, and deep learning techniques to ensure performance and scalability.

- Data-Driven Approach: Focused on leveraging neural network learning to generate actionable insights and enhance decision-making.

- End-to-End Support: From planning and development to deployment and maintenance, providing comprehensive project support.

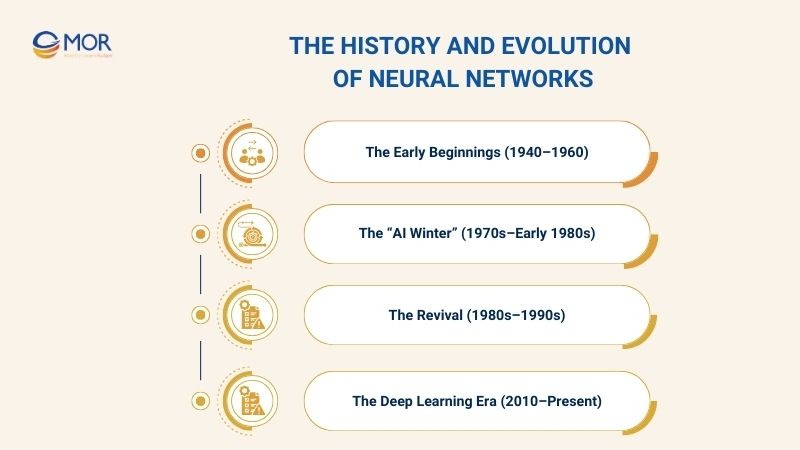

The History And Evolution Of Neural Networks

When exploring how neural networks work, it’s essential to understand the history and key milestones that have shaped AI neural networks today. In this section, we will trace the journey from the earliest models to the modern deep learning era, showing how neural networks have evolved over time.

The Early Beginnings (1940–1960)

The period from 1940 to 1960 marked the emergence of the first artificial neural network models, helping us learn about neural networks and their foundational principles.

In 1943, scientists Warren McCulloch and Walter Pitts developed the first mathematical model of artificial neurons, illustrating the basics of neural networking. In 1949, Donald Hebb proposed Hebbian learning, in which connections between neurons strengthen when they are activated together, laying the groundwork for how neural networks learn today.

In 1958, Frank Rosenblatt introduced the Perceptron, a simple neural network model with a single layer of neurons capable of learning to classify data. This was an early example of a neural network simulating aspects of human brain processing.

The “AI Winter” (1970s–Early 1980s)

From the mid-1970s to the early 1980s, the field of artificial intelligence and early neural networks faced a period known as the AI Winter. Despite initial excitement, limited computing power and insufficient data caused many researchers to question whether neural networks could work in practical applications.

During this phase, the development of Perceptrons and other early models slowed, and much of the focus shifted to rule-based expert systems. Nevertheless, this period laid the theoretical foundation for AI neural networks, highlighting the challenges of training neural networks with limited resources.

The Revival (1980s–1990s)

The 1980s brought a revival of interest in neural networks, largely due to breakthroughs in algorithms and hardware. The introduction of backpropagation finally allowed researchers to train deeper, multi-layer architectures.

Multi-layer perceptrons (MLPs) became popular for solving complex problems such as handwriting recognition, speech processing, and financial prediction. This era demonstrated that neural network learning could be applied beyond simple theoretical exercises to real-world tasks.

The Deep Learning Era (2010–Present)

Since 2010, the rise of deep learning has transformed the AI landscape. Advances in GPU computing, Big Data, and cloud platforms have enabled training of highly complex neural networks. This period illustrates how neural networks work on large-scale real-world datasets, making deep architectures like CNNs, RNNs, and GANs widely used.

Modern AI neural networks can process images, text, and sequential data efficiently, showing the power of neural networking in practical applications.

In Conclusion

Understanding how neural networks work is not just theoretical knowledge; it opens up practical opportunities for businesses. Let MOR Software partner with your business to deploy customized AI neural network solutions, boost operational efficiency, and create sustainable value. Contact us today to discover how neural networks can transform your data into a strategic advantage.
