What Is Transfer Learning? A Complete Guide To Techniques & Uses

Are you looking to accelerate your business’s AI projects while reducing training costs and time? Transfer learning could be the solution. In this article, MOR Software explains what transfer learning is and shows how to leverage pre-trained models to optimize AI development.

What Is Transfer Learning?

Transfer learning is a technique in machine learning and deep learning that allows a model trained on one task (the source task) to reuse its knowledge to solve a new task (the target task). In other words, transfer learning enables the model to build on features it has already learned instead of starting from scratch.

Example: Imagine you already know how to ride a bicycle. When learning to ride a motorcycle, you don’t need to relearn balance or steering; you only need to master using the throttle and brakes.


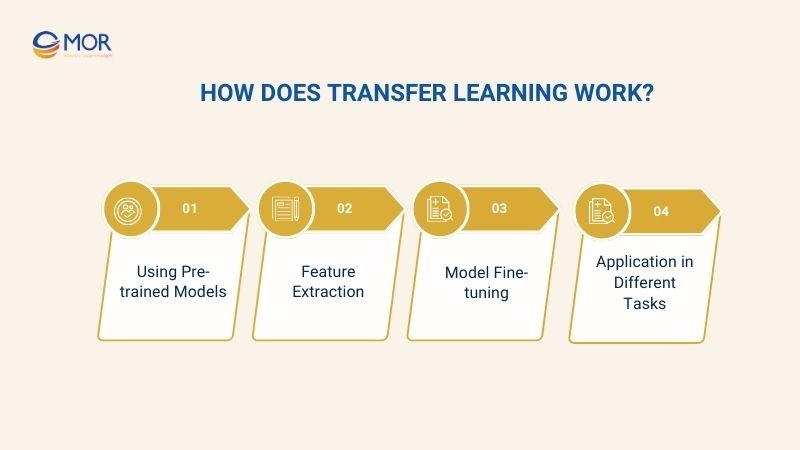

How Does Transfer Learning Work?

To better understand transfer learning in machine learning, we first need to grasp how the technique works. Here are the main methods a model uses to transfer knowledge:

Using Pre-trained Models

One of the most popular transfer learning techniques is using pre-trained models. These are models that have already been trained on very large and diverse datasets. This training process allows the model to learn general features of the data, such as:

- For images: detecting edges, shapes, and colors.

- For text: understanding sentence structure, semantics, and grammar.

In practice, most of the layers in the pre-trained model are kept unchanged, and only the final layers are fine-tuned so the model can adapt to new data and specific tasks.

Feature Extraction

Feature extraction is the process of extracting important features from intermediate layers of a pre-trained model to use for a new task. Instead of training a model from scratch, you use the learned features as inputs for the new model.

For example, in image recognition, a model has already learned to detect basic elements like edges, shapes, and colors. When moving to a new task, such as medical image classification, these features are still useful.
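As a minimal sketch of feature extraction, the NumPy example below treats a fixed weight matrix as a stand-in for the intermediate layers of a pre-trained model. This is an assumption for illustration: a real project would load trained weights rather than draw them at random, and the dataset here is synthetic. The frozen layer turns raw inputs into features, and only a small logistic-regression classifier is trained on top of them.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pre-trained layer: in a real project these weights would be
# loaded from a trained model, not drawn at random (illustrative assumption).
pretrained_W = rng.normal(size=(5, 8)) / np.sqrt(5)
frozen_copy = pretrained_W.copy()  # kept only to show the weights never change


def extract_features(x):
    """Run inputs through the frozen layer (linear map followed by ReLU)."""
    return np.maximum(x @ pretrained_W, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(probs, y):
    """Mean binary cross-entropy, clipped for numerical safety."""
    probs = np.clip(probs, 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(probs) + (1 - y) * np.log(1 - probs)))


# Small synthetic target dataset (hypothetical): 200 samples, 5 raw inputs.
X = rng.normal(size=(200, 5))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

features = extract_features(X)        # computed once, then reused every epoch
head_w = np.zeros(features.shape[1])  # new classifier weights (trainable)
head_b = 0.0

initial_loss = log_loss(sigmoid(features @ head_w + head_b), y)

for _ in range(300):
    error = sigmoid(features @ head_w + head_b) - y
    # Gradient descent updates touch only the new classifier.
    head_w -= 0.1 * (features.T @ error) / len(y)
    head_b -= 0.1 * error.mean()

final_loss = log_loss(sigmoid(features @ head_w + head_b), y)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

Because the features are computed once and cached, each training step only involves the small classifier, which is where much of the cost saving in feature extraction comes from.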

Model Fine-tuning

Model fine-tuning involves adjusting the weights of certain layers in a pre-trained model to better fit new data and tasks. During this process, the base layers retain knowledge from the source dataset, while the final layers are fine-tuned so the model can learn features specific to the new task.

This is one of the key transfer learning techniques, helping the model improve performance without training the entire model from scratch, especially when labeled data is limited.

Application in Different Tasks

Transfer learning is widely applied across various AI fields:

- Natural Language Processing (NLP): Using models like BERT and GPT for text classification, question answering, sentiment analysis, and text generation.

- Computer Vision: Applying models such as ResNet, EfficientNet, or MobileNet for object recognition, image classification, face detection, or medical image analysis.

- Other fields: Speech recognition, time series analysis, financial data prediction, and many other AI tasks.


Key Benefits Of Using Transfer Learning In Machine Learning

Using transfer learning in machine learning offers significant benefits for AI projects. Here are the main advantages that this technique provides:

Reduced Computational Cost

One of the most notable benefits of transfer learning in machine learning is the reduction in computational cost. By using pre-trained models, there is no need to train a model from scratch for each new task. Instead, the model can leverage knowledge learned from previous tasks and only fine-tune the necessary layers.

A study in the manufacturing sector showed that applying transfer learning improved overall accuracy by up to 88% while reducing computational cost and training time by 56% compared to traditional learning methods.

Solve Limited Data Problems

A major challenge in machine learning is the lack of labeled data. Transfer learning addresses this problem by reusing knowledge from pre-trained models trained on large datasets.

For example, when classifying medical images with a limited number of labeled samples, instead of training a model from scratch, the model can use features learned from ImageNet and fine-tune them for the new task.

Better Generalization

Another key advantage of transfer learning in machine learning is its ability to improve model generalization. Because the pre-trained model has learned from large and varied data sources, it does not merely memorize the training data but also captures general features, enabling it to perform well on unseen data.

For instance, NLP models like BERT or GPT can be fine-tuned on a new task while still leveraging previously learned language knowledge. Thanks to transfer learning, these models become more flexible and can effectively handle various AI tasks without the need for retraining from scratch.


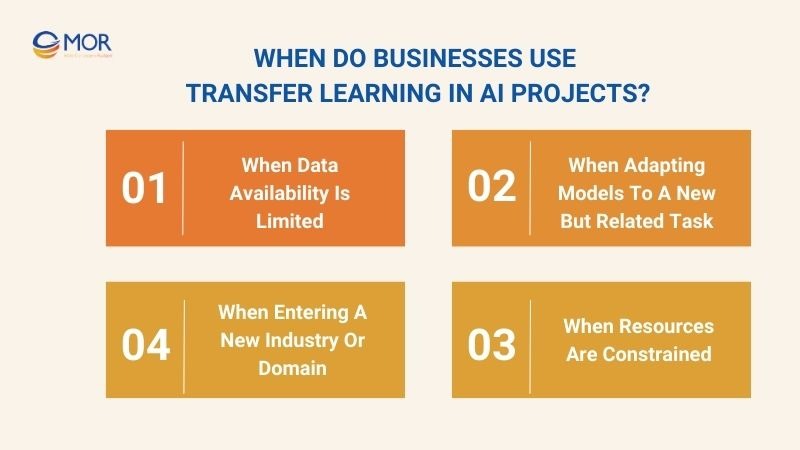

When Do Businesses Use Transfer Learning in AI Projects?

In AI projects, transfer learning is used by businesses in various real-world scenarios. Here are the common situations where this technique proves most effective:

When Data Availability Is Limited

In many AI projects, especially in healthcare or finance, labeled data is often limited. Transfer learning allows teams to leverage knowledge from models pre-trained on large datasets to identify important features.

This enables the model to maintain high accuracy even with small training datasets while reducing the risk of overfitting due to insufficient data. Using transfer learning in such cases helps businesses accelerate project timelines and maximize the effectiveness of limited data resources.

When Adapting Models To A New But Related Task

Businesses often need to apply an AI model to a new task that is related but differs in specifics. Transfer learning helps retain the general features already learned while fine-tuning only the necessary layers for the new task.

Example: A retail AI model trained to recognize beverage products can be fine-tuned to classify packaged foods. By leveraging previously learned features such as shape, color, and labeling, the model efficiently adapts to the new classification task with minimal additional data and training time.

When Resources Are Constrained

In large-scale AI projects, training models from scratch consumes significant computational resources, time, and costs. Transfer learning reduces these expenses by utilizing pre-trained layers and only fine-tuning the parts required for the new task.

Example: A startup developing an AI-powered speech recognition system may not have access to large-scale GPU clusters. They can use a pre-trained model like Wav2Vec and fine-tune it on a smaller dataset of domain-specific audio recordings.

When Entering A New Industry Or Domain

When expanding into a completely new industry or domain, available data and expertise may be limited. Transfer learning enables models to learn general patterns from a previous domain and apply them to a new one, minimizing trial-and-error risks and speeding up AI deployment.

The focus here is on cross-domain knowledge transfer rather than merely fine-tuning for a specific task.


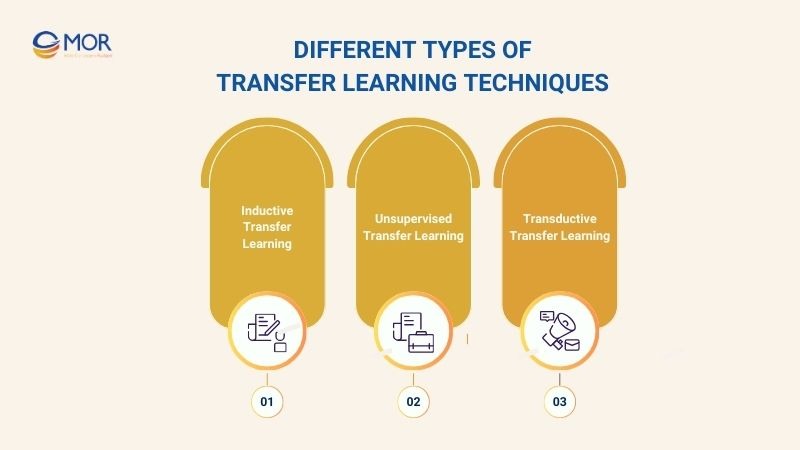

Different Types Of Transfer Learning Techniques

In the field of artificial intelligence, transfer learning offers various techniques that enable models to learn faster and more efficiently. Depending on the relationship between the source and target tasks, businesses can apply different types of transfer learning accordingly.

Inductive Transfer Learning

Inductive transfer learning occurs when the source task differs from the target task, but knowledge from the source can still be reused to improve model performance. This technique lets businesses use pre-trained models on large datasets for new, related tasks with different requirements.

Unsupervised Transfer Learning

Unsupervised transfer learning is applied when the target task has no labeled data available. This approach enables a model to learn general features from a source task—whether labeled or unlabeled—and transfer this knowledge to the target task.

In real-world scenarios, businesses can use unsupervised transfer learning to exploit raw data, cut down on labeling costs, and speed up AI deployment.

Transductive Transfer Learning

Transductive transfer learning is used when the source and target tasks are the same, but the data distributions differ. This method is particularly useful for companies applying a model trained on one dataset domain to another domain with different characteristics.

For instance, a speech recognition model trained on American English can be fine-tuned for British or Australian English, maintaining high recognition accuracy despite differences in pronunciation and speech patterns.


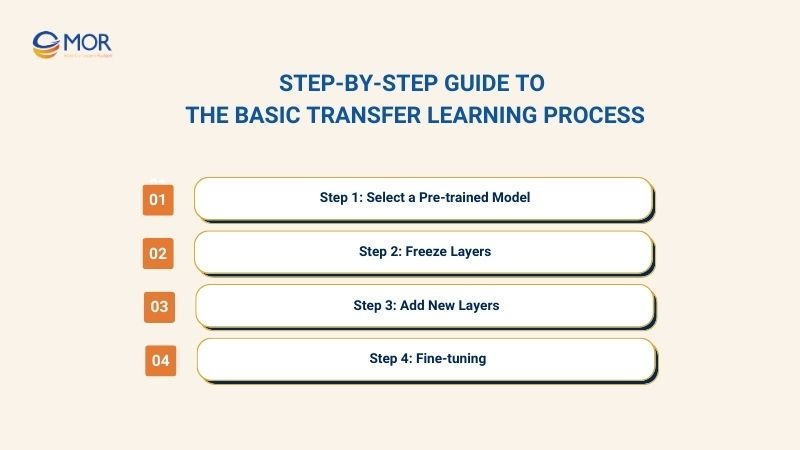

Step-by-Step Guide To The Basic Transfer Learning Process

In AI projects, transfer learning enables businesses to deploy models faster while saving computational resources. The basic process below walks through each step so that teams can optimize performance and reduce training time.

Step 1: Select a Pre-trained Model

To save time, reduce costs, and get better results, businesses should choose a suitable pre-trained model instead of building one from scratch. This lets you leverage existing knowledge that the model has already learned.

For example, suppose you want to build a system that recognizes different types of fruit from images. Instead of starting from zero, you can choose a model that was previously trained to recognize many different kinds of images.

This model has already learned how to identify basic features like shape, color, and texture, making the fine-tuning process for new fruits faster and more accurate.

Step 2: Freeze Layers

After selecting a pre-trained model, the next step in transfer learning is to freeze the learned layers to preserve the knowledge from the source task. This helps the model retain the fundamental features it has already learned while focusing on learning the new, specific features of the target task.

Freezing layers doesn't mean the model learns nothing new. It simply prevents the weights of the pre-trained layers from being updated, so training is limited to the remaining layers, which reduces the risk of overfitting and saves computational resources during retraining.

Step 3: Add New Layers

To adapt the model for a new task, you need to add new layers, such as dense (fully connected) layers that form a classifier. These new layers learn the specific features of your new task, while the frozen layers keep the core knowledge from the original training.

Adding new layers helps the model both leverage old knowledge and flexibly adapt to the target data and task, ultimately optimizing performance without having to train the entire model from scratch. This is a crucial step in a transfer learning workflow.
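Steps 2 and 3 can be sketched together in PyTorch. The example below is a hedged illustration, not a production recipe: the small convolutional `backbone` is a randomly initialised stand-in for a real pre-trained model (so the sketch runs offline), and `num_fruit_classes` is a hypothetical value borrowed from the fruit example in Step 1.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in for a pre-trained backbone. In a real project this would be loaded
# with trained weights; here it is randomly initialised (illustrative assumption).
backbone = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)

# Step 2: freeze the learned layers so their weights are not updated.
for param in backbone.parameters():
    param.requires_grad = False

# Step 3: add new trainable layers for the target task.
num_fruit_classes = 4  # hypothetical number of target classes
head = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, num_fruit_classes))
model = nn.Sequential(backbone, head)

# Only parameters that still require gradients are given to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=1e-3)

# One training step on a dummy batch to show which weights move.
images = torch.randn(6, 3, 32, 32)
labels = torch.randint(0, num_fruit_classes, (6,))
backbone_before = backbone[0].weight.detach().clone()
head_bias_before = head[-1].bias.detach().clone()

loss = nn.functional.cross_entropy(model(images), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()

print("backbone changed:", not torch.equal(backbone_before, backbone[0].weight))
print("head changed:", not torch.equal(head_bias_before, head[-1].bias))
```

Passing only the trainable parameters to the optimizer is a design choice that makes the frozen/trainable split explicit and avoids keeping optimizer state for weights that never change.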

Step 4: Fine-tuning

Finally, the model needs to be fine-tuned on the target task data to adjust the weights accordingly.

- If the source and target tasks are similar: the entire model can be fine-tuned.

- If the tasks are different: fine-tune only the higher-level layers and keep the lower layers frozen.

Fine-tuning is the key step that allows a model to achieve high performance on new data without being trained from scratch.
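The choice of which layers to fine-tune can be sketched in PyTorch as follows. The three-part stand-in network, the helper name `configure_fine_tuning`, and the learning rates are assumptions for illustration. Giving pre-trained layers a smaller learning rate than the new head is a common practice added here, not something the steps above prescribe.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in network split into lower layers, higher layers, and a task head.
# A real workflow would start from trained weights (illustrative assumption).
lower = nn.Sequential(nn.Linear(10, 16), nn.ReLU())
higher = nn.Sequential(nn.Linear(16, 16), nn.ReLU())
head = nn.Linear(16, 3)
model = nn.Sequential(lower, higher, head)


def configure_fine_tuning(tasks_are_similar):
    """Choose which parts to train and return a matching optimizer."""
    for param in lower.parameters():
        # Similar tasks: train everything. Different tasks: keep lower layers frozen.
        param.requires_grad = tasks_are_similar
    for part in (higher, head):
        for param in part.parameters():
            param.requires_grad = True

    groups = [
        # A smaller learning rate limits how far pre-trained layers drift.
        {"params": higher.parameters(), "lr": 1e-4},
        {"params": head.parameters(), "lr": 1e-3},
    ]
    if tasks_are_similar:
        groups.append({"params": lower.parameters(), "lr": 1e-5})
    return torch.optim.Adam(groups)


optimizer = configure_fine_tuning(tasks_are_similar=False)

inputs = torch.randn(32, 10)          # dummy target-task batch
targets = torch.randint(0, 3, (32,))
lower_before = lower[0].weight.detach().clone()

for _ in range(5):
    loss = nn.functional.cross_entropy(model(inputs), targets)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

print("lower layers unchanged:", torch.equal(lower_before, lower[0].weight))
```

Calling `configure_fine_tuning(tasks_are_similar=True)` instead would unfreeze the lower layers and add them to the optimizer as a third parameter group.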


Main Drawbacks Of Using Transfer Learning For AI Tasks

Despite its significant benefits, the transfer learning approach is not without its drawbacks. Here are the main limitations to consider:

Dependence on the Source Model

The effectiveness of transfer learning heavily depends on the quality and suitability of the pre-trained source model. If the source model is not compatible with the target task or the new type of data, applying transfer learning may result in poor performance, overfitting, or learning irrelevant features.

During AI deployment, businesses should carefully evaluate the source model by considering:

- Whether the data type and task scope of the source model are similar to the target task.

- Whether the model architecture can be scaled or fine-tuned effectively for the new task.

Lack of Standard Metrics for Task Similarity

A major drawback of transfer learning is the lack of standardized metrics to accurately measure the similarity between the source task and the target task. Without knowing which tasks are sufficiently related, businesses risk suboptimal knowledge transfer or even decreased model performance.

Research by Xuetong Wu et al. (2021) indicates that improper application of transfer learning can reduce learning performance instead of improving it, a phenomenon known as “negative transfer”.
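One practical safeguard is to always train a from-scratch baseline and compare it with the transferred model on the same validation set. The helper below is a minimal sketch of that check; the function names, the tolerance parameter, and the accuracy figures are hypothetical and do not come from the cited research.

```python
def transfer_gain(baseline_score, transfer_score):
    """Validation score of the transferred model minus that of the baseline."""
    return transfer_score - baseline_score


def is_negative_transfer(baseline_score, transfer_score, tolerance=0.0):
    """Flag negative transfer when the transferred model trails the baseline.

    `tolerance` lets small differences (for example, run-to-run noise) pass.
    """
    return transfer_gain(baseline_score, transfer_score) < -tolerance


# Hypothetical validation accuracies for two candidate source models.
baseline_accuracy = 0.82
candidates = {"related_source": 0.90, "unrelated_source": 0.78}

for name, accuracy in candidates.items():
    flagged = is_negative_transfer(baseline_accuracy, accuracy)
    print(f"{name}: negative transfer = {flagged}")
```

A check like this does not measure task similarity directly, but it catches the outcome that matters: a source model that makes the target task worse.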


Practical Applications Of Transfer Learning In AI And Machine Learning

In practice, transfer learning has become an essential solution in AI and machine learning projects. Applying transfer learning not only reduces training costs but also enhances the accuracy and efficiency of AI systems in real-world applications.

Image Recognition and Computer Vision

Transfer learning in computer vision allows models to quickly identify objects, patterns, and shapes in images. Instead of training from scratch, models can use previously learned visual features to adapt to new tasks like image classification, object detection, or medical image analysis, saving time and computational resources while maintaining high accuracy.

Real-world case: Detecting plant diseases from leaf images in agriculture.

Implementation flow:

- Select a pre-trained model: Choose a model trained on general image datasets to utilize its learned ability to recognize edges, colors, and textures.

- Preprocess data: Collect leaf images, resize and normalize them, label them by disease type, and split them into training, validation, and test sets.

- Fine-tune the model: Keep the feature extraction layers and replace the output layer with a classifier for specific disease categories.

- Train the model: Train only the new classifier layers with labeled images to reduce training time and resources.

- Deploy: Integrate into an app or monitoring system for farmers.
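The split in the preprocessing step can be sketched with the standard library alone. The example below is an illustration under stated assumptions: the function name `stratified_split`, the 70/15/15 proportions, and the label names are hypothetical. It splits per class so that rare disease types appear in every subset.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Return train/val/test index lists with each class spread across all three."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = round(len(indices) * val_fraction)
        n_test = round(len(indices) * test_fraction)
        val.extend(indices[:n_val])
        test.extend(indices[n_val:n_val + n_test])
        train.extend(indices[n_val + n_test:])
    return train, val, test


# Hypothetical labels for 100 leaf images: 60 healthy, 40 with rust disease.
labels = ["healthy"] * 60 + ["rust"] * 40
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Keeping the test indices fixed and untouched during fine-tuning is what makes the final accuracy figure trustworthy.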

Natural Language Processing (NLP)

Transfer learning in NLP allows models to understand language faster by leveraging knowledge from pre-trained models like BERT or GPT. Instead of training from scratch, the model can recognize syntax, semantics, and relationships between words. It can then be fine-tuned for specific tasks such as text classification, question answering, or sentiment analysis.

Real-world case: Classifying customer feedback on an e-commerce platform.

Implementation flow:

- Select a pre-trained model: Use a general language model to leverage its ability to understand grammar and context.

- Preprocess data: Collect customer feedback, remove special characters, normalize text, and split into training, validation, and test sets.

- Fine-tune the model: Keep language understanding layers and replace the output layer with a classifier for labels (positive, neutral, negative).

- Train the model: Train only the output layer using real feedback data, monitor validation accuracy, and adjust the learning rate if needed.

- Deploy: Integrate into the customer feedback management system. The model automatically classifies feedback, supports staff, and improves product decisions.
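The text preprocessing step can be sketched with Python's standard library. The function name `clean_feedback` and the set of characters kept are assumptions for illustration, and the character filter assumes English-language feedback. Pre-trained language models ship with their own tokenizers, so how much cleaning is actually needed depends on the chosen model.

```python
import re
import unicodedata


def clean_feedback(text):
    """Normalize a customer comment: unify Unicode, lowercase, drop special characters."""
    text = unicodedata.normalize("NFKC", text).lower()
    text = re.sub(r"<[^>]+>", " ", text)           # strip leftover HTML tags
    text = re.sub(r"[^a-z0-9\s.,!?']", " ", text)  # keep letters, digits, basic punctuation
    return re.sub(r"\s+", " ", text).strip()       # collapse repeated whitespace


raw = "  Great product!!! <br> Fast delivery :) #happy "
print(clean_feedback(raw))
```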

Speech Recognition and Audio Processing

Transfer learning in audio processing enables models to quickly learn voice features, intonation, and frequency patterns. Instead of training from scratch, models can leverage knowledge from general audio datasets to fine-tune for tasks like speech recognition, audio classification, or speech-to-text conversion.

Real-world case: Automated voice-based customer support system.

Implementation flow:

- Select a pre-trained model: Choose a general speech recognition model to utilize its ability to detect frequency, rhythm, and pronunciation patterns.

- Preprocess data: Collect voice recordings, remove noise, normalize volume and format, and split into training, validation, and test sets.

- Fine-tune the model: Keep feature extraction layers and replace the final layer with a classifier or speech-to-text module.

- Train the model: Train with real customer voice data and monitor conversion accuracy.

- Deploy: Integrate into a voice chatbot or call center system. The system automatically recognizes customer queries, reducing staff workload and improving customer experience.
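Volume normalization from the preprocessing step can be sketched with NumPy. Peak normalization is only one of several possible approaches and is chosen here as an assumption; the function name `peak_normalize` and the target peak of 0.9 are hypothetical.

```python
import numpy as np


def peak_normalize(waveform, target_peak=0.9):
    """Scale a waveform so its loudest sample has magnitude `target_peak`."""
    waveform = np.asarray(waveform, dtype=np.float32)
    if waveform.size == 0:
        return waveform
    peak = np.max(np.abs(waveform))
    if peak == 0:
        return waveform  # pure silence: nothing to scale
    return waveform * (target_peak / peak)


# Hypothetical short recording with a quiet signal.
recording = [0.1, -0.5, 0.25]
print(peak_normalize(recording))
```

Bringing recordings to a consistent level means the pre-trained model sees inputs closer to the range it was trained on.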


Emerging Trends And Future Directions Of Transfer Learning

In the rapidly evolving field of artificial intelligence, transfer learning helps optimize training costs and time, and opens up new trends for modern AI applications. Below, we explore the future directions of transfer learning.

Multimodal Transfer Learning

Multimodal transfer learning combines information from multiple types of data, such as images, text, and audio, to improve model performance. This approach enables the model to better understand context and relationships between different data types, enhancing prediction and classification accuracy.

Recent studies show that applying multimodal data integration can increase model accuracy by up to 15% compared to traditional single-modal learning methods.

Federated Transfer Learning

Federated transfer learning allows models to be trained across multiple devices or network nodes. The training happens without directly sharing data, which ensures privacy protection and compliance with data security regulations.

This method is particularly effective in applications like healthcare, banking, and IoT systems, where sensitive data cannot leave local environments.
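To illustrate how models can be combined without moving raw data, the sketch below averages model weights from several sites, weighted by how many samples each site trained on. Federated averaging is one common aggregation scheme and is used here as an assumption; the hospital weights and sample counts are hypothetical.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """Average per-client weight arrays, weighted by each client's sample count."""
    total = float(sum(client_sizes))
    averaged = np.zeros_like(client_weights[0], dtype=float)
    for weights, size in zip(client_weights, client_sizes):
        averaged += np.asarray(weights, dtype=float) * (size / total)
    return averaged


# Hypothetical fine-tuned weights from three hospitals; raw data stays local.
hospital_weights = [np.array([1.0, 2.0]), np.array([3.0, 4.0]), np.array([5.0, 6.0])]
hospital_sizes = [100, 100, 200]

global_weights = federated_average(hospital_weights, hospital_sizes)
print(global_weights)
```

Only the weight arrays leave each site, which is what allows the shared model to improve while sensitive records remain in their local environment.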

Lifelong Transfer Learning

Lifelong transfer learning enables models to continuously learn from multiple tasks without forgetting previously acquired knowledge. This approach helps maintain and improve model performance when encountering new tasks, without needing to retrain from scratch.

Research indicates that applying lifelong learning methods can reduce catastrophic forgetting and improve average model accuracy by up to 8% during continuous learning processes.

Zero-shot and Few-shot Learning

Zero-shot learning lets models handle a task without seeing any labeled examples of that task. Few-shot learning allows models to learn from only a small number of examples.

Both approaches expand the capabilities of models and allow applications in multiple domains without requiring large amounts of training data.
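A simple way to picture few-shot learning is a nearest-prototype classifier on top of embeddings from a frozen pre-trained encoder. The sketch below is an illustration under assumptions: the two-dimensional embeddings are made up, and the function names are hypothetical. Each class is represented by the average of its few examples, and a new input is assigned to the closest average.

```python
import numpy as np


def class_prototypes(embeddings, labels):
    """Average the support embeddings of each class into one prototype vector."""
    classes = sorted(set(labels))
    label_array = np.array(labels)
    prototypes = np.stack([embeddings[label_array == c].mean(axis=0) for c in classes])
    return classes, prototypes


def predict(query, classes, prototypes):
    """Assign the query embedding to the class with the nearest prototype."""
    distances = np.linalg.norm(prototypes - query, axis=1)
    return classes[int(np.argmin(distances))]


# Hypothetical embeddings from a frozen encoder: two labeled examples per class.
support = np.array([[0.9, 0.1], [1.1, -0.1], [-1.0, 0.2], [-0.8, 0.0]])
support_labels = ["positive", "positive", "negative", "negative"]

classes, prototypes = class_prototypes(support, support_labels)
print(predict(np.array([0.7, 0.3]), classes, prototypes))
```

No weights are trained here at all: the pre-trained encoder does the heavy lifting, and the handful of labeled examples only positions the class prototypes.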


In Conclusion

Harnessing the power of transfer learning can give your business a competitive edge by accelerating AI deployment and improving model performance. Ready to elevate your AI strategy? Contact MOR Software today to explore how transfer learning can drive smarter, faster, and more efficient AI solutions for your business.

MOR SOFTWARE

Frequently Asked Questions (FAQs)

What are the 3 types of transfer learning?

There are 3 types of transfer learning:

- Inductive transfer learning

- Unsupervised transfer learning

- Transductive transfer learning

Why is transfer learning important?

Transfer learning is important because it allows models to reuse knowledge from previous tasks, reducing training time and improving performance on new tasks.

Does transfer learning improve accuracy?

Transfer learning can improve accuracy when the pre-trained model is relevant to the target task and fine-tuning is performed on the new dataset.

What are the challenges of transfer learning?

Challenges include selecting the right source model, managing differences in data distribution, and avoiding negative transfer that can decrease performance.

What are the disadvantages of transfer learning?

Disadvantages include dependence on the source model, limited applicability when tasks differ, and difficulty in measuring task similarity.

Can transfer learning introduce bias?

Transfer learning can introduce bias if the pre-trained model reflects biased patterns or the source data is unbalanced.

When should you not use transfer learning?

Do not use transfer learning if the source and target tasks are too dissimilar or if there is sufficient labeled data to train a model from scratch.
