AI Tech Stack Explained: Complete Guide for Modern Enterprises

A well-built AI tech stack determines how fast and accurately your business can turn data into decisions. Yet many enterprises still struggle with fragmented tools, poor scalability, and rising costs. This guide from MOR Software breaks down every layer of the modern architecture to help you design smarter, leaner, and future-ready AI systems.

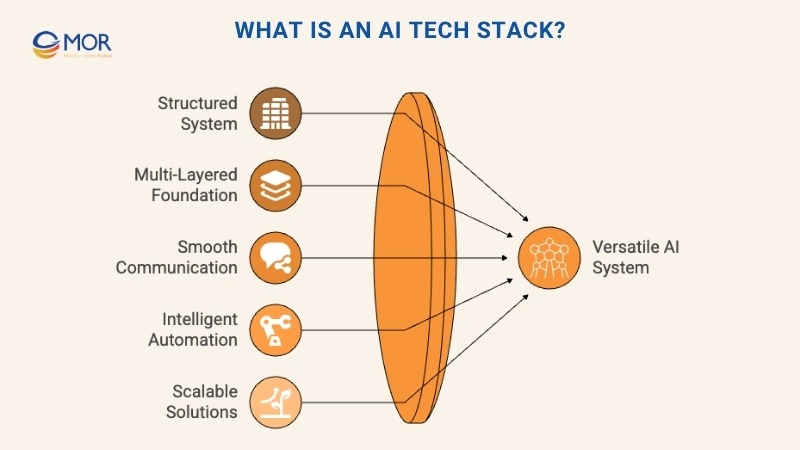

What Is An AI Tech Stack?

An AI tech stack is the complete set of technologies, tools, and frameworks that power the process of building and running artificial intelligence systems. It works like a multi-layered foundation in which each layer handles a specific stage of the AI workflow: data collection, model training, deployment, and ongoing improvement. A complete AI stack combines software and hardware resources and ensures that every component communicates smoothly to support automation and intelligence across business operations. According to IBM Newsroom, around 42% of large companies say they already use AI as part of their business operations.

In practice, this structured system helps teams manage complex workflows efficiently, from raw data processing to real-time decision-making. According to JetBrains, almost half of data professionals spend about 30% of their time preparing data, a task that a well-designed stack can greatly simplify. A shared stack also aligns data scientists, engineers, and business teams, turning AI initiatives into measurable outcomes.

For enterprises investing in innovation, a well-designed AI tech stack becomes more than infrastructure: it’s the engine behind scalable and reliable AI-driven solutions. According to McKinsey & Company, generative AI could contribute between $2.6 trillion and $4.4 trillion to the global economy every year, showing how powerful this technology can be for growth.

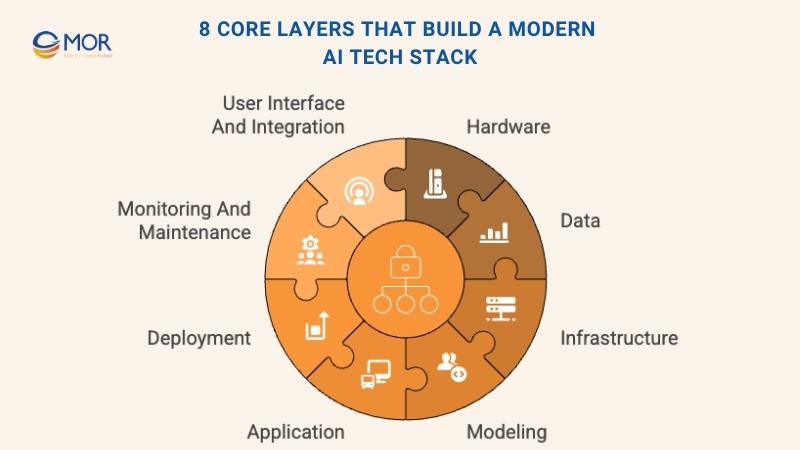

8 Core Layers That Build A Modern AI Tech Stack

Creating a functional AI ecosystem requires several interconnected layers, each responsible for specific tasks that drive model performance and scalability. Together, these layers form the backbone of a reliable AI tech stack, supporting everything from data ingestion to real-world deployment.

Let’s break down how each layer contributes to that foundation.

Layer 1: Hardware

Every modern AI tech stack starts with hardware. CPUs handle general processing tasks, but as data volumes and model complexity grow, more specialized hardware takes the lead. GPUs step in to accelerate parallel computations, especially during training phases, while TPUs, Google’s purpose-built chips, push performance further by optimizing machine learning workloads.

Data also needs a place to live. SSDs deliver faster access speeds for smaller datasets, whereas HDDs still win on capacity for large-scale projects. When storage demands go beyond a single server, distributed or cloud-based systems like HDFS or Amazon S3 come into play. They provide scalable and secure environments for handling massive datasets, a critical base for an AI tech stack that supports continuous innovation.

Layer 2: Data

The data layer is the foundation of every AI tech stack, responsible for collecting, storing, and refining the information that fuels AI models. It connects multiple sources to ensure data is both rich and reliable for analysis and training.

Data can come from many directions. IoT devices and sensors in factories, healthcare equipment, and smart homes capture real-world inputs. APIs bring in information from digital platforms, while web scraping tools like BeautifulSoup extract online data for research or sentiment analysis.

Once collected, data must be organized. Structured data fits neatly into systems like MySQL, while unstructured information works better in NoSQL databases such as MongoDB. For large-scale storage, enterprises often use data lakes that keep vast, raw datasets ready for future use.

Before models can learn from it, data needs cleaning and transformation. ETL (Extract, Transform, Load) processes prepare it for analysis by removing errors and standardizing formats. Tools like Apache NiFi and Talend streamline this stage, helping teams build efficient, automated data pipelines that strengthen the overall AI stack.
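The ETL pattern can be illustrated with a minimal Python sketch that uses only the standard library. It is not how NiFi or Talend work internally. It assumes a hypothetical CSV export of sensor readings with `device_id`, `timestamp`, and `temp_c` columns:

- The extract step parses the raw rows.
- The transform step drops malformed records and standardizes formats.
- The load step writes the result into an in-memory SQLite table.

```python
import csv
import io
import sqlite3
from datetime import datetime, timezone

# Hypothetical raw export: inconsistent casing, a non-numeric reading, a missing value.
RAW_CSV = """device_id,timestamp,temp_c
 Sensor-A ,2024-05-01T10:00:00,21.5
sensor-b,2024-05-01T10:05:00,not_a_number
SENSOR-A,2024-05-01T10:10:00,22.1
sensor-c,2024-05-01T10:15:00,
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop malformed rows and standardize formats."""
    clean = []
    for row in rows:
        try:
            temp = float(row["temp_c"])
        except (TypeError, ValueError):
            continue  # skip rows with missing or non-numeric readings
        # Assumes the source timestamps are already in UTC.
        ts = datetime.fromisoformat(row["timestamp"]).replace(tzinfo=timezone.utc)
        clean.append((
            row["device_id"].strip().lower(),  # normalize device IDs
            ts.isoformat(),                     # store timestamps as ISO 8601
            round(temp, 2),
        ))
    return clean

def load(records, conn):
    """Load: write cleaned records into a SQL table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings (device_id TEXT, ts TEXT, temp_c REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT device_id, temp_c FROM readings ORDER BY ts").fetchall())
# [('sensor-a', 21.5), ('sensor-a', 22.1)]
```

Keeping the three steps as separate functions makes each one testable on its own. Dedicated pipeline tools add scheduling, retries, and monitoring around the same structure.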

Layer 3: Infrastructure

The infrastructure layer forms the operational core of an AI tech stack, providing the computing power and environment where AI models are developed, trained, and deployed. It determines how efficiently data moves, how models scale, and how securely systems operate.

Cloud platforms like AWS, Google Cloud, and Microsoft Azure are the go-to choices for most enterprises, offering flexible compute resources, elastic storage, and built-in AI services that adapt to changing workloads. Their pay-as-you-go model keeps development agile and cost-effective.

For organizations with strict data protection or compliance requirements, on-premises setups remain essential. Private data centers give full control over hardware and governance policies. Many companies now blend both approaches into hybrid architectures, combining cloud scalability with on-premises control. This balance ensures a resilient AI tech stack capable of supporting continuous innovation without compromising performance or compliance.

Layer 4: Modeling

The modeling layer is where intelligence truly takes shape within an AI tech stack. It’s focused on designing, training, and refining models that can recognize patterns, make predictions, and automate decision-making. Frameworks like TensorFlow and PyTorch simplify this process by offering pre-built libraries for architecture design, algorithm tuning, and hyperparameter optimization, making experimentation faster and more consistent.

Training these models demands substantial computing power. Many teams use managed cloud environments such as Amazon SageMaker or Google Cloud’s Vertex AI (the successor to Google AI Platform) to access scalable resources for heavy workloads. These managed services handle the intensive demands of iterative training, allowing developers to focus on accuracy and performance.

Organizations with high security or compliance needs sometimes choose on-premises infrastructure instead, gaining full control over their data and hardware. Each setup offers distinct benefits: cloud platforms deliver speed and scalability, while dedicated environments support tighter governance and predictable long-term costs. This stage often sets the foundation for more advanced architectures like the generative AI tech stack, where large-scale models learn to create new and adaptive outputs.

Layer 5: Application

The application layer brings the AI tech stack into action, turning trained models into practical solutions that deliver real business value. This stage connects intelligence with everyday tools and systems, allowing AI to influence decisions, automate tasks, and improve user experiences.

Once a model is validated, it’s integrated into applications through APIs or embedded directly into software systems. This setup enables seamless interaction between the model and other digital components, whether for analytics, automation, or personalized content delivery.

Common use cases include recommendation engines in retail platforms, fraud detection in finance, or predictive maintenance in manufacturing. Each example shows how well-integrated AI can reshape workflows and outcomes. In essence, this layer transforms the AI tech stack from a technical framework into a driver of smarter, more responsive business operations.

Layer 6: Deployment

The deployment layer is where AI models transition from experimentation to production, enabling real-world performance and measurable outcomes. It’s the stage that connects your trained models to live systems, allowing them to process data, make predictions, and deliver insights at scale.

To serve models effectively, businesses often combine real-time APIs for instant predictions with batch processing for scheduled analytics or reporting. Containerization tools like Docker simplify deployment by packaging models and their dependencies into portable units, while Kubernetes orchestrates these containers to handle scaling, load balancing, and updates seamlessly.
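Real-time and batch serving often come down to wrapping one prediction function in two entry points. The sketch below uses a hypothetical logistic churn-scoring model with made-up weights:

- `handle_request` stands in for the body of an HTTP endpoint that accepts and returns JSON.
- `run_batch` stands in for a scheduled job.

In a containerized setup, each entry point would typically ship as its own Docker image. Kubernetes could then scale the real-time service independently of the batch job.

```python
import json
import math

# Hypothetical model parameters; in practice these are loaded from a model artifact.
WEIGHTS = {"tenure_months": -0.05, "support_tickets": 0.4}
BIAS = -1.0

def predict(features):
    """Return a churn probability from a simple logistic model."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

def handle_request(body):
    """Real-time path: one JSON request in, one JSON response out."""
    features = json.loads(body)
    return json.dumps({"churn_probability": round(predict(features), 4)})

def run_batch(records):
    """Batch path: score many records at once, e.g. in a nightly job."""
    return [(r["customer_id"], predict(r)) for r in records]

print(handle_request('{"tenure_months": 0, "support_tickets": 0}'))
# {"churn_probability": 0.2689}
print(run_batch([
    {"customer_id": "c1", "tenure_months": 24, "support_tickets": 0},
    {"customer_id": "c2", "tenure_months": 1, "support_tickets": 5},
]))
```

Sharing a single `predict` function keeps both paths consistent. A model update then changes real-time and batch results together.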

Modern AI tech stack setups also integrate Continuous Integration and Continuous Deployment (CI/CD) pipelines. These automated workflows test, validate, and roll out new model versions, ensuring stability, accuracy, and rapid iteration. The result is a production-ready environment that keeps AI systems reliable and responsive to changing business demands.
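A key step in such a pipeline is an automated gate that decides whether a candidate model may replace the one in production. The sketch below shows one possible policy. It assumes the pipeline has already computed evaluation metrics on a held-out dataset. The metric names, the thresholds, and the `should_promote` function are hypothetical and would be tuned per use case. A CI job could call it and fail the build, blocking rollout, when it returns `False`.

```python
from dataclasses import dataclass

@dataclass
class EvalReport:
    accuracy: float
    p95_latency_ms: float

# Hypothetical release criteria; real thresholds depend on the use case.
MIN_ACCURACY = 0.80
MAX_LATENCY_MS = 200.0
MIN_IMPROVEMENT = 0.005  # candidate must beat production by at least 0.5 points

def should_promote(candidate: EvalReport, production: EvalReport) -> bool:
    """Return True only if the candidate passes every release check."""
    checks = [
        candidate.accuracy >= MIN_ACCURACY,                           # absolute quality floor
        candidate.p95_latency_ms <= MAX_LATENCY_MS,                   # serving latency budget
        candidate.accuracy - production.accuracy >= MIN_IMPROVEMENT,  # measurable gain
    ]
    return all(checks)

prod = EvalReport(accuracy=0.84, p95_latency_ms=120.0)
print(should_promote(EvalReport(0.86, 130.0), prod))   # True
print(should_promote(EvalReport(0.845, 250.0), prod))  # False: too slow
```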

Layer 7: Monitoring and Maintenance

The monitoring and maintenance layer ensures that every model within an AI tech stack continues to perform reliably after deployment. This stage focuses on tracking model behavior, detecting issues early, and making adjustments to keep systems accurate and compliant.

Performance metrics such as accuracy, precision, and recall measure how well a model performs over time. Model drift occurs when real-world data shifts away from what the model was trained on and prediction quality drops; when it does, continuous tracking and retraining are essential. Logging tools and performance dashboards provide deeper visibility, helping teams analyze usage patterns and identify potential errors.
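One common way to quantify drift in a single input feature is the Population Stability Index (PSI). PSI compares how values fall into bins in the training data versus recent production data, summing (actual share − expected share) × ln(actual share / expected share) over the bins. The sketch below uses only the standard library. The bin edges, the sample values, and the 0.2 alert threshold are illustrative assumptions; 0.2 is a widely used rule of thumb, not a universal standard.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges):
    """Fraction of values falling into each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero for empty bins
        total += (a - e) * math.log(a / e)
    return total

EDGES = [20, 40, 60]  # hypothetical bins for a feature such as customer age
training = [25, 30, 35, 45, 50, 55, 65, 70]
recent = [62, 66, 68, 70, 72, 75, 45, 50]

score = psi(training, recent, EDGES)
print(round(score, 3), "drift" if score > 0.2 else "stable")
```

Identical distributions give a PSI of zero. Here most recent values have moved into the top bin, so the score lands well above the threshold, which could trigger an alert or a retraining job.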

Security and compliance are equally important. Data encryption safeguards sensitive information, while strict adherence to privacy regulations like GDPR keeps operations within legal and ethical boundaries. In short, consistent monitoring turns a deployed model into a dependable, evolving asset within the broader AI tech stack.

Layer 8: User Interface and Integration

The user interface and integration layer connects the AI tech stack to people and systems, turning data outputs into accessible insights and real-time interactions. It focuses on how users, developers, and applications communicate with AI models to make the technology functional and user-friendly.

| Tool | Description | Example Use Case |
| --- | --- | --- |
| APIs | Serve as communication bridges between systems, enabling easy integration of AI features without building models from scratch. | Use an API to connect a sentiment analysis model to a customer feedback platform. |
| SDKs | Provide ready-to-use libraries and documentation that simplify embedding AI into software applications. | Use an SDK to add voice recognition or image analysis to a mobile app. |
| Dashboards | Offer interactive visualizations for tracking model outcomes, performance metrics, or business KPIs in real time. | Use a dashboard to monitor predictive analytics for sales or inventory planning. |
| Libraries | Include visualization tools like Matplotlib, Plotly, and D3.js that turn raw model outputs into clear visual insights. | Use libraries to present AI-driven forecasts or pattern recognition results. |

Together, these tools ensure that an AI tech stack isn’t just powerful behind the scenes but also intuitive and accessible for end users and enterprise systems alike.

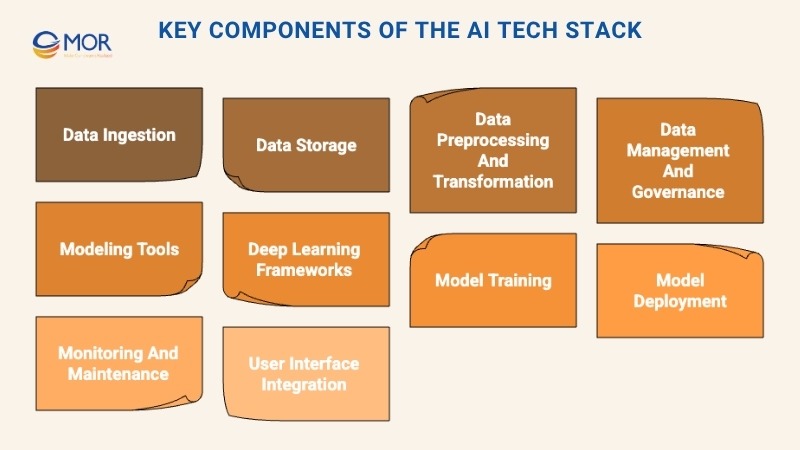

Key Components Of The AI Tech Stack

The AI tech stack is built on core components that handle every stage of the AI lifecycle, from collecting raw information to deploying trained models. These building blocks ensure that data flows smoothly, models train efficiently, and insights are delivered accurately. Each part plays a specific role in creating a stable, scalable environment for innovation. Let’s look closer at the essential elements driving modern AI development.

Data Ingestion

Data ingestion is where the process begins. It involves capturing information from various internal and external sources, including CRM systems, IoT devices, customer logs, and third-party APIs. For enterprises, reliable ingestion frameworks ensure a continuous, high-quality data flow into machine learning pipelines. This steady stream of structured and unstructured inputs lays the groundwork for smarter analytics and informed decision-making within an AI tech stack.

Data Storage

Once collected, data must be securely stored for easy retrieval and processing. SQL databases handle structured datasets effectively, while NoSQL systems like MongoDB are ideal for handling unstructured or semi-structured information. Scalable cloud-based repositories further enhance flexibility and performance, ensuring teams can access and query large datasets efficiently. In any AI tech stack, a well-designed storage architecture minimizes latency, supports growth, and keeps analytics operations running seamlessly.

Data Preprocessing and Transformation

Data preprocessing is where raw inputs are refined into structured, usable formats that AI models can learn from effectively. This stage focuses on cleaning, normalizing, and labeling data to remove inconsistencies and errors. Quality preprocessing leads to better model accuracy and faster iteration cycles. When data is properly prepared, predictions become more consistent and meaningful, improving decision-making and increasing ROI from AI initiatives. Within any AI stack, this step bridges the gap between messy data and actionable intelligence.
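Two of the most common preprocessing steps are filling missing values and normalizing numeric features to a common scale. The sketch below handles a single numeric feature using mean imputation plus z-score standardization. `MeanStdScaler` is a hypothetical stand-in, not any library’s API. The key design point is that the statistics are computed on training data only and then reused unchanged on new data. That way the model sees inputs transformed the same way at training and prediction time.

```python
from statistics import mean, pstdev

class MeanStdScaler:
    """Mean-impute missing values, then rescale to zero mean and unit variance.

    A minimal illustration for one numeric feature.
    """

    def fit(self, values):
        observed = [v for v in values if v is not None]
        self.mean_ = mean(observed)
        self.std_ = pstdev(observed) or 1.0  # guard against constant features
        return self

    def transform(self, values):
        filled = [self.mean_ if v is None else v for v in values]
        return [(v - self.mean_) / self.std_ for v in filled]

train = [10.0, 20.0, None, 30.0]
scaler = MeanStdScaler().fit(train)
print(scaler.transform(train))   # the missing value becomes 0.0 after scaling
print(scaler.transform([40.0]))  # new data reuses the training statistics
```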

Data Management and Governance

Data management and governance safeguard the reliability, compliance, and security of information across the AI tech stack. Strong governance frameworks ensure that only clean, validated data enters the system, while enforcing privacy standards and auditability. This is especially critical for industries like finance or healthcare, where regulatory oversight is strict.

By maintaining data lineage and version control, organizations can trace how information evolves over time. Effective management practices help teams trust their analytics, protect customer data, and align AI outcomes with long-term business goals, strengthening both accountability and confidence in AI-driven operations.

Modeling Tools

Modeling tools are the engines that turn data into intelligence. They include frameworks and algorithms that help teams build predictive and analytical models efficiently. Libraries like Scikit-learn make it easy to apply machine learning methods that reveal patterns in customer behavior or market activity. These tools reduce technical barriers, helping analysts and developers collaborate smoothly on model creation.

Within an AI tech stack, modeling tools play a central role in speeding up experimentation and deployment. They empower organizations to turn massive datasets into actionable insights that support data-driven strategies and strengthen business agility.

Deep Learning Frameworks

Deep learning frameworks like TensorFlow and PyTorch bring advanced AI capabilities to life. They make it possible to design and train highly complex models for image recognition, voice processing, and natural language understanding. These frameworks handle large-scale computations with precision, transforming raw information into business-ready intelligence.

For companies scaling innovation, deep learning sits at the heart of the AI tech stack. It fuels automation, personalization, and creative applications that define modern AI systems. By adopting these frameworks, enterprises unlock the depth and flexibility needed to build smarter, more adaptive AI solutions.

Model Training

The model training stage is where data meets computation. Here, algorithms learn from curated datasets, and parameters are adjusted to improve prediction accuracy. Tools for hyperparameter tuning and optimization help fine-tune models until they reach the desired performance level. This process ensures models remain relevant and reliable across different business scenarios.
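The sketch below illustrates the train-then-tune loop on a deliberately tiny problem. It fits a one-feature linear model by gradient descent and grid-searches the learning rate and epoch count. It keeps whichever setting gives the lowest error on a held-out validation set. The data and the search grid are hypothetical. Training frameworks and tuning tools apply the same idea at much larger scale, often replacing exhaustive grids with random or Bayesian search.

```python
from itertools import product

# Hypothetical data generated from y = 2x + 1.
train = [(x, 2 * x + 1) for x in range(0, 8)]
valid = [(x, 2 * x + 1) for x in range(8, 10)]

def fit(data, lr, epochs):
    """Fit y = w*x + b with batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(params, data):
    """Mean squared error of the fitted line on a dataset."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

grid = {"lr": [0.001, 0.01, 0.05], "epochs": [50, 500]}
results = []
for lr, epochs in product(grid["lr"], grid["epochs"]):
    params = fit(train, lr, epochs)
    results.append((mse(params, valid), lr, epochs, params))  # score on held-out data

best_loss, best_lr, best_epochs, (w, b) = min(results)
print(f"best lr={best_lr}, epochs={best_epochs}, w={w:.2f}, b={b:.2f}")
```

Scoring each candidate on data it was not trained on is what keeps the search honest. Choosing hyperparameters by training error alone would reward overfitting.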

In a mature AI tech stack, well-structured training environments support experimentation, validation, and iteration. When businesses invest in optimized training pipelines, they not only improve precision but also strengthen the strategic value of AI initiatives, turning data into dependable, high-impact insights.

Model Deployment

Model deployment brings AI out of the lab and into everyday business operations. This phase uses automation platforms, APIs, and version-controlled workflows to move trained models into production environments quickly and safely. With MLOps practices, deployment becomes repeatable, traceable, and easy to scale.

An efficient deployment strategy within the AI tech stack shortens time-to-value, ensuring insights reach decision-makers faster. Automated pipelines keep systems up to date, while continuous integration practices minimize downtime. Ultimately, this phase transforms technical models into accessible, AI-driven tools that deliver measurable results across departments.

Monitoring and Maintenance

Monitoring and maintenance ensure that every deployed model within an AI tech stack continues to perform as intended. Real-time tracking systems evaluate model accuracy, detect drift, and flag anomalies before they affect business outcomes. Automated alerts and performance dashboards make it easier to respond quickly and maintain consistency in predictions.

By scheduling regular updates and retraining cycles, organizations extend the lifespan of their models and keep them aligned with evolving data trends. This proactive approach helps preserve the accuracy, reliability, and strategic value of AI investments, ensuring that each solution remains relevant across business operations.

User Interface (UI) Integration

UI integration serves as the connection point between complex algorithms and human interaction. It transforms backend intelligence into clear, actionable insights through intuitive visuals, dashboards, and interactive tools. Whether surfaced in business applications, internal platforms, or through APIs, this layer makes AI accessible and practical.

Within a modern AI tech stack, UI integration enhances the usability of AI-powered systems. It allows decision-makers and customers to interpret outcomes instantly, interact with predictive features, and apply insights to real-world contexts, turning advanced analytics into a natural part of daily workflows.

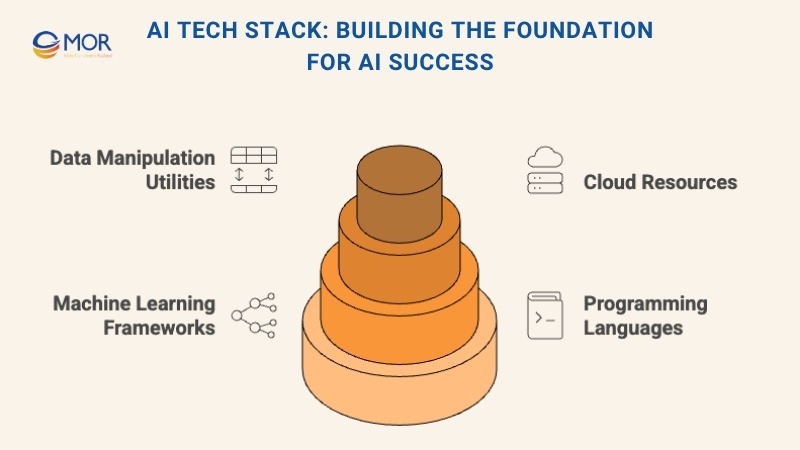

AI Tech Stack: Building The Foundation For AI Success

A well-structured AI tech stack is the foundation of every successful AI initiative. In an era where data and automation shape competitiveness, each layer, from programming tools to infrastructure, contributes to building scalable, adaptable, and intelligent systems. Below is a closer look at the components that define an effective AI tech stack for modern enterprises.

Programming Languages: The Functional Foundation

Python remains the most widely used language thanks to its simplicity and extensive libraries for data science and AI development. R continues to serve well for statistical modeling, while Julia offers high-performance capabilities for numerical computing. Choosing the right combination ensures better collaboration between teams and supports efficient, maintainable AI solutions across business domains.

Machine Learning Frameworks: The Backbone of AI Models

Frameworks like TensorFlow, PyTorch, and Keras streamline the creation and optimization of models, handling everything from natural language processing to image recognition. These frameworks come with pre-built architectures and customization options, allowing businesses to align AI performance directly with strategic goals and market needs.

Cloud Resources: The Scalable Infrastructure

Platforms such as AWS, Google Cloud, and Microsoft Azure deliver the flexibility to scale AI workloads effortlessly. Their managed services provide reliable environments for experimentation, training, and deployment. With pay-as-you-go options, teams can control costs while maintaining high availability and performance across global operations.

Data Manipulation and Processing Utilities: The Key to Clean Data

Data preparation tools like Apache Spark and Hadoop play a pivotal role in transforming raw datasets into model-ready formats. These utilities clean, normalize, and structure information to maintain quality and relevance. When integrated properly within an AI tech stack, they enhance model precision, accelerate insights, and drive more accurate predictions for business growth.

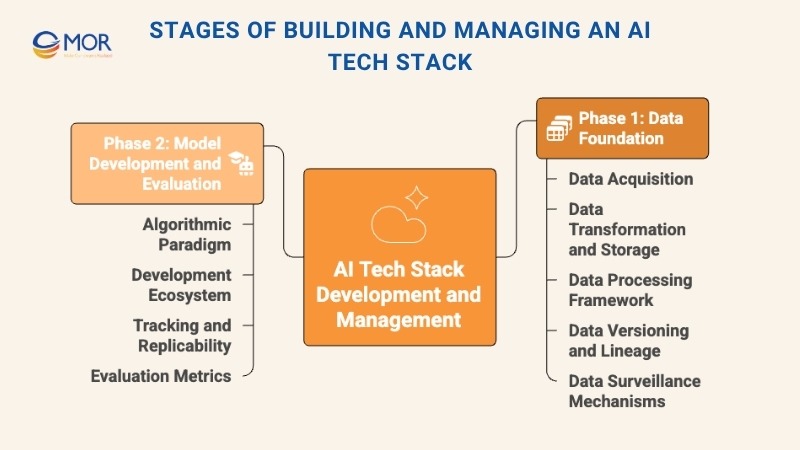

Stages Of Building And Managing An AI Tech Stack

Building and managing an AI tech stack requires a structured, step-by-step approach that aligns data, models, and infrastructure. Each stage plays a vital role in turning raw information into intelligent, scalable business solutions.

Phase 1: Data Foundation

This initial phase establishes the groundwork for a reliable AI tech stack, focusing on data acquisition, processing, and governance. It ensures that AI systems learn from clean, structured, and trustworthy data, a prerequisite for accurate and scalable outcomes.

Stage 1: Data Acquisition

Data collection begins with capturing information from multiple sources, such as IoT devices, enterprise databases, and third-party APIs. This process may include annotation, enrichment, or synthetic data generation to create diverse datasets that better represent real-world conditions. Effective sourcing builds a solid base for the later stages of the AI stack.

Stage 2: Data Transformation and Storage

Once collected, data goes through transformation pipelines that clean, normalize, and standardize formats for consistency. Depending on the scale and structure, organizations use SQL, NoSQL, or cloud storage systems to manage this information securely. These platforms make data easily accessible for model training while meeting compliance standards.

Stage 3: Data Processing Framework

In this stage, raw data becomes actionable. Using frameworks for analytics and feature engineering, teams refine data attributes to enhance model accuracy. Removing noise and irrelevant values improves learning efficiency, enabling the AI tech stack to focus on quality over quantity.

Stage 4: Data Versioning and Lineage

Tracking how data changes over time is crucial for transparency and compliance. Lineage tools record every transformation, while versioning ensures reproducibility. This visibility helps teams verify results and maintain control over data used in different experiments and deployments.
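A lightweight way to get reproducible dataset versions is to identify each version by a hash of its contents. Each transformation then records which parent version it started from and which child version it produced. The sketch below is a hypothetical in-memory lineage log, not the API of any specific tool. Dedicated data-versioning and lineage systems apply the same content-addressing idea to files and tables at scale.

```python
import hashlib
import json

def dataset_version(rows):
    """Content hash of a dataset: identical data always yields the same ID."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]

lineage = []  # hypothetical log of (parent_version, step_name, child_version)

def apply_step(rows, step_name, fn):
    """Run a transformation and record how the new version was derived."""
    parent = dataset_version(rows)
    result = fn(rows)
    lineage.append((parent, step_name, dataset_version(result)))
    return result

def drop_nulls(rows):
    return [r for r in rows if r["amount"] is not None]

def cast_amount(rows):
    return [{**r, "amount": float(r["amount"])} for r in rows]

raw = [{"id": 1, "amount": "10.5"}, {"id": 2, "amount": None}]
no_nulls = apply_step(raw, "drop_nulls", drop_nulls)
typed = apply_step(no_nulls, "cast_amount", cast_amount)

for parent, step, child in lineage:
    print(f"{parent} --{step}--> {child}")
```

Because the ID depends only on content, an experiment can record the version it trained on. Anyone can later confirm they are reproducing it on exactly the same data.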

Stage 5: Data Surveillance Mechanisms

To maintain ongoing integrity, businesses implement automated monitoring for data drift, anomalies, and unauthorized access. These mechanisms ensure continuous quality and reliability, safeguarding the foundation that supports future modeling and deployment phases across the entire AI tech stack.
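Automated data monitoring often starts with simple rule-based checks that run on every incoming batch before it reaches training or inference. The sketch below assumes hypothetical rules for each column: a maximum null rate and an allowed value range. It returns a list of violations that a pipeline could log or alert on. The field names and limits are illustrative only.

```python
# Hypothetical rules: maximum share of missing values and an allowed range per column.
RULES = {
    "age": {"max_null_rate": 0.05, "min": 0, "max": 120},
    "income": {"max_null_rate": 0.20, "min": 0, "max": 10_000_000},
}

def check_batch(rows, rules=RULES):
    """Return human-readable violations for a batch of dict records."""
    violations = []
    for column, rule in rules.items():
        values = [row.get(column) for row in rows]
        null_rate = sum(v is None for v in values) / len(values)
        if null_rate > rule["max_null_rate"]:
            violations.append(
                f"{column}: null rate {null_rate:.0%} exceeds {rule['max_null_rate']:.0%}"
            )
        out_of_range = [
            v for v in values if v is not None and not rule["min"] <= v <= rule["max"]
        ]
        if out_of_range:
            violations.append(
                f"{column}: {len(out_of_range)} value(s) outside [{rule['min']}, {rule['max']}]"
            )
    return violations

batch = [
    {"age": 34, "income": 52_000},
    {"age": None, "income": 61_000},
    {"age": 250, "income": None},
]
for problem in check_batch(batch):
    print(problem)
```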

Phase 2: Model Development and Evaluation

This phase of the AI tech stack centers on building, refining, and monitoring AI models to maintain consistent accuracy and long-term reliability. It defines how algorithms are designed, evaluated, and improved as business needs evolve.

Algorithmic Paradigm

Choosing the right algorithm is fundamental to success. Teams align model selection with business goals and problem types, whether predictive analytics, recommendation engines, or classification models. The right approach ensures the AI logic directly supports desired outcomes and performance targets.

Development Ecosystem

A well-structured environment brings together tools, frameworks, and collaboration platforms for developers and data scientists. By integrating shared repositories, version control systems, and workflow automation, the AI tech stack promotes teamwork, consistency, and faster iteration throughout the model lifecycle.

Tracking and Replicability

Versioning systems record every model build and experiment, making replication simple and reliable. This traceability enables teams to roll back to previous checkpoints, compare performance, and continuously enhance outcomes, a key feature of modern AI tech stack designs, including those for AI agents, that prioritize agility and accountability.
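The sketch below shows the bookkeeping behind versioned models and rollback. Each registered version stores its parameters together with its metrics and the dataset version it was trained on. A pointer marks which version is live. The `ModelRegistry` class is hypothetical and kept in memory for illustration. Experiment-tracking and model-registry tools persist the same kind of record in a database or object store.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ModelVersion:
    version: int
    params: dict
    metrics: dict
    data_version: str  # links the model to the dataset version it was trained on

@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)
    live: Optional[int] = None                    # version currently serving traffic
    history: list = field(default_factory=list)  # previously live versions, newest last

    def register(self, params, metrics, data_version):
        mv = ModelVersion(len(self.versions) + 1, params, metrics, data_version)
        self.versions.append(mv)
        return mv.version

    def promote(self, version):
        if self.live is not None:
            self.history.append(self.live)
        self.live = version

    def rollback(self):
        """Restore the previously live version."""
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.live = self.history.pop()

    def compare(self, metric):
        """Map each version number to one recorded metric."""
        return {mv.version: mv.metrics[metric] for mv in self.versions}

registry = ModelRegistry()
v1 = registry.register({"w": 1.9}, {"f1": 0.81}, data_version="a1b2c3")
registry.promote(v1)
v2 = registry.register({"w": 2.1}, {"f1": 0.78}, data_version="d4e5f6")
registry.promote(v2)
print(registry.compare("f1"))  # {1: 0.81, 2: 0.78}
registry.rollback()            # v2 underperforms, so restore v1
print(registry.live)           # 1
```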

Evaluation Metrics

Clear performance indicators such as accuracy, precision, recall, and F1 scores measure ongoing model health. These metrics help identify drift or degradation early, guiding timely adjustments. A structured evaluation process ensures models remain relevant, stable, and aligned with both data patterns and evolving business strategies.
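For a binary classifier, these metrics follow directly from the counts of true and false positives and negatives. The sketch below computes them from scratch on a small set of hypothetical fraud labels. It also shows why accuracy alone can mislead. On imbalanced data, a model can reach 90% accuracy while catching only half of the positive cases, which recall exposes.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical labels: 2 real frauds among 10 transactions; the model catches one.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```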

Best Practices For Building A Reliable AI Tech Stack

Developing a strong AI tech stack requires thoughtful planning and smart design choices to ensure scalability, security, and efficiency. The goal is to create a flexible system that can evolve with technology while maintaining stable performance and compliance.

Choose Flexible Tools

Start by selecting modular tools that connect easily across your ecosystem. Flexible, interoperable solutions make it simple to expand capabilities or replace components as technology advances. This adaptability allows businesses to strengthen their systems over time, adding innovations without interrupting current operations.

Strengthen Security

Safeguarding data is non-negotiable. Encryption, access controls, and ongoing security audits help protect sensitive information from unauthorized access. Implementing a solid governance model also reinforces transparency and compliance, which is critical for maintaining user trust and meeting regulatory standards across industries that rely heavily on AI.

Manage Resources Wisely

Using scalable cloud environments enables businesses to optimize performance while managing costs. Cloud-based automation adjusts computing resources based on real-time demand, preventing waste and maintaining system efficiency. This approach helps enterprises keep their AI tech stack resilient under varying workloads, supporting long-term growth without overspending on infrastructure.
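Demand-based scaling usually follows a target-tracking rule: compare a live metric, such as average CPU utilization, with a target and resize the pool proportionally. The sketch below mirrors the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric). It adds a tolerance band (Kubernetes defaults to 10%) and replica bounds. The specific target, limits, and function name are illustrative assumptions, and the real autoscaler applies additional stabilization logic. Utilization is expressed as an integer percentage.

```python
import math

def desired_replicas(current, current_util_pct, target_util_pct=60,
                     tolerance=0.1, min_replicas=1, max_replicas=20):
    """Proportional target-tracking rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_util_pct / target_util_pct
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90))  # load above target -> scale out to 6
print(desired_replicas(4, 30))  # load below target -> scale in to 2
print(desired_replicas(4, 63))  # within tolerance -> stay at 4
```

The tolerance band and replica bounds are what keep costs predictable. Without them, small metric fluctuations would cause constant resizing, and sudden spikes could provision unlimited capacity.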

Actionable Steps To Improve Your AI Tech Stack

Keeping your AI tech stack efficient and future-ready requires consistent optimization across processes, tools, and infrastructure. The following actions help ensure scalability, reliability, and cost efficiency as your AI initiatives grow.

Regular Audits

Conduct periodic audits of your data pipelines, storage layers, and model performance. These assessments uncover bottlenecks or inefficiencies before they escalate, enabling timely improvements. Regular reviews also strengthen compliance and ensure the stack continues to support evolving business goals effectively.

Automation (MLOps)

Introducing automation through MLOps transforms how models are deployed, monitored, and retrained. Automated pipelines reduce manual work, limit errors, and accelerate updates. With MLOps integrated into the AI tech stack, teams can focus on strategy and innovation while maintaining continuous model performance.

Data Scaling

As your organization collects more information, scalable systems are essential. Cloud-based storage and elastic computing help manage growing datasets without affecting speed or accuracy. This adaptability keeps the AI tech stack responsive and prepared for long-term data expansion.

Cost Management

Effective budgeting is critical. Optimize cloud resources, balance computational usage, and track spending to prevent unnecessary costs. Smart allocation ensures the stack delivers value while maintaining peak performance under variable workloads.

Tool Updates

Staying up to date with new frameworks and libraries keeps your infrastructure relevant and secure. Regularly upgrading ensures access to improved features and stability. This continuous refresh cycle strengthens the overall AI developer tech stack, allowing teams to stay competitive in a fast-moving AI ecosystem.

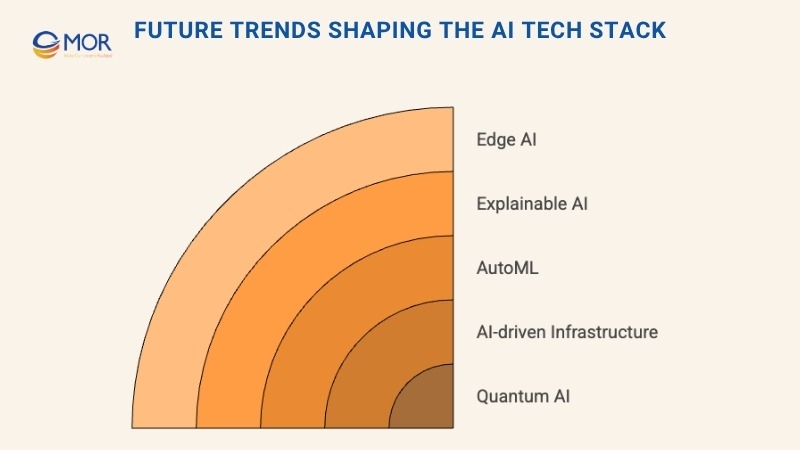

Future Trends Shaping The AI Tech Stack

The modern AI tech stack continues to evolve as organizations demand faster, smarter, and more transparent systems. Below are the leading innovations transforming how enterprises design and deploy AI at scale.

Edge AI

Edge computing brings intelligence closer to where data is generated. By processing information locally, this approach reduces latency and enables faster, real-time decisions. It’s ideal for IoT networks, autonomous systems, and devices that rely on immediate feedback without depending on cloud connections.

Explainable AI

Transparency is becoming a cornerstone of AI adoption. Explainable AI makes model reasoning more interpretable, helping users and regulators understand how decisions are made. For industries like finance or healthcare, this clarity builds trust and ensures accountability within the broader AI tech stack.

AutoML Advancements

AutoML simplifies the creation and tuning of AI models, opening access to teams without deep technical expertise. As these tools mature, they enhance productivity and allow businesses to customize models quickly. This evolution marks a shift toward more intuitive and accessible AI development within the broader AI tech stack.

AI-Driven Infrastructure

Self-optimizing infrastructure is reshaping operations. AI systems now manage computing resources autonomously, allocating workloads, predicting demand, and scaling automatically. This trend improves performance and cost efficiency across enterprise environments, solidifying automation as a core feature of the AI tech stack.

Quantum AI

Quantum computing promises large speedups on certain classes of problems that are impractical for classical systems. When combined with AI algorithms, it could open possibilities in materials science, cryptography, and high-dimensional data modeling. Though still early in development, Quantum AI represents the next frontier for computational intelligence.

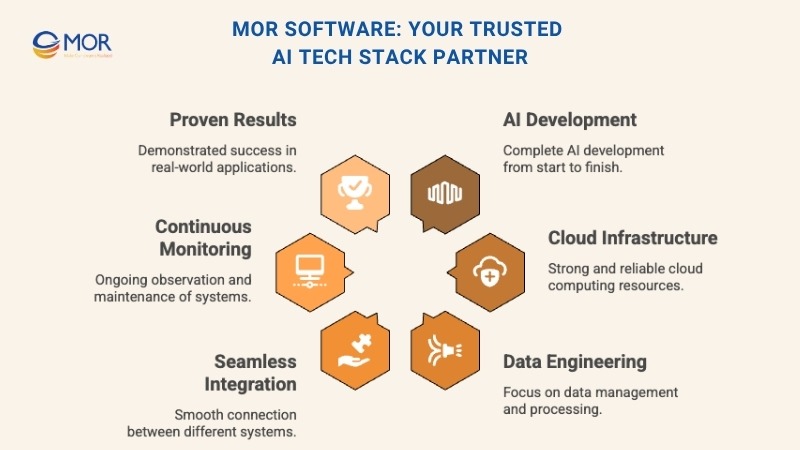

MOR Software: Your Trusted AI Tech Stack Partner

At MOR Software, we help businesses turn complex data into intelligent action. Our AI tech stack brings together the right tools, platforms, and expertise to design, build, and scale smarter digital solutions across industries.

- End-to-End AI Development: From data collection to model deployment, we handle the full AI lifecycle. Our experts combine Python, TensorFlow, and PyTorch to build models that deliver measurable business outcomes.

- Robust Cloud Infrastructure: We use AWS, Google Cloud, and Azure to deliver scalable and secure AI environments. This flexibility allows enterprises to process massive data volumes and deploy models efficiently.

- Data-Centric Engineering: We design systems that prioritize data quality, integrity, and accessibility. SQL, NoSQL, and data lakes are core to our architecture, ensuring consistent performance across applications.

- Seamless Integration: We embed AI into existing workflows, CRMs, and platforms like Salesforce, Slack, or ERP systems. Our approach minimizes disruption and accelerates adoption.

- Continuous Monitoring: Real-time tracking and model validation help businesses maintain accuracy, compliance, and reliability. We use MLOps pipelines with Docker, Kubernetes, and CI/CD for ongoing optimization.

- Industry-Proven Results: From healthcare and finance to smart cities, our AI-powered projects drive measurable growth. Solutions like I-Medisync and N-Cloud show how MOR transforms innovation into impact.

With global offices in Vietnam and Japan, MOR Software provides agile delivery and enterprise-grade security under ISO 27001 standards. We partner with you to build a future where data works smarter, faster, and more human.

Ready to turn your AI vision into reality?

Contact us to start your project today.

Conclusion

Building the right AI tech stack is key to unlocking your organization’s full potential. When every layer, from data to deployment, works seamlessly, AI becomes a true driver of growth and innovation. At MOR Software, we bring technical expertise, cloud scalability, and real-world experience to help you design intelligent systems that last. Ready to accelerate your AI journey? Contact MOR Software today and start transforming data into smarter business outcomes.
