The Ultimate Guide to Interpretability in Machine Learning

As AI systems grow more complex, understanding how they make decisions has never been more important. Interpretability in machine learning helps uncover what happens inside models that shape everyday outcomes. In this guide, MOR Software explains how transparent AI builds trust, improves accuracy, and supports responsible innovation.

What Is Interpretability In Machine Learning?

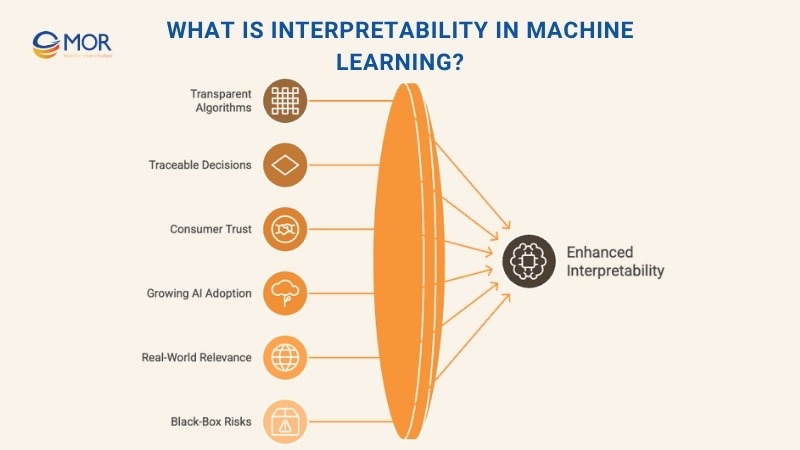

Interpretability in machine learning explains how and why an algorithm makes its predictions. It reveals the logic behind complex systems and helps users see how data, models, and parameters connect to real-world outcomes. When models are interpretable, people can trace the steps that lead to a decision.

Transparency is also important to users. About 61% of U.S. consumers believe that any content generated by AI should be clearly identified, showing that traceability is now an expectation rather than a bonus.

Modern AI models combine vast datasets, layers of algorithms, and sophisticated computation. As models grow deeper and more abstract, understanding their inner workings becomes harder, even for developers who built them. That opacity now touches most companies. McKinsey’s 2025 update finds 71% of organizations regularly use generative AI in at least one business function, up from 65% in early 2024. An interpretable model, by contrast, allows users to follow the reasoning process, making results easier to trust and validate.

As AI interpretability gains attention, its relevance extends beyond research labs. From voice assistants to credit scoring and fraud detection, AI systems are shaping everyday life, which raises the stakes for understanding them. The rise of deep learning, neural networks, and large language models (LLMs) has made this understanding even more crucial. These systems perform impressively but often act like "black boxes," leaving users guessing how outputs are formed.

The risks of “black-box” behavior are no longer theoretical. According to Stanford’s 2025 AI Index, there were 233 reported AI-related incidents in 2024, marking a 56% increase from the previous year. This surge highlights why transparent, explainable systems are becoming essential.

That’s why interpretable models in machine learning matter so much today. Industries like healthcare and finance depend on transparent, fair algorithms to make critical decisions about diagnosis, risk, and access. When professionals and the public understand how AI reaches a conclusion, confidence grows. Trust in technology comes not from automation itself but from the clarity behind its choices.

White-Box And Black-Box Models In AI

In machine learning interpretability, models are often described as either white-box or black-box, depending on how transparent their inner logic is. White-box models, like basic decision trees, make it easy to trace how inputs lead to outputs. Every branch and condition is visible, so users can follow the reasoning without guessing. These models rely on simple structures, such as a short sequence of if-then splits or a weighted sum of features, which makes them highly explainable. The trade-off is that they may miss complex interactions or subtle non-linear relationships in the data, which can limit their predictive accuracy.
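The "every branch is visible" point can be made concrete with a minimal Python sketch. The hand-built decision tree below returns its prediction together with every condition it checked along the way. The tree, its feature names (`debt_to_income`, `credit_years`), the thresholds, and the helper `predict_with_path` are all hypothetical. A real tree would be learned from data, for example with a library such as scikit-learn.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: Union["Node", Leaf]   # branch taken when value <= threshold
    right: Union["Node", Leaf]  # branch taken when value > threshold


def predict_with_path(tree, x):
    """Walk the tree for input x; return the leaf label and every condition checked."""
    path = []
    node = tree
    while isinstance(node, Node):
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical hand-built tree; thresholds are illustrative, not learned from data.
tree = Node(
    feature="debt_to_income",
    threshold=0.4,
    left=Node("credit_years", 2, left=Leaf("review"), right=Leaf("approve")),
    right=Leaf("decline"),
)

label, path = predict_with_path(tree, {"debt_to_income": 0.3, "credit_years": 5})
print(label)  # approve
for step in path:
    print("  because", step)
```

The returned path is the model's entire reasoning for that input, so a reviewer can check each condition directly. A black-box model cannot offer that.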

Black-box models, on the other hand, hide their decision paths inside complex architectures. Deep learning systems, neural networks, and ensemble models often fall into this category. Their internal workings are so layered that even experts find it hard to see why a specific prediction was made. These models can deliver remarkable accuracy, but their opacity raises questions about fairness, trust, and bias. Increasing transparency through visualization tools and post-hoc analysis helps reveal how these systems reach their outputs, making them safer and more reliable to deploy in real-world applications.

Interpretability Vs. Explainability In Machine Learning

Interpretability in machine learning aims to understand how a model works from the inside out. It deals with transparency, showing how features, data, and algorithms interact to produce outcomes. An interpretable model lets users see its structure, logic, and weighting of variables. This clarity helps developers and analysts verify that the system behaves as expected, especially when models influence decisions in sensitive areas.

Explainability, by contrast, focuses on why a model produced a specific prediction. It provides justifications after the output is generated. Explainable AI (XAI) uses tools and methods to make these explanations understandable for humans, translating complex relationships into natural language or visual form. When comparing interpretability and explainability in machine learning, interpretability is about opening the black box, while explainability is about describing what the box did and why. Both are key to building responsible, transparent, and fair AI systems that people can trust.

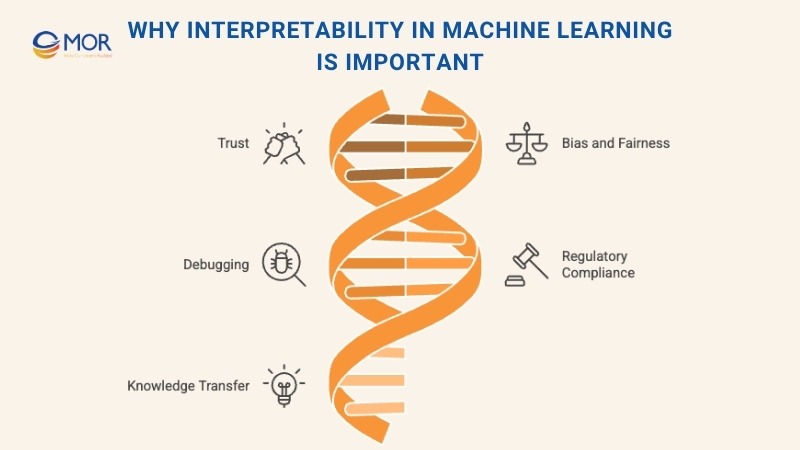

Why Interpretability In Machine Learning Is Important

Interpretability in machine learning allows teams to uncover how and why models make their predictions. It supports debugging, bias detection, regulatory compliance, and trust building. When decision systems affect people's health, finances, or safety, understanding those systems isn't optional; it's a responsibility. Transparent models help developers refine performance while ensuring that technology aligns with ethical and legal standards.

Trust

Trust is the foundation of any intelligent system. When users can’t understand how a model reaches its conclusions, confidence drops quickly. Model interpretability in machine learning bridges that gap by showing the reasoning behind predictions. This visibility makes it easier for doctors to rely on AI in diagnostics, for banks to approve credit responsibly, and for the public to trust automation in daily life. Clear, interpretable systems turn uncertainty into assurance, building confidence across industries that depend on fair and explainable AI.

In consumer markets, for example, 62% of consumers say they would trust brands more if companies were transparent about how AI is used. This shows that clarity directly lifts confidence.

Bias And Fairness

Bias often hides inside training data, where subtle imbalances can shape how a model behaves. When left unchecked, these biases can lead to unfair or discriminatory results, affecting decisions about credit, hiring, or healthcare access. Interpretability in machine learning helps uncover where such patterns emerge and why they occur.

By studying how features influence predictions, developers can spot whether outcomes are being skewed by factors like race, gender, or age. Transparent, interpretable models make it possible to adjust algorithms responsibly, keeping predictions fair and helping organizations avoid both ethical and legal pitfalls.
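One simple way to check whether outcomes are skewed across groups is to compare how often the model predicts a positive outcome for each group. This metric is often called demographic parity. The sketch below is a minimal illustration in plain Python. The predictions, group labels, and function names are hypothetical. Demographic parity is only one of several fairness metrics; others compare error rates rather than approval rates.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive predictions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and a sensitive attribute per applicant.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = positive_rate_by_group(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap like this one is a signal to investigate, not proof of unfairness on its own. The next step is to look at which features drive the difference.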

Debugging

Even the best algorithms make mistakes, and finding them requires clarity. Interpretability in machine learning gives developers visibility into how each feature and decision path contributes to a result. Without that insight, debugging turns into guesswork. When engineers can trace a model’s reasoning, they can quickly locate faulty logic, mislabeled data, or weak correlations that distort predictions.

This transparency makes it easier to fine-tune parameters, retrain models efficiently, and improve accuracy over time. In short, interpretable systems turn debugging from trial and error into a structured, data-driven process that builds stronger, more dependable AI.

Regulatory Compliance

Governments worldwide are tightening rules around automated decision-making. Laws like the Equal Credit Opportunity Act (ECOA) in the U.S., the GDPR in Europe, and the EU AI Act all emphasize transparency and accountability in AI systems. Interpretability in machine learning supports compliance by making model behavior understandable and traceable.

When organizations can explain how predictions are formed, they are better positioned to meet legal standards for fairness and disclosure. Beyond that, interpretable systems simplify auditing, clarify liability, and reinforce data privacy protections. The financial consequences are significant. As of March 1, 2025, EU regulators had issued more than 2,200 GDPR fines totaling roughly €5.65 billion. The EU AI Act now adds further requirements for high-risk systems, including detailed documentation, logging, and human oversight.

As AI interpretability becomes central to governance, companies that prioritize it build safer, more accountable models that regulators and users alike can trust.

Knowledge Transfer

When teams can’t see how a model works, valuable insights stay locked inside the system. Interpretability in machine learning turns those insights into knowledge that people can share, refine, and reuse. It helps developers, analysts, and decision-makers understand why a model behaves a certain way, making collaboration smoother and model improvement faster.

Transparent reasoning also helps in fields such as computational biology and finance, where knowledge gained from one project can guide the next. In short, interpretability keeps AI innovation human-centered by making its intelligence transferable and teachable.

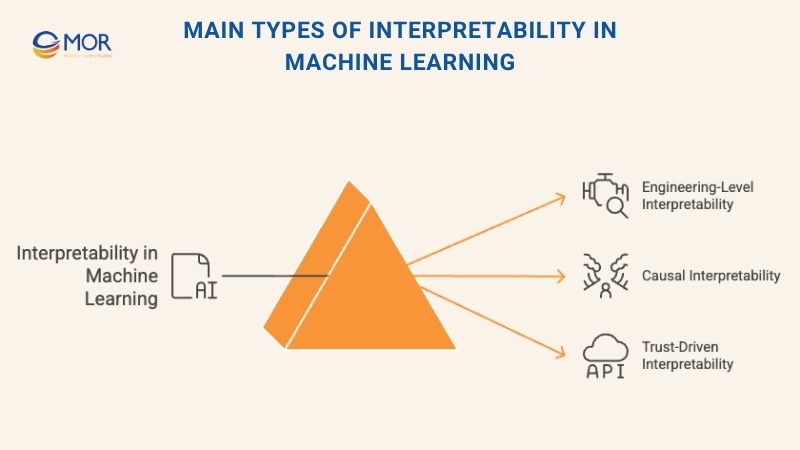

Main Types Of Interpretability In Machine Learning

Researchers often group interpretability in machine learning into three core categories: engineering-level, causal, and trust-driven interpretability. Each type serves a different purpose and audience, from developers who refine models to users who rely on their predictions.

Engineering-Level Interpretability

Engineering-level interpretability explains how a model generates its results. It focuses on the structure, parameters, and logic that connect inputs to outputs. This level of transparency helps data scientists debug, refine algorithms, and strengthen performance. For teams building complex systems, such as clinical decision-support tools in healthcare, it ensures that every step can be examined, verified, and improved.

Causal Interpretability

Causal interpretability answers why a model behaves a certain way. It identifies which variables most affect predictions and how altering them changes outcomes. This approach uncovers relationships hidden in the data and points toward cause-and-effect links that can guide better decision-making and scientific discovery. However, a model trained on observational data does not by itself establish real-world causation.

Trust-Driven Interpretability

Trust-driven interpretability focuses on communication, not code. It’s about explaining predictions in human terms so users can relate to the reasoning behind them. This type of interpretability helps non-technical audiences understand model outputs clearly, building confidence and acceptance in practical applications like finance, education, or public policy.

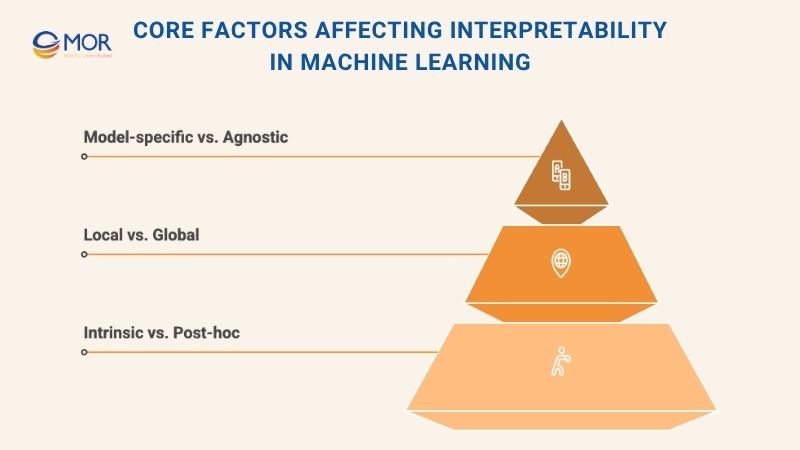

Core Factors Affecting Interpretability In Machine Learning

Several characteristics shape interpretability in machine learning, influencing how models can be explained and understood:

- Intrinsic vs. post-hoc

- Local vs. global

- Model-specific vs. model-agnostic

Intrinsic Vs. Post-Hoc

Intrinsic interpretability comes from models designed to be transparent from the start. Decision trees, linear regression, and rule-based systems fall into this group. Their logic is simple enough for users to follow without extra tools. Post-hoc interpretability, on the other hand, involves analyzing complex models after they have been trained. It uses external methods to explain how the model behaves. This is especially valuable for black-box models, whose internal mechanics are too complicated to observe directly.

Local Vs. Global

Local interpretability explains a single prediction: why a specific input led to a certain output. It's often used when developers need to validate one case or detect anomalies. Global interpretability provides the bigger picture by analyzing the model's behavior across the entire dataset, revealing trends, biases, and overall logic. Both are vital to achieving full AI interpretability and ensuring consistency in decision-making.
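For a linear model, the local and global views are easy to compute side by side. The sketch below uses a hypothetical scoring model with made-up weights and data. The local explanation measures how far each feature pushes one prediction away from the prediction for the average input. The global view averages the absolute size of those contributions across every row. Averaging absolute local contributions is one common way to build a global summary, not the only one.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income": 0.5, "debt": -2.0, "age": 0.05}
BIAS = 0.2


def predict(x):
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def feature_means(data):
    return {f: sum(row[f] for row in data) / len(data) for f in WEIGHTS}


def local_contributions(x, means):
    """Local view: how much each feature moves this prediction away from the prediction at the means."""
    return {f: WEIGHTS[f] * (x[f] - means[f]) for f in WEIGHTS}


def global_importance(data):
    """Global view: average absolute contribution of each feature across the dataset."""
    means = feature_means(data)
    totals = {f: 0.0 for f in WEIGHTS}
    for row in data:
        for f, c in local_contributions(row, means).items():
            totals[f] += abs(c)
    return {f: totals[f] / len(data) for f in WEIGHTS}


data = [
    {"income": 4.0, "debt": 1.0, "age": 30.0},
    {"income": 2.0, "debt": 3.0, "age": 40.0},
    {"income": 6.0, "debt": 2.0, "age": 50.0},
]
means = feature_means(data)
local = local_contributions(data[0], means)  # explains one applicant
overall = global_importance(data)            # summarizes the model over all rows
print(local)
print(overall)
```

Because the model is linear, the local contributions add up exactly to the difference between this prediction and the prediction at the feature means. In this toy data, `debt` dominates globally, while for the first applicant `age` pulls the score down.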

Model-Specific Vs. Model-Agnostic

Model-specific interpretability methods rely on understanding a model's internal design to create explanations. In contrast, model-agnostic methods can be applied to any algorithm, regardless of its structure. This flexibility makes them ideal for comparing different models and improving explainability across varied AI systems.
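Permutation feature importance is one widely used model-agnostic technique. It is not named in this article and is included here only as an illustration. The method shuffles one feature's values, re-scores the model, and measures how much the error grows. The sketch treats the model purely as a callable, so it would work unchanged for a tree ensemble or a neural network. The `black_box` function and toy data are hypothetical. scikit-learn offers a production version as `sklearn.inspection.permutation_importance`.

```python
import random


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Average increase in error when one feature column is shuffled.

    `predict` maps a list of rows to a list of predictions; it is treated as a
    black box, which is what makes the method model-agnostic.
    """
    rng = random.Random(seed)
    baseline = metric(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(metric(y, predict(X_perm)) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def mse(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


# Hypothetical black box: only feature 0 affects the output.
def black_box(X):
    return [3.0 * row[0] for row in X]


X = [[float(i), float(i % 3)] for i in range(20)]
y = black_box(X)
importances = permutation_importance(black_box, X, y, mse)
print(importances)
```

Shuffling the second feature leaves every prediction unchanged, so its importance is exactly zero. Shuffling the first feature breaks the model's only real signal.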

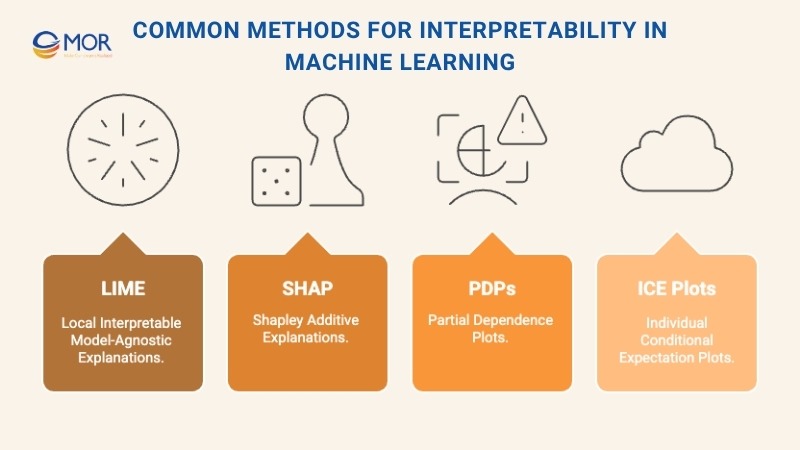

Common Methods For Interpretability In Machine Learning

There are many ways to achieve interpretability in machine learning, depending on how complex a model is and how much transparency it needs.

Some models are inherently interpretable because of their simplicity. Decision trees, rule-based systems, and linear regressions fall into this group. Their logic can be traced easily, showing clear patterns between input and output. These are often used when transparency and accountability matter more than raw predictive power.

For more advanced or black-box models, interpretability must be added after training through post-hoc methods. These techniques reveal how features influence predictions without changing the model’s structure. Some of the most widely used post-hoc approaches include:

- Local Interpretable Model-Agnostic Explanations (LIME)

- Shapley Additive Explanations (SHAP)

- Partial Dependence Plots (PDPs)

- Individual Conditional Expectation (ICE) Plots

Local Interpretable Model-Agnostic Explanations (LIME)

LIME explains one prediction at a time by approximating the complex model with a simple, interpretable one in the neighborhood of that prediction. It generates many samples with small variations in feature values and observes how those changes affect the model's predictions. Samples that stay close to the original input receive more weight.

From these results, LIME constructs an easy-to-understand model that mirrors the local behavior of the larger system. This method highlights how specific features contribute to a particular output, making it a practical tool for AI interpretability in areas like healthcare, finance, and customer analytics, where understanding individual outcomes is critical.
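The sketch below captures the core LIME idea in plain Python. It perturbs the input, queries the black box, weights each sample by its closeness to the original point, and fits a weighted linear model whose slopes serve as the local explanation. This is a simplified illustration, not the `lime` package's implementation. Among other differences, that package samples tabular features differently and can select a sparse subset of features. The black-box function, noise scale, and kernel width are assumptions chosen for the example.

```python
import math
import random


def solve(A, b):
    """Solve the square linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lime_style_explanation(predict, x, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x and return its per-feature slopes."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]         # perturbed copy of x
        delta = [zi - xi for zi, xi in zip(z, x)]
        rows.append([1.0] + delta)                            # 1.0 column = intercept
        targets.append(predict(z))                            # query the black box
        dist2 = sum(d * d for d in delta)
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # closer samples count more
    # Weighted least squares via the normal equations: (A^T W A) beta = A^T W y.
    k = len(x) + 1
    AtWA = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    AtWy = [sum(w * r[i] * t for r, t, w in zip(rows, targets, weights)) for i in range(k)]
    beta = solve(AtWA, AtWy)
    return beta[1:]  # drop the intercept; the slopes are the local feature effects


# Hypothetical non-linear black box: f(a, b) = a**2 + 3*b.
def black_box(z):
    return z[0] ** 2 + 3.0 * z[1]


effects = lime_style_explanation(black_box, [2.0, 1.0])
print(effects)
```

For the toy function a² + 3b, the fitted slopes come out close to 4 and 3 at the point (2, 1). This matches the function's local gradient there, even though the function itself is not linear.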

Shapley Additive Explanations (SHAP)

SHAP uses concepts from cooperative game theory to explain how each feature contributes to a model's prediction. In their exact form, Shapley values are computed by considering every possible combination of features and averaging each feature's marginal impact on the outcome. This gives each feature a measurable contribution score. Together, the scores add up to the difference between the prediction and a baseline (average) prediction.

SHAP can be applied to nearly any type of machine learning model, making it a flexible tool for both local and global analysis. Exact computation grows exponentially with the number of features, so practical implementations rely on approximations or model-specific shortcuts. Even then, SHAP can be slow for large-scale systems. Still, it remains one of the most widely used techniques for explaining model predictions, balancing precision with clarity.
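To show what "every possible combination of features" means, here is a minimal exact Shapley computation in Python. It follows the classic Shapley weighting and treats a feature as "absent" by swapping in a baseline value, which is one simple choice among several. The `shap` library instead uses faster approximations and model-specific algorithms, and it typically averages over a background dataset. The model, input, and all-zeros baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    A feature outside the coalition is replaced by its baseline value.
    Enumerating every subset costs O(2^n) model calls, which is why exact
    computation only scales to a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model with an interaction between features 0 and 1.
def model(z):
    return 2.0 * z[0] + z[1] + z[0] * z[1] - 0.5 * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
# Efficiency property: the values sum to model(x) - model(baseline), up to rounding.
print(sum(phi), model(x) - model(baseline))
```

Here the product term `z[0] * z[1]` contributes 2 and is split evenly between features 0 and 1. That gives contributions of roughly 3, 3, and -1.5, which sum to the 4.5 gap between the prediction and the baseline prediction.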

Partial Dependence Plots (PDPs)

Partial Dependence Plots visualize how a model's average prediction changes as one feature varies. For each value on a grid, the feature is set to that value in every row of the dataset while the other features keep their observed values, and the resulting predictions are averaged. PDPs reveal whether a feature has a positive, negative, or nonlinear relationship with the output.

This helps analysts understand how specific factors drive a model's predictions, even when the model itself is a black box. PDPs are particularly helpful when teams want to focus on a few key variables and need clear, visual explanations that can be shared easily with non-technical stakeholders.
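A partial dependence curve can be computed in a few lines. Fix the chosen feature at each grid value for every row, predict, and average. The sketch below uses a hypothetical model f(a, b) = a² + a·b and three made-up rows. Because b averages to zero in this data, the curve traces a² alone. This is a reminder that averaging can hide interactions.

```python
def partial_dependence(predict, X, feature, grid):
    """For each grid value v: set `feature` to v in every row, predict, and average."""
    curve = []
    for v in grid:
        modified = [row[:feature] + [v] + row[feature + 1:] for row in X]
        preds = [predict(row) for row in modified]
        curve.append(sum(preds) / len(preds))
    return curve


# Hypothetical model with an interaction between features 0 and 1.
def model(row):
    return row[0] ** 2 + row[0] * row[1]


X = [[0.0, -1.0], [1.0, 1.0], [2.0, 0.0]]  # toy rows: [a, b]
grid = [0.0, 1.0, 2.0]
pd_curve = partial_dependence(model, X, feature=0, grid=grid)
print(pd_curve)  # [0.0, 1.0, 4.0]
```

In practice the grid usually spans the observed range of the feature, and the curve is plotted rather than printed.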

Individual Conditional Expectation (ICE) Plots

ICE plots show how a single feature influences the prediction for each individual data point. Unlike PDPs, which average results across an entire dataset, ICE plots draw one curve per instance, making them more granular. Averaging all the ICE curves point by point yields the PDP.

They reveal how feature interactions change outcomes for specific cases and help detect unusual or inconsistent behaviors. This granularity makes ICE plots especially valuable for researchers studying AI interpretability and for stakeholders who need to identify outliers or unexpected trends within complex models.
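The ICE approach keeps one curve per row instead of averaging. The sketch below uses a hypothetical model f(a, b) = a² + a·b and three toy rows. Each row's curve rises at a different rate depending on its value of b. Averaging the curves point by point reproduces the partial dependence curve.

```python
def ice_curves(predict, X, feature, grid):
    """One curve per row: that row's prediction as `feature` sweeps over `grid`."""
    curves = []
    for row in X:
        curve = []
        for v in grid:
            modified = row[:feature] + [v] + row[feature + 1:]
            curve.append(predict(modified))
        curves.append(curve)
    return curves


# Hypothetical model with an interaction between features 0 and 1.
def model(row):
    return row[0] ** 2 + row[0] * row[1]


X = [[0.0, -1.0], [1.0, 1.0], [2.0, 0.0]]  # toy rows: [a, b]
grid = [0.0, 1.0, 2.0]
curves = ice_curves(model, X, feature=0, grid=grid)
for row, curve in zip(X, curves):
    print(row, curve)

# The pointwise mean of the ICE curves is the partial dependence curve.
pdp = [sum(c[k] for c in curves) / len(curves) for k in range(len(grid))]
print(pdp)  # [0.0, 1.0, 4.0]
```

The row with b = 1 climbs fastest, while the row with b = -1 stays flat before rising. The averaged curve alone would not reveal this difference.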

Practical Use Cases Of Interpretability In Machine Learning

Interpretability in machine learning plays a vital role in industries where AI influences real people and high-stakes decisions. Understanding how models reason and act helps ensure fairness, compliance, and trust across fields that depend on data-driven intelligence.

Healthcare

In medicine, interpretable models allow doctors to understand how an AI system reaches a diagnosis or treatment recommendation. When professionals can trace the reasoning behind a prediction, they can confirm accuracy, spot errors, and make safer choices. This transparency helps both clinicians and patients trust AI-assisted outcomes.

Finance

Financial institutions use AI for fraud detection, credit scoring, and risk analysis. Here, interpretability becomes essential for meeting auditing and regulatory standards. Clear explanations of why a loan was denied or an investment flagged can help surface bias and protect both banks and customers from unfair decisions.

Criminal Justice

In law enforcement and forensic analysis, AI interpretability supports fair and transparent use of predictive tools. It helps keep models that analyze DNA, evidence, or sentencing data accountable and unbiased. Without interpretability, errors in data or logic could influence serious legal outcomes.

Human Resources

When companies use AI for hiring or candidate screening, transparency is key. Interpretability helps HR teams understand how resumes are scored and surface hidden bias in recruitment processes, supporting fairer, more inclusive hiring decisions.

Insurance

AI supports risk assessment, policy pricing, and claims management in insurance. Interpretable systems allow both customers and insurers to understand how rates are determined, improving trust and simplifying compliance audits under consumer protection laws.

Customer Support

AI chatbots and virtual assistants now shape customer interactions across industries. By applying explainable AI techniques to chatbot decisions, businesses can see why certain recommendations are made. They can then adjust their systems for accuracy, fairness, and personalization, strengthening user confidence and loyalty.

Current Challenges And Limits Of Interpretability In Machine Learning

While interpretability in machine learning is essential, it also comes with clear challenges and trade-offs. Simpler white-box models like decision trees are easy to understand but may sacrifice predictive accuracy. Complex black-box systems, such as deep neural networks, often perform better but hide their logic behind layers of abstraction, making them harder to explain. Balancing clarity and performance remains one of the field’s biggest struggles.

Another issue is the lack of standardization. Different interpretation techniques can produce conflicting explanations for the same model, leaving researchers unsure which result is most reliable. Since interpretability can be subjective, what seems transparent to a data scientist may still feel unclear to a policymaker or end user.

Experts also point out that interpretability isn't always necessary or even desirable. For private, low-impact systems, forcing interpretability can waste resources or expose sensitive model details. In high-risk settings, excessive transparency might even backfire by allowing malicious users to manipulate or reverse-engineer the model. As research advances, the goal is to find a balance where interpretability supports accountability without compromising security or performance.

Work With MOR Software For Interpretability In Machine Learning

At MOR Software, we specialize in developing AI and machine learning solutions that are transparent, ethical, and practical for real-world applications. Our engineering teams design systems that balance accuracy with interpretability, allowing businesses to understand how their models make decisions and build trust with users.

We apply data visualization, model auditing, and bias detection techniques to help clients meet compliance standards and ensure their AI models perform fairly. Whether it's a healthcare platform requiring decision transparency or a financial system needing explainable algorithms, we build AI models that are interpretable, reliable, and scalable.

With a proven track record across 850+ projects in more than 10 countries, MOR Software continues to help global enterprises make smarter, more accountable use of machine learning technology.

Contact us to learn how we can help your organization apply interpretability in machine learning to create ethical, high-performing AI systems.

Conclusion

Interpretability in machine learning isn't just a technical feature; it's the foundation of trust and accountability in AI. As organizations rely more on intelligent systems, understanding how those systems work becomes critical. At MOR Software, we turn complex models into transparent, reliable tools that support fair and informed decision-making. Contact us today to discover how interpretable AI can strengthen your business and shape smarter, more ethical innovation.
